Create a Snowflake Connection in Airflow

Snowflake is a cloud data warehouse where you can store and analyze your data. Integrating Snowflake with Airflow allows you to do all of the following and more from a DAG:

- Run SQL

- Monitor the status of SQL queries

- Run a Snowpark Python function

- Load and export data to/from Snowflake

This guide provides the basic setup for creating a Snowflake connection. For a complete integration tutorial, see Orchestrate Snowflake Queries with Airflow. To run Snowpark queries in Airflow, see Orchestrate Snowpark Machine Learning Workflows with Apache Airflow.

Prerequisites

- The Astro CLI.

- A locally running Astro project.

- A Snowflake account.

Connection options

When creating a Snowflake connection in Airflow, you can choose from the following options:

- Private Key (recommended): Use key-pair authentication with Snowflake.

- Password: Use a username and password to authenticate with Snowflake. This method is only recommended for testing purposes.

Private Key authentication

A private key connection from Airflow to Snowflake requires the following information:

Base parameters:

conn_id: A unique name for the connection.conn_type:snowflake. Note that you need to install theapache-airflow-providers-snowflakeprovider package to use this connection type.login: The user you authenticate with. Note that some operators require the user to be properly capitalized.password: The passphrase of the private key. If your private key is not encrypted (not recommended), you can leave this field as an empty string.schema: The default schema for the connection, this can be overridden in the operator.

Parameters in the extra field:

account: The account identifier from your Snowflake account URL in the formatabc12345.warehouse: The default warehouse for this connection, this can be overridden in the operator.database: The default database for the connection, this can be overridden in the operator.region: The region identifier from your Snowflake account URL in the formatus-west-2. Note that for some regions, you might have to include the cloud provider identifier after the region name, see the Snowflake documentation on account identifiersrole: The role you want Airflow to have in Snowflake. Note that some operators require the user to be properly capitalized.private_key_content: The content of your private key file in the format"-----BEGIN ENCRYPTED PRIVATE KEY-----\nABC...ABC\nABC...ABC=\n-----END ENCRYPTED PRIVATE KEY-----\n",.private_key_file: alternatively toprivate_key_content, you can provide the path to your private key file.

Optional:

authenticator:snowflake(default). To connect using OAuth set this parameter oauth.refresh_token: The refresh token for OAuth authentication.session_parameters: A dictionary of session parameters to set for the connection.insecure_mode:false(default). Set totrueto disable OCSP certificate checks.

See the template below for a private key connection in JSON format:

AIRFLOW_CONN_SNOWFLAKE_DEFAULT='{

"conn_type":"snowflake",

"login":"<your user, properly capitalized>",

"password":"<your private key passphrase>",

"schema":"DEMO_SCHEMA",

"extra":{

"account":"<your account id in the form of abc12345",

"warehouse":"<your warehouse>",

"database":"DEMO_DB",

"region":"<your region>",

"role":"<your role, properly capitalized>",

"private_key_content":"-----BEGIN ENCRYPTED PRIVATE KEY-----\nABC...ABC\nABC...ABC=\n-----END ENCRYPTED PRIVATE KEY-----\n"

}'

Get connection details

Complete the following steps to retrieve the needed connection values:

- Snowsight

- Snowflake classic console

-

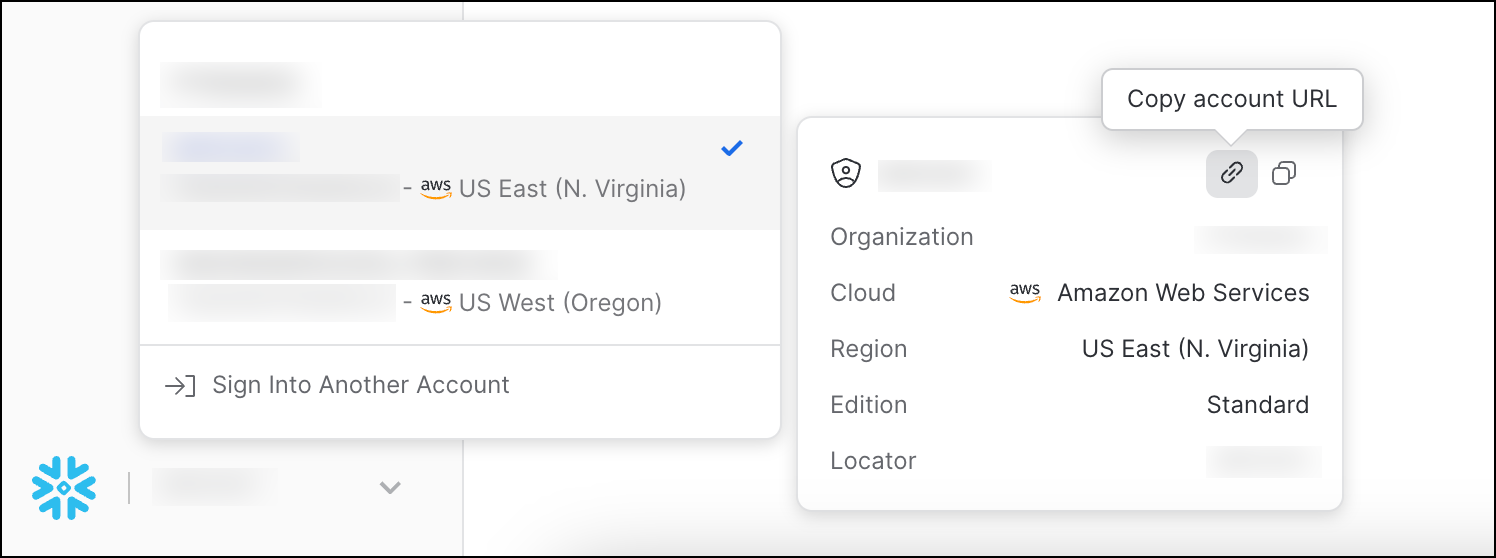

Open Snowsight. Follow the Snowflake documentation to open the account selector at the end of the left nav. Hover over your account to see more details, then click the Copy URL icon to copy the account URL. The URL has a similar format to

https://<account-identifier>.<region>.snowflakecomputing.com/. Copy<account-identifier>and<region>from the URL.

When you copy your region, you might have to additionally copy the cloud provider identifier after the region name for some GCP and some AWS regions. For example, if your account URL is https://ZS86751.europe-west4.gcp.snowflakecomputing.com, then your region will be europe-west4.gcp. See Account identifiers to learn more about Snowflake's account types and their identifiers.

-

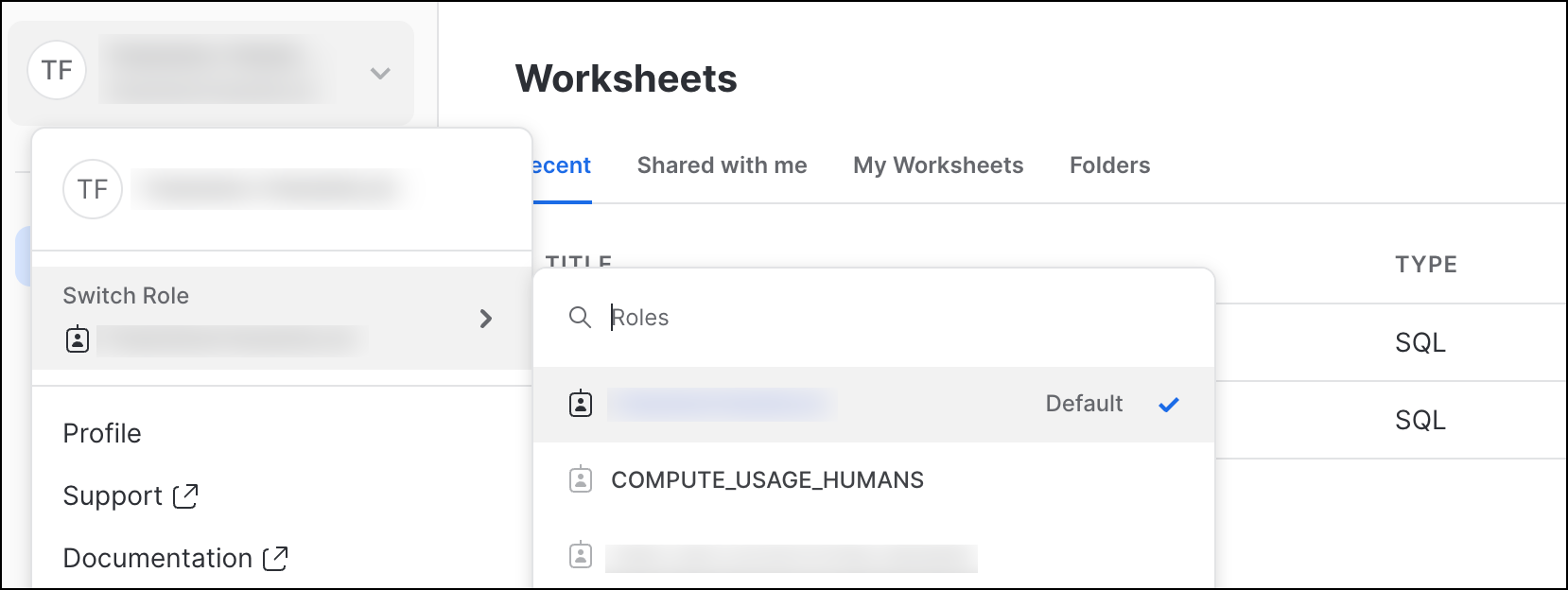

Click the user menu at the beginning of the left sidebar and copy the role you want Airflow to have in Snowflake. You can click Switch Role to see all the available roles.

-

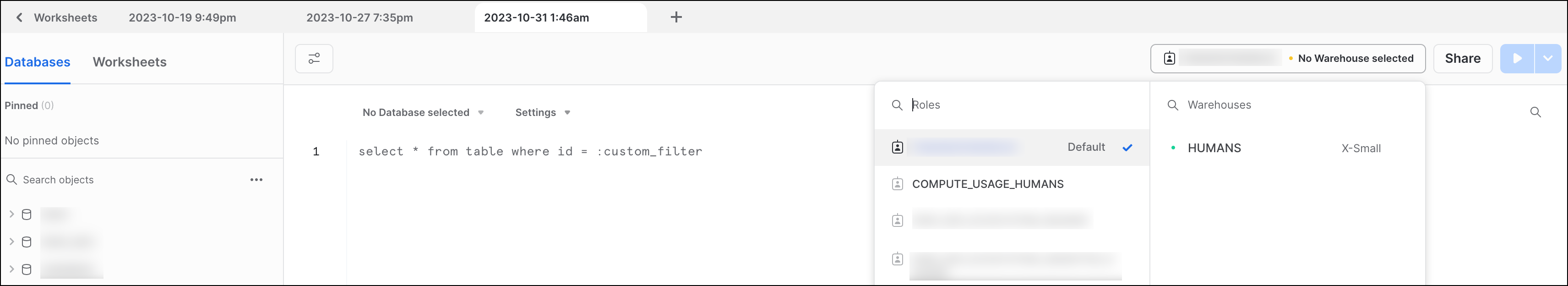

Copy the name of your Warehouse. To see all available warehouses, open a new Worksheet and open the context selector menu in the content pane.

- Open the Snowflake classic console and locate the URL for the page. The URL should be in the format

https://<account-identifier>.<region>.snowflakecomputing.com/. Copy<account-identifier>and<region>from the URL.

When you copy your region, you might have to additionally copy the cloud provider identifier after the region name for some GCP and some AWS regions. For example, if your account URL is https://ZS86751.europe-west4.gcp.snowflakecomputing.com, then your region will be europe-west4.gcp. See Account identifiers to learn more about Snowflake's account types and their identifiers.

-

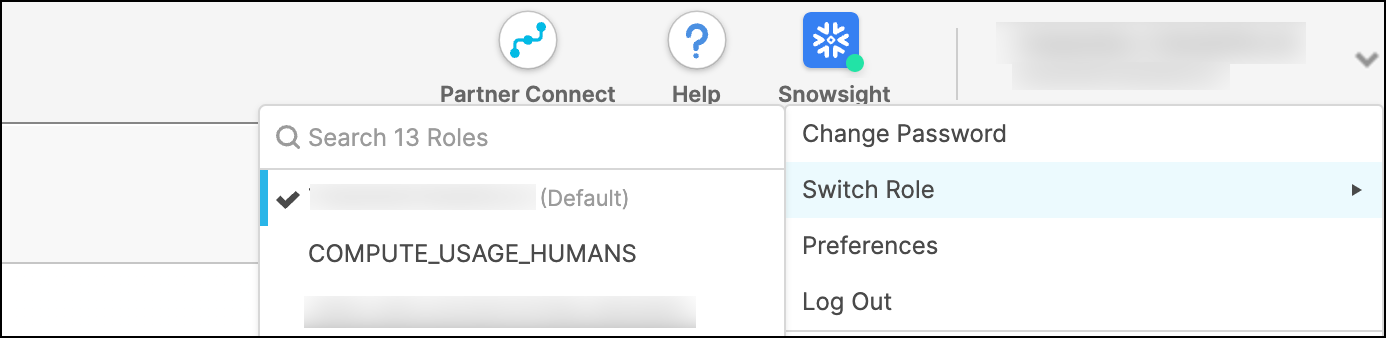

Click on your account name in the top right corner and hover over Switch Role to see a list of all available roles. Copy your Role.

-

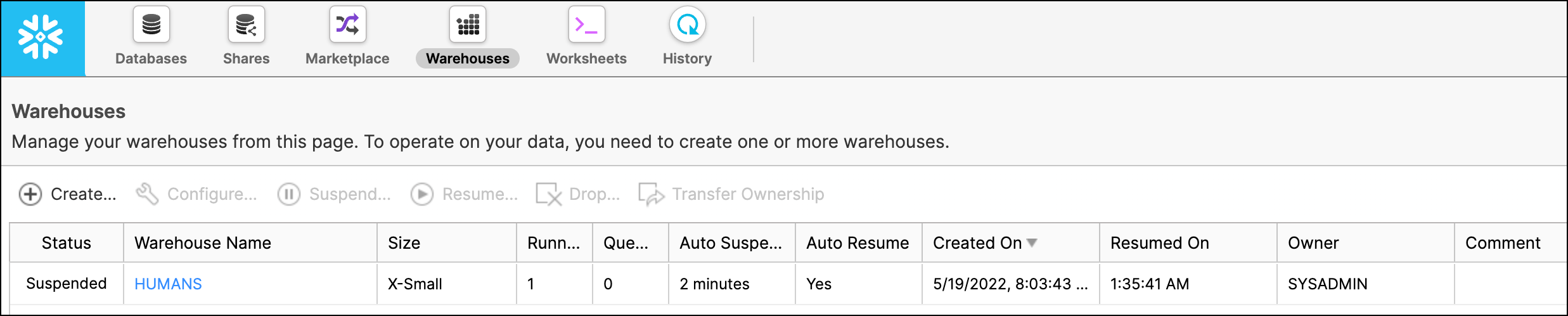

Copy your Warehouse from the Warehouses tab.

-

Copy the names for your Database and Schema.

-

In your terminal run the following command to generate a private RSA key using OpenSSL. Note that while there are other options to generate a key pair, Snowflake has specific requirements for the key format and may not accept keys generated with other tools. Make sure to write down the key passphrase as you will need it later.

openssl genrsa 2048 | openssl pkcs8 -topk8 -inform PEM -out rsa_key.p8 -

Generate the associated public key using the following command:

openssl rsa -in rsa_key.p8 -pubout -out rsa_key.pub -

In the Snowflake UI, create a new user that Airflow can use to access Snowflake. Copy the username and password.

-

Add the public key to the user you created in Snowflake. In the Snowflake UI, run the following command. You can paste the public key directly from the

rsa_key.pubfile without needing to modify it.ALTER USER <your user> SET RSA_PUBLIC_KEY='<your public key>'; -

Run the following script to format the private key to replace all newlines with

\n.def format_private_key(private_key_path):

with open(private_key_path, 'r') as key_file:

private_key = key_file.read()

return private_key.replace('\n', '\\n')

formatted_key = format_private_key('rsa_key.pem')

print(formatted_key)

Password authentication

When using password instead of a private key authentication, all connection parameters stay the same with the following changes:

login: Your Snowflake login username (typically your email address).password: Your Snowflake login password.private_key_content: Remove this field from the connection JSON.

Note that this method will not work when using 2FA or MFA with Snowflake or with the SnowflakeSqlApiOperator.

Create your connection

Airflow connections can be created using multiple methods, such as environment variables, the Airflow UI or the Airflow CLI. The following example shows how to create a Snowflake connection using the Airflow UI.

For Astro customers, Astronomer recommends to take advantage of the Astro Environment Manager to store connections in an Astro-managed secrets backend. These connections can be shared across multiple deployed and local Airflow environments. See Manage Astro connections in branch-based deploy workflows. Note that when using the Astro Environment Manager for your Snowflake connection, you can directly paste the private key into the Private Key Content field in the UI without needing to modify it.

-

Open your Astro project and add the following line to your

requirements.txtfile:apache-airflow-providers-snowflake=>5.7.0This will install the Snowflake provider package, which makes the Snowflake connection type available in Airflow.

-

Run

astro dev restartto restart your local Airflow environment and apply your changes inrequirements.txt. -

In the Airflow UI for your local Airflow environment, go to Admin > Connections. Click + to add a new connection.

-

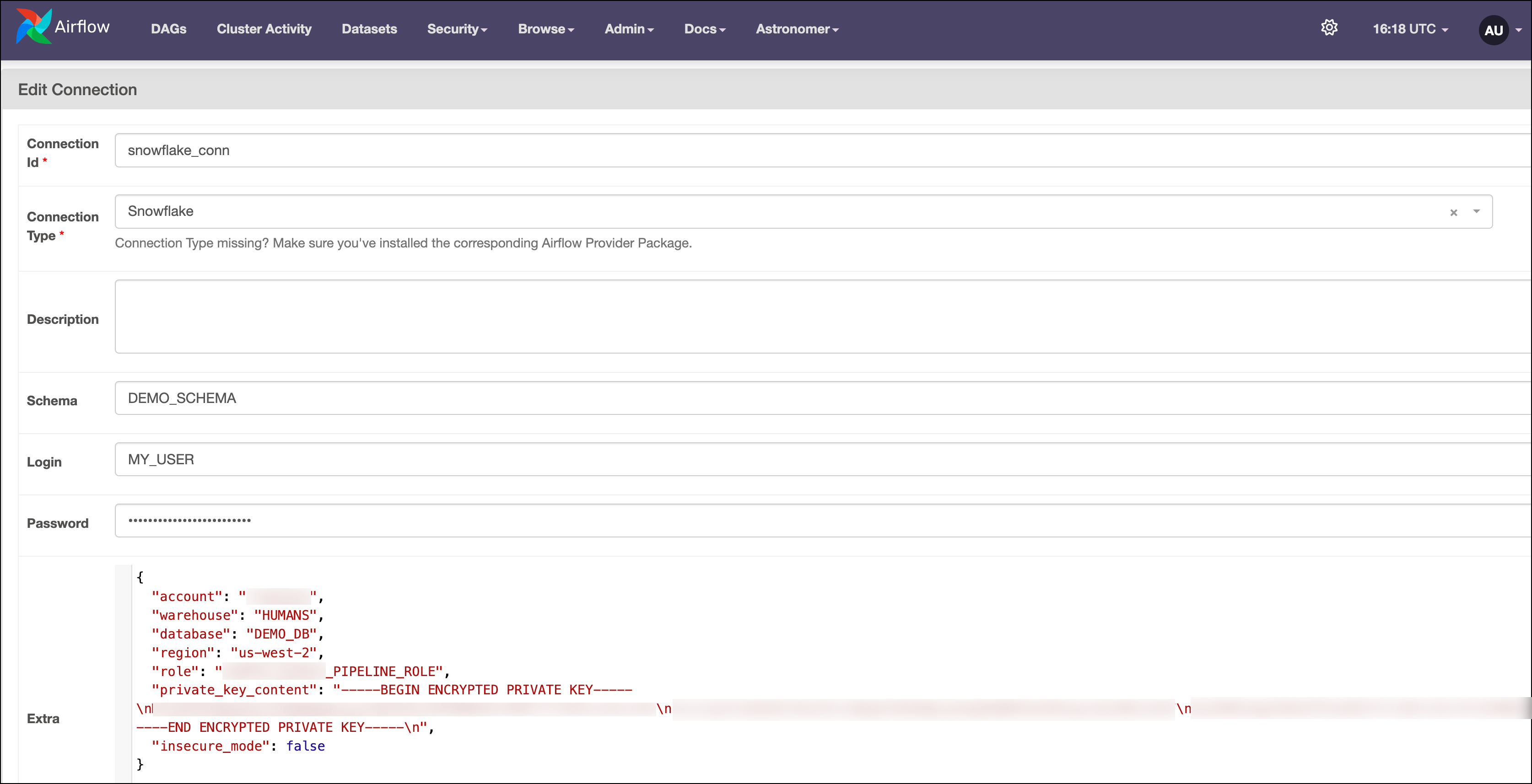

Fill out the following connection fields using the information you retrieved from Get connection details:

-

Connection Id: Enter a name for the connection.

-

Connection Type: Select

Snowflake. If you don't see this option, make sure you've added theapache-airflow-providers-snowflakeprovider package to yourrequirements.txtfile. -

Description: (Optional) Enter a description for the connection.

-

Schema: Enter your default schema.

-

Login: Enter your user. Make sure it's properly capitalized.

-

Password: Enter your private key passphrase.

-

Extra: Enter the following JSON in the extra field and replace the values with your Snowflake connection details. Add any optional parameters as needed.

{

"account": "<your account id in the form of abc12345>",

"warehouse": "<your warehouse>",

"database": "<your database>",

"region": "<your region>",

"role": "<your role in capitalized format>",

"private_key_content": "-----BEGIN ENCRYPTED PRIVATE KEY-----\nABC...ABC\nABC...ABC=\n-----END ENCRYPTED PRIVATE KEY-----\n"

}

Your connection should look something like the screenshot below.

-

How it works

Airflow uses the Snowflake connector Python package to connect to Snowflake through the SnowflakeHook.

The SnowflakeSqlApiOperator uses the Snowflake SQL API via the SnowflakeSqlApiHook.

See also

- Snowflake Airflow provider and the Snowflake Airflow provider package documentation

- Orchestrate Snowflake Queries with Airflow tutorial

- ELT with Snowflake and Apache Airflow® for eCommerce reference architecture

- Common SQL Airflow provider and the Common SQL Airflow provider package documentation

- Import and export Airflow connections using Astro CLI

- See how Snowflake pipelines can run on Astro