Create a BigQuery connection in Airflow

BigQuery is Google's fully managed and serverless data warehouse. Integrating BigQuery with Airflow lets you execute BigQuery jobs from a DAG.

There are multiple ways to connect Airflow and BigQuery, all of which require a GCP Service Account:

- Use the contents of a service account key file directly in an Airflow connection.

- Copy the service account key file to your Airflow project.

- Store the contents of a service account key file in a secrets backend.

- Use a Kubernetes service account to integrate Airflow and BigQuery. This is possible only if you run Airflow on Astro or Google Kubernetes Engine (GKE).

Using a Kubernetes service account is the most secure method because it doesn't require storing a secret in Airflow's metadata database, on disk, or in a secrets backend. The next most secure connection method is to store the contents of your service account key file in a secrets backend.

If you're an Astro user, Astronomer recommends using workload identity to authorize to your Deployments to BigQuery. This eliminates the need to specify secrets in your Airflow connections or copying credentials file to your Astro project. See Authorize Deployments to your cloud.

Prerequisites

- The Astro CLI.

- A locally running Astro project.

- A Google Cloud project with BigQuery API enabled.

- Permissions to create an IAM service account or use an existing one. See Google documentation.

Get connection details

A connection from Airflow to Google BigQuery requires the following information:

- Service account name

- Service account key file

- Google Cloud Project ID

Complete one of the following sets of steps to retrieve these values:

- Key file value

- Key file in container

- Key file in secrets backend

- Kubernetes service account

This method requires you to save the contents of your service account key file in your Airflow connection.

- In your Google Cloud console, select your Google Cloud project and copy its ID.

- Create a new service account.

- Grant roles to your service account so that it can access BigQuery. See BigQuery roles for a list of available roles and the permissions.

- Add a new JSON key file to the service account.

- Copy the contents of the key file.

This method requires you to mount your service account key file to your Airflow containers.

- In your Google Cloud console, select your Google Cloud project and copy its ID.

- Create a new service account.

- Grant roles to your service account so that it can access BigQuery. See BigQuery roles for a list of available roles and the permissions.

- Add a new JSON key file to the service account.

- Download the key file.

You can save your service account key file to any secrets backend. See Configure a secrets backend for steps on how to configure several popular secrets backend services to use with Airflow on Astro. For example, if you use Google Secret Manager as a secrets backend:

- In the Google Cloud console, select your Google Cloud project and copy its ID.

- Create a new service account.

- Grant roles to your service account so that it can access BigQuery. See BigQuery roles for a list of available roles and the permissions.

- Add a new JSON key file to the service account.

- Download the key file.

- Create a secret in Google Secret Manager and upload the key file from Step 5 as a secret value. Then, copy the ID of your secret name.

- Follow Astronomer's documentation to configure secrets backend for your Astro project.

You can now use this secret in your Airflow connections.

A Kubernetes service account provides an identity to the processes running in a Pod. The process running inside a Pod can use this identity of its associated service account to authenticate cluster's API server. This is also referred to as Workload Identity in GCP and Azure.

This method cannot be used in a local Airflow environment. It is available to use with Airflow on Astro or OSS Airflow running on Kubernetes clusters.

If you're running Airflow in a GKE cluster, complete the following steps:

- In your Google Cloud console, open the Google Cloud project where you're running BigQuery and copy its ID.

- Enable Workload Identity and configure Airflow to use workload identity. Copy the name for the Kubernetes service account that you create.

- Go to IAM, then click Service Accounts and search for your Kubernetes service account. If you don't see your service account, click + ADD to add your service account to your Google Cloud project.

- Grant roles to your service account to access BigQuery. See BigQuery roles for a list of available roles and the permissions.

After you complete these steps, any Google Cloud connection you create in the Deployment will use your workload identity by default to access BigQuery.

Create your connection

- Key file value

- Key file in container

- Key file in secrets backend

- Kubernetes service account

-

Open your Astro project and add the following line to your

requirements.txtfile:apache-airflow-providers-googleThis installs the Google Cloud provider package, which makes the Google Cloud connection type available in Airflow.

-

Run

astro dev restartto restart your local Airflow environment and apply your changes inrequirements.txt. -

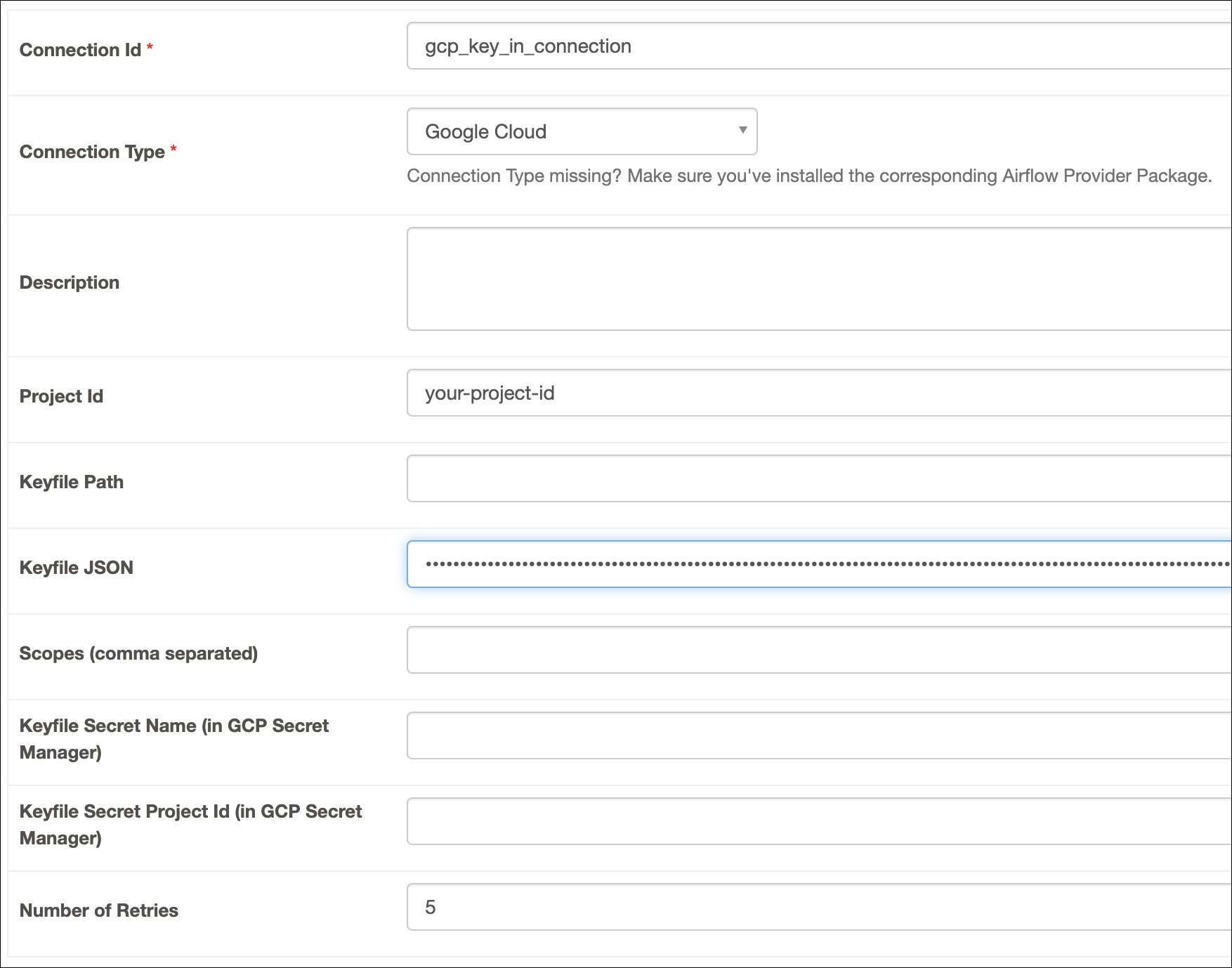

In the Airflow UI for your local Airflow environment, go to Admin > Connections. Click + to add a new connection, then choose the Google Cloud connection type.

-

Fill out the following connection fields using the information you retrieved from Get connection details:

- Connection Id: Enter a name for the connection.

- Keyfile JSON: Enter the contents of the key file.

-

Click Test. After the connection test succeeds, click Save.

-

Open your Astro project and add the following line to your

requirements.txtfile:apache-airflow-providers-googleThis installs the Google Cloud provider package, which makes the Google Cloud connection type available in Airflow.

-

Add the key file to your

includefolder. This will make it available to Airflow at/usr/local/airflow/include/<your-key-file>.json. -

Restart or start your local Airflow using

astro dev restartto apply your changes inrequirements.txt. -

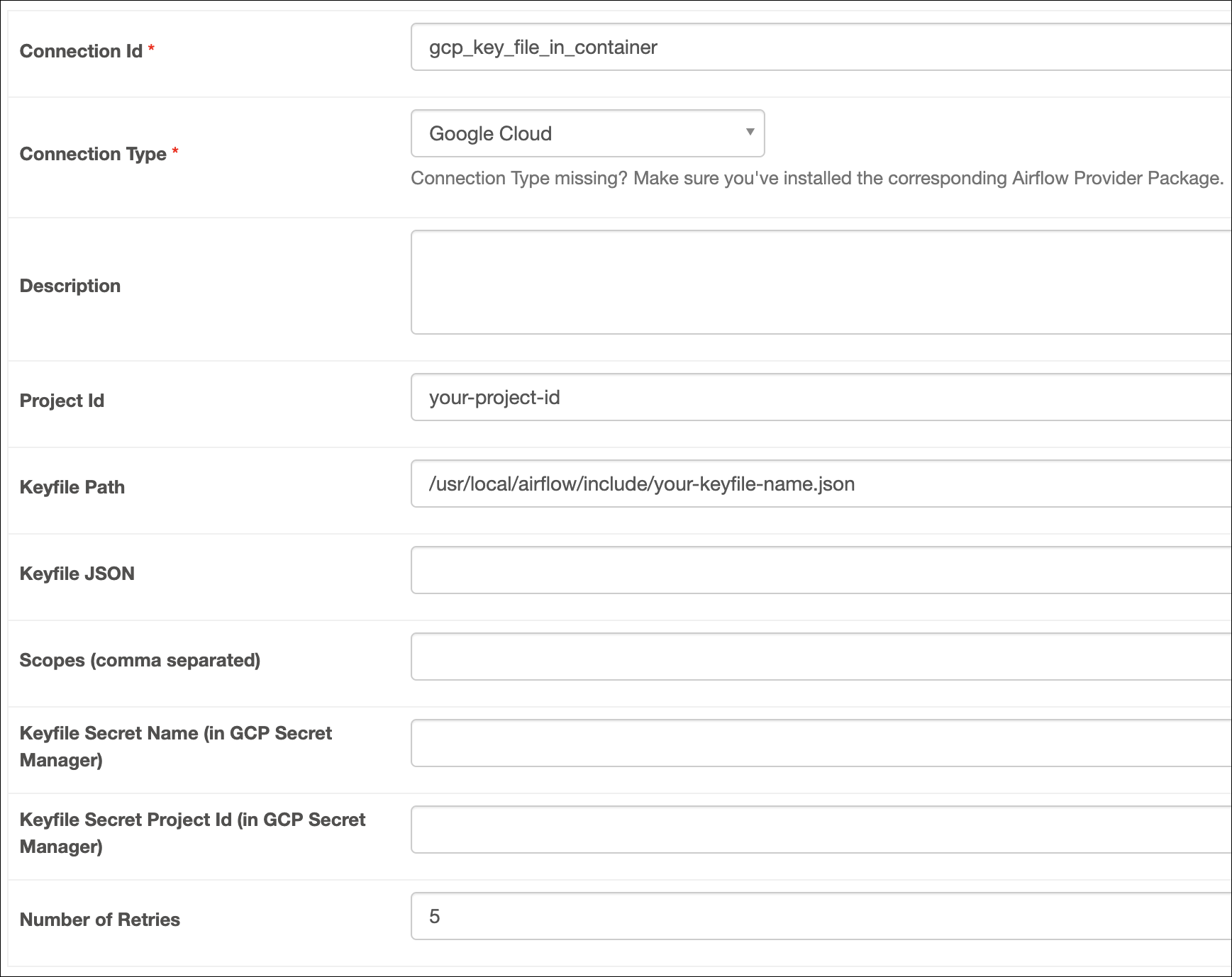

In the Airflow UI for your local Airflow environment, go to Admin > Connections. Click + to add a new connection, then choose the Google Cloud connection type.

-

Fill out the following connection fields using the information you retrieved from Get connection details:

- Connection Id: Enter a name for the connection.

- Keyfile Path: Enter the path of your key file.

-

Click Test connection. After the connection test succeeds, click Save.

-

Open your Astro project and add the following line to your

requirements.txtfile:apache-airflow-providers-googleThis will install the Google Cloud provider package, which makes the Google Cloud connection type available in Airflow.

-

Run

astro dev restartto restart your local Airflow environment and apply your changes inrequirements.txt. -

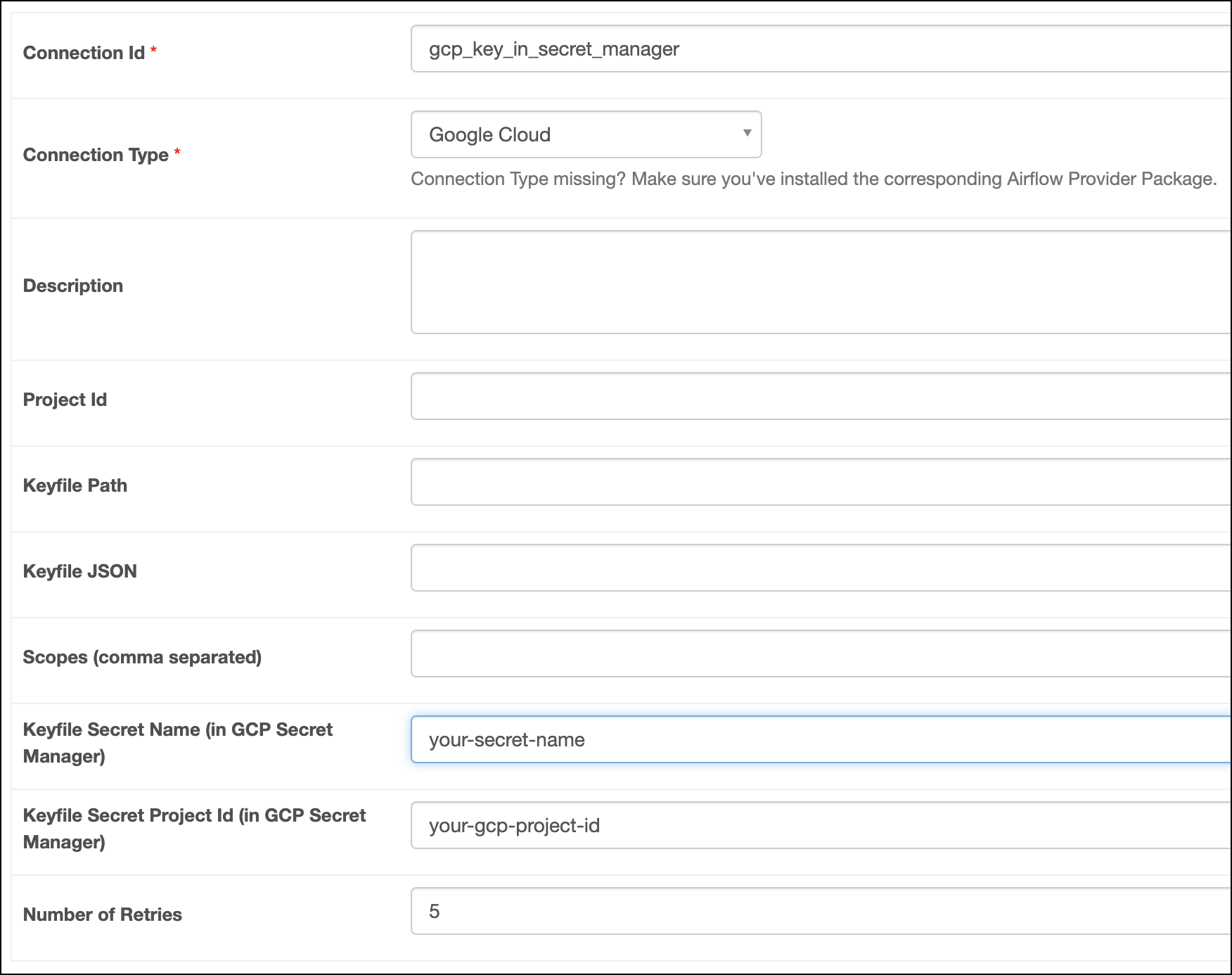

In the Airflow UI for your local Airflow environment, go to Admin > Connections. Click + to add a new connection, then choose the Google Cloud connection type.

-

Fill out the following connection fields using the information you retrieved from Get connection details:

- Connection Id: Enter a name for the connection.

- Keyfile Secret Project Id: Enter the ID of the Google Cloud project.

- Keyfile Secret Name: Enter the ID of your secret name.

-

Click Test connection. After the connection test succeeds, click Save.

-

Open your Airflow project and add the following line to your

requirements.txtfile:apache-airflow-providers-googleThis will install the Google Cloud provider package, which makes the Google Cloud connection type available in Airflow.

-

Run

astro dev restartto restart your local Airflow environment and apply your changes inrequirements.txt. -

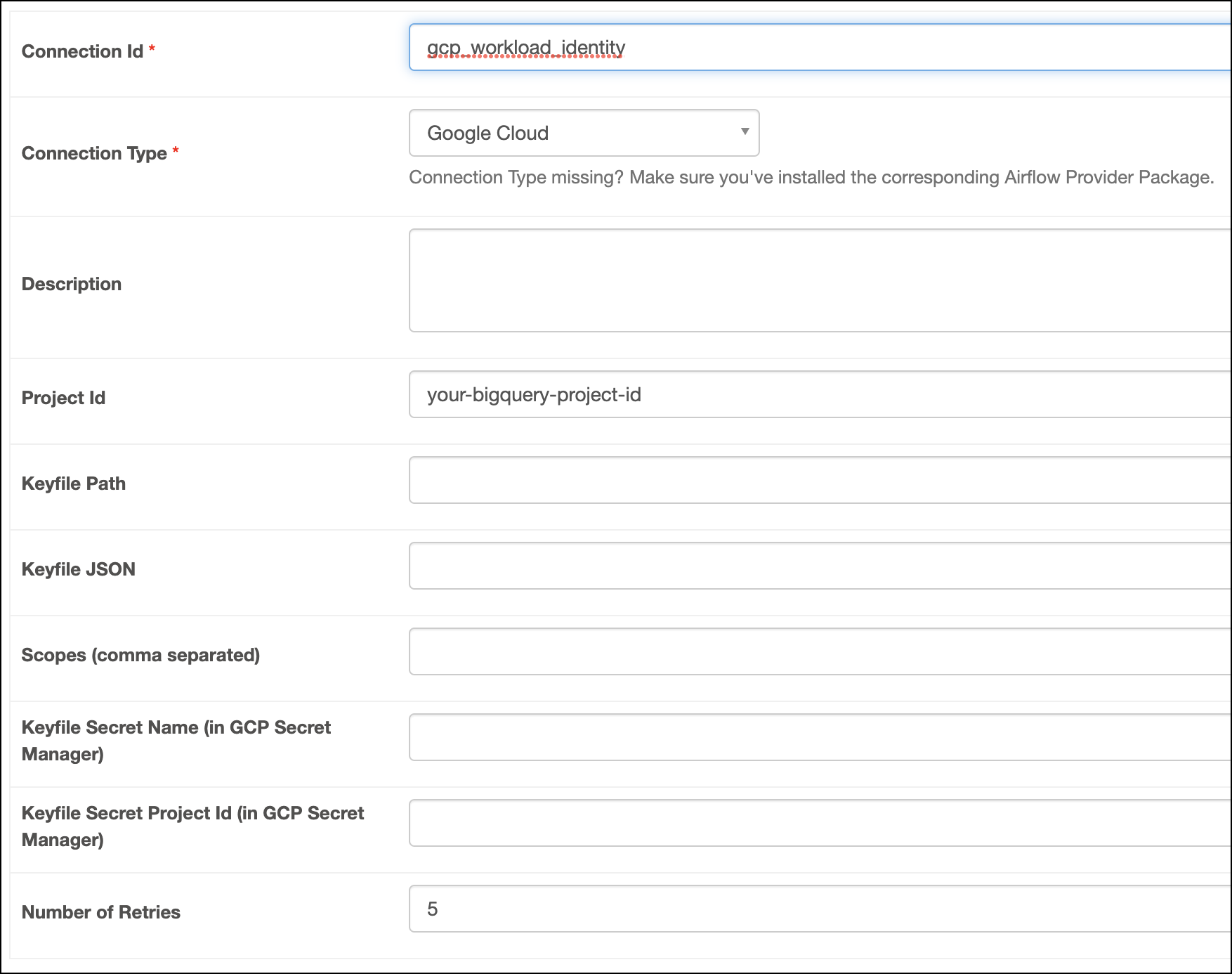

In your Airflow UI, go to Admin > Connections. Click the + sign to add a new connection, select the connection type as Google Cloud.

-

Fill out the following connection fields using the information you retrieved from Get connection details:

- Connection Id: Enter a name for the connection.

- Project Id: Enter the ID of the Google Cloud project.

-

Click Test connection. After the connection test succeeds, click Save.

How it works

Airflow uses the python-bigquery library to connect to GCP BigQuery through the BigQueryHook. If you don't define specific key credentials in the connection, Google defaults to using Application Default Credentials (ADC). This means when you use Workload Identity to connect to BigQuery, Airflow relies on ADC to authenticate.