Create an Azure Data Factory connection in Airflow

Azure Data Factory (ADF) is a cloud-based data integration and transformation service used to build data pipelines. Integrating ADF with Airflow allows you to run ADF pipelines and check their status from an Airflow DAG.

This guide provides the basic setup for creating an ADF connection. For a complete integration tutorial, see Run Azure Data Factory pipelines in Airflow.

Prerequisites

- The Astro CLI

- A locally running Astro project

- Permissions to access your data factory

- A Microsoft Entra ID application

Get connection details

A connection from Airflow to Azure Data Factory requires the following information:

- Subscription ID

- Data factory name

- Resource group name

- Application Client ID

- Tenant ID

- Client secret

Complete the following steps to retrieve all of these values:

- In the Azure portal, open your data factory service and select the subscription that contains your data factory.

- Copy the Name of your data factory and the Resource group.

- Click the subscription for your data factory, then copy the Subscription ID from the subscription window.

- Open your Microsoft Entra ID application. Then, from the Overview tab, copy the Application (client) ID and Directory (tenant) ID.

- Create a new client secret for your application in Certificates & secrets. Copy the Value of the client secret that appears, not the Secret ID. Azure shows the Value only once, right after you create the secret.

- Assign the Data Factory Contributor role to your app, for example from the data factory's Access control (IAM) page, so that Airflow can access the data factory.
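Copy-paste mistakes in these values are easy to make and only surface later as a failed connection test. The following is a minimal sketch, assuming you collect the values into a plain dictionary; the helper names is_guid and check_adf_details and the dictionary keys are hypothetical. It relies on the fact that subscription, client, and tenant IDs are GUIDs, and it uses a heuristic to flag the common mistake of copying the secret's Secret ID (a GUID) instead of its Value.

```python
import uuid


def is_guid(value: str) -> bool:
    """Return True if value is a GUID in the hyphenated form the Azure portal shows."""
    text = value.strip().lower()
    try:
        parsed = uuid.UUID(text)
    except ValueError:
        return False
    # uuid.UUID also accepts braces, "urn:uuid:" and unhyphenated hex, so compare
    # with the canonical string to accept only the 8-4-4-4-12 form.
    return str(parsed) == text


def check_adf_details(details: dict) -> list:
    """Return problems found in the collected values (hypothetical keys); empty if none."""
    problems = []
    # Subscription, client, and tenant IDs are all GUIDs.
    for key in ("subscription_id", "client_id", "tenant_id"):
        if not is_guid(details.get(key, "")):
            problems.append(f"{key} does not look like a GUID")
    # The remaining values only need to be present.
    for key in ("resource_group_name", "factory_name", "client_secret"):
        if not details.get(key, "").strip():
            problems.append(f"{key} is empty")
    # Heuristic: a secret's Secret ID is a GUID, while its Value usually is not.
    if is_guid(details.get("client_secret", "")):
        problems.append(
            "client_secret is a GUID; you may have copied the Secret ID instead of the Value"
        )
    return problems
```

An empty list means the values are at least well formed; it does not prove that the credentials or role assignment work.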

Create your connection

-

Open your Astro project and add the following line to your

requirements.txtfile:apache-airflow-providers-microsoft-azureThis will install the Azure provider package, which makes the Azure Data Factory connection type available in Airflow.

-

Run

astro dev restartto restart your local Airflow environment and apply your changes inrequirements.txt. -

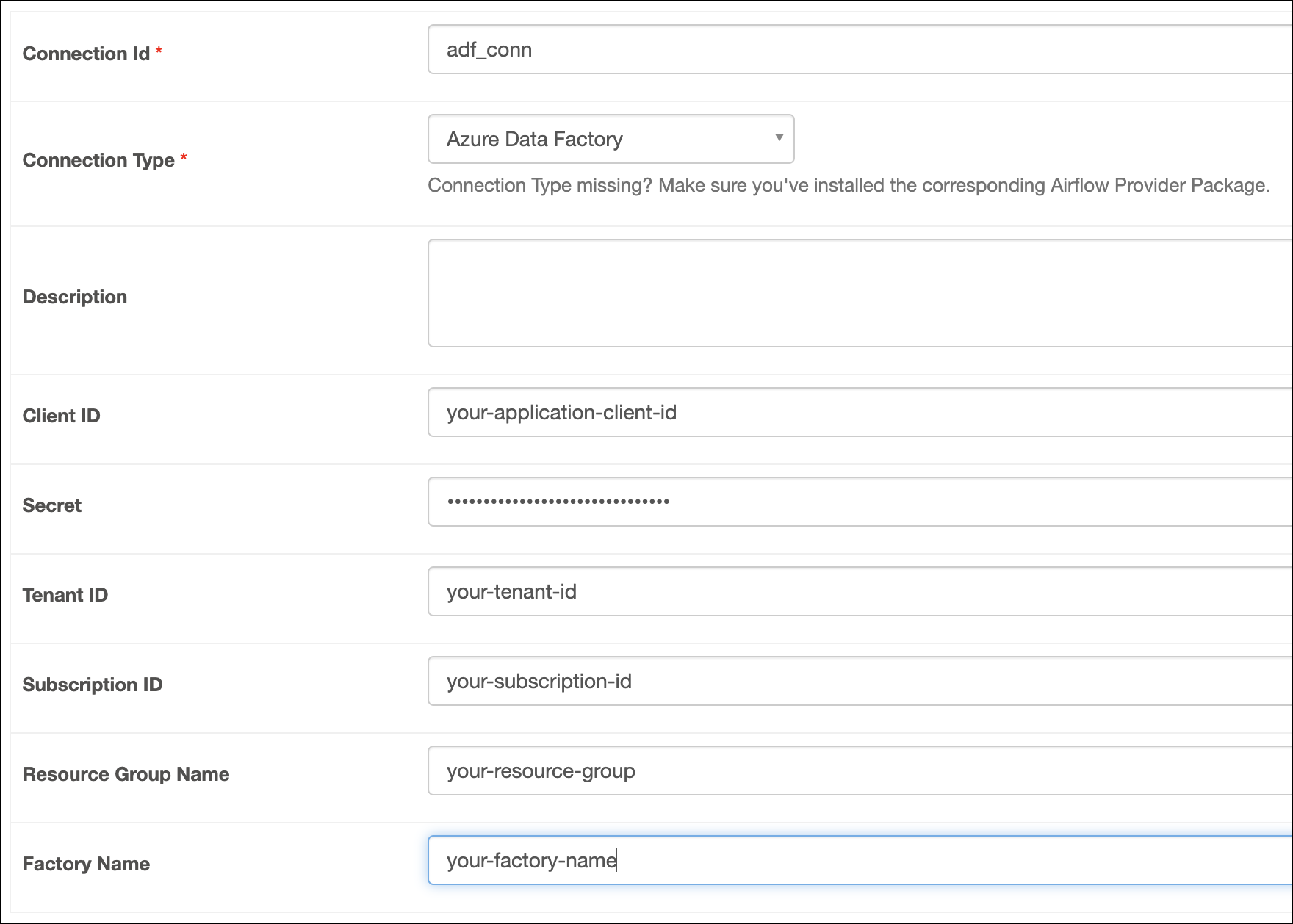

In the Airflow UI for your local Airflow environment, go to Admin > Connections. Click + to add a new connection, then choose Azure Data Factory as the connection type.

- Fill out the following connection fields using the information you retrieved in Get connection details:

  - Connection Id: Enter a name for the connection.

  - Client ID: Enter the Application (client) ID.

  - Secret: Enter the client secret Value.

  - Tenant ID: Enter the Directory (tenant) ID.

  - Subscription ID: Enter the Subscription ID.

  - Resource Group Name: Enter your data factory Resource group.

  - Factory Name: Enter your data factory Name.

- Click Test. After the connection test succeeds, click Save.
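Instead of using the UI, you can also define the connection in an environment variable, because Airflow reads connections from variables named AIRFLOW_CONN_<CONN_ID>, and Airflow 2.3 and later accept a JSON value. The sketch below builds that JSON value, assuming the client ID maps to the connection's login, the secret to its password, and the remaining details to the extra keys tenantId, subscriptionId, resource_group_name, and factory_name. These key names are an assumption based on recent versions of the Azure provider (older versions used prefixed keys such as extra__azure_data_factory__tenantId), so check them against the provider version you install. The function name adf_connection_json and the conn ID adf are hypothetical.

```python
import json
from typing import Optional


def adf_connection_json(
    client_id: str,
    client_secret: str,
    tenant_id: str,
    subscription_id: str,
    resource_group_name: Optional[str] = None,
    factory_name: Optional[str] = None,
) -> str:
    """Build a JSON value for an AIRFLOW_CONN_<CONN_ID> environment variable."""
    # Assumed extra keys; verify them against your provider version.
    extra = {"tenantId": tenant_id, "subscriptionId": subscription_id}
    # Leave these out to reuse one connection for several factories or resource groups.
    if resource_group_name:
        extra["resource_group_name"] = resource_group_name
    if factory_name:
        extra["factory_name"] = factory_name
    return json.dumps(
        {
            "conn_type": "azure_data_factory",
            "login": client_id,         # Application (client) ID
            "password": client_secret,  # client secret Value
            "extra": extra,
        }
    )


if __name__ == "__main__":
    # Placeholder values; replace them with the details you collected.
    value = adf_connection_json(
        client_id="<client-id>",
        client_secret="<client-secret-value>",
        tenant_id="<tenant-id>",
        subscription_id="<subscription-id>",
        resource_group_name="my-resource-group",
        factory_name="my-factory",
    )
    print(f"AIRFLOW_CONN_ADF='{value}'")
```

The printed line can go in the .env file of your Astro project, which the Astro CLI loads into your local Airflow environment. Connections defined this way do not appear in the Admin > Connections list.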

To use the same connection for multiple data factories or multiple resource groups, leave the Resource Group Name and Factory Name fields empty in the connection configuration. Instead, pass these values in the default_args of a DAG or as parameters to the AzureDataFactoryRunPipelineOperator. For example:

"azure_data_factory_conn_id": "adf",

"factory_name": "my-factory",

"resource_group_name": "my-resource-group",

How it works

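The AzureDataFactoryHook uses the azure-mgmt-datafactory library from the Azure SDK for Python to connect to Azure Data Factory. The Azure Data Factory operators and sensors in the Azure provider use this hook to run pipelines and check their status.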
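To see where each connection field ends up, the sketch below builds, but does not send, the two HTTP requests involved in starting a pipeline run: a client-credentials token request to Microsoft Entra ID (tenant ID, client ID, and secret) and the Data Factory REST API Pipelines - Create Run call (subscription ID, resource group, factory name). The SDK and the hook handle all of this for you; the function names, the API_VERSION constant of 2018-06-01, and the pipeline name are illustrative assumptions rather than what the hook literally does.

```python
from urllib.parse import quote, urlencode

ARM = "https://management.azure.com"
API_VERSION = "2018-06-01"  # assumed Data Factory REST API version


def token_request(tenant_id: str, client_id: str, client_secret: str) -> tuple:
    """Return (url, form_body) for an OAuth 2.0 client-credentials token request."""
    url = f"https://login.microsoftonline.com/{quote(tenant_id)}/oauth2/v2.0/token"
    body = urlencode(
        {
            "grant_type": "client_credentials",
            "client_id": client_id,
            "client_secret": client_secret,
            # Request a token for Azure Resource Manager.
            "scope": f"{ARM}/.default",
        }
    )
    return url, body


def create_run_url(
    subscription_id: str, resource_group_name: str, factory_name: str, pipeline_name: str
) -> str:
    """Return the URL of a Pipelines - Create Run request for one pipeline."""
    path = (
        f"/subscriptions/{quote(subscription_id)}"
        f"/resourceGroups/{quote(resource_group_name)}"
        f"/providers/Microsoft.DataFactory/factories/{quote(factory_name)}"
        f"/pipelines/{quote(pipeline_name)}/createRun"
    )
    return f"{ARM}{path}?{urlencode({'api-version': API_VERSION})}"
```

This is why the connection needs both sets of details: the tenant, client ID, and secret authenticate the app, while the subscription, resource group, and factory name identify which data factory to call.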