View metrics for Astro Deployments

The Astro UI exposes a suite of observability metrics that show real-time data related to the performance and health of your Deployments. These metrics are a useful reference as you troubleshoot issues and can inform how you allocate resources. They can also help you estimate the cost of your Deployments. This document explains each available metric and where to find them.

To view information about individual DAGs, see View DAG runs. To track Deployment health and specific incidents, see Deployment health and incidents.

Deployment analytics

The Analytics page contains a suite of metrics for a given Deployment. This page includes metrics that give you insight into the performance of both your data pipelines and infrastructure. Because metrics are collected in real time, you can use this page to detect irregularities in your pipelines or infrastructure as they happen.

To view metrics for a Deployment, open the Deployment in the Astro UI, click Analytics. The following topics contain information about each available metric.

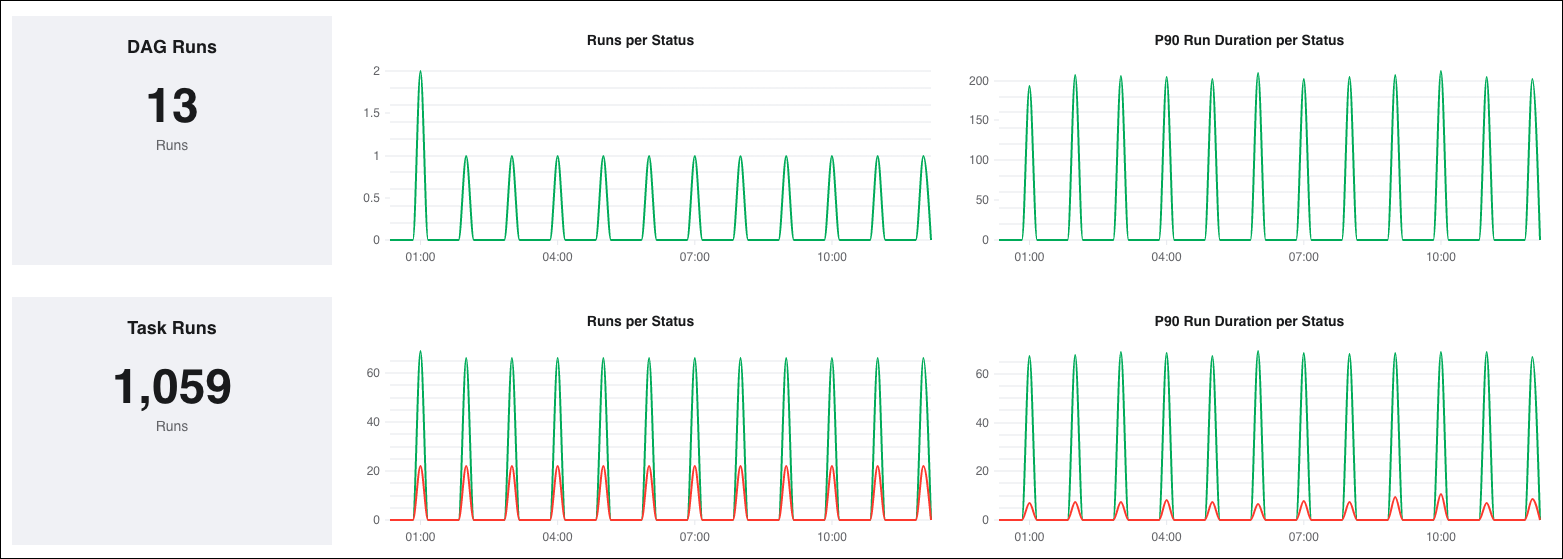

DAG and task runs

These metrics contain information about your Deployment's DAG runs and task runs over a given period of time.

Available metrics

-

DAG/ Task Runs: This metric graphs the total number of DAG/ task runs.

-

Runs per Status: This metric graphs the number of failed and successful DAG/ task runs, plotted based on the DAG/ task run start time. Use this metric to see exactly when recent DAG/ task runs succeeded or failed.

warningThe DAG runs metric does not record DAG run timeouts as failed runs. To see timed out DAG runs, you must go into the Airflow UI to check on the statuses of each DAG run there.

-

P90 Run Duration per Status: This metric graphs the 90th percentile of execution times for DAG/ task runs, plotted based on the DAG/ task run start time. In the example above, the P90 Run Duration per Status for successful DAG/ task runs at 5:00 was 34 seconds, which means that 90% of those DAG/ task runs finished in 34 seconds or less.

This metric can both help you understand how your pipelines are performing overall, as well as identify DAG/ task runs that didn't result in a failure but still took longer to run than expected.

-

Ephemeral storage usage (Kubernetes Executor/KubernetesPodOperator): View how your Kubernetes tasks use your available ephemeral storage as a metric of the percentage used of total ephemeral storage configured. Click on Dynamic y-axis scaling to adjust the graph's y-axis to better fit your data or zoom in to view details.

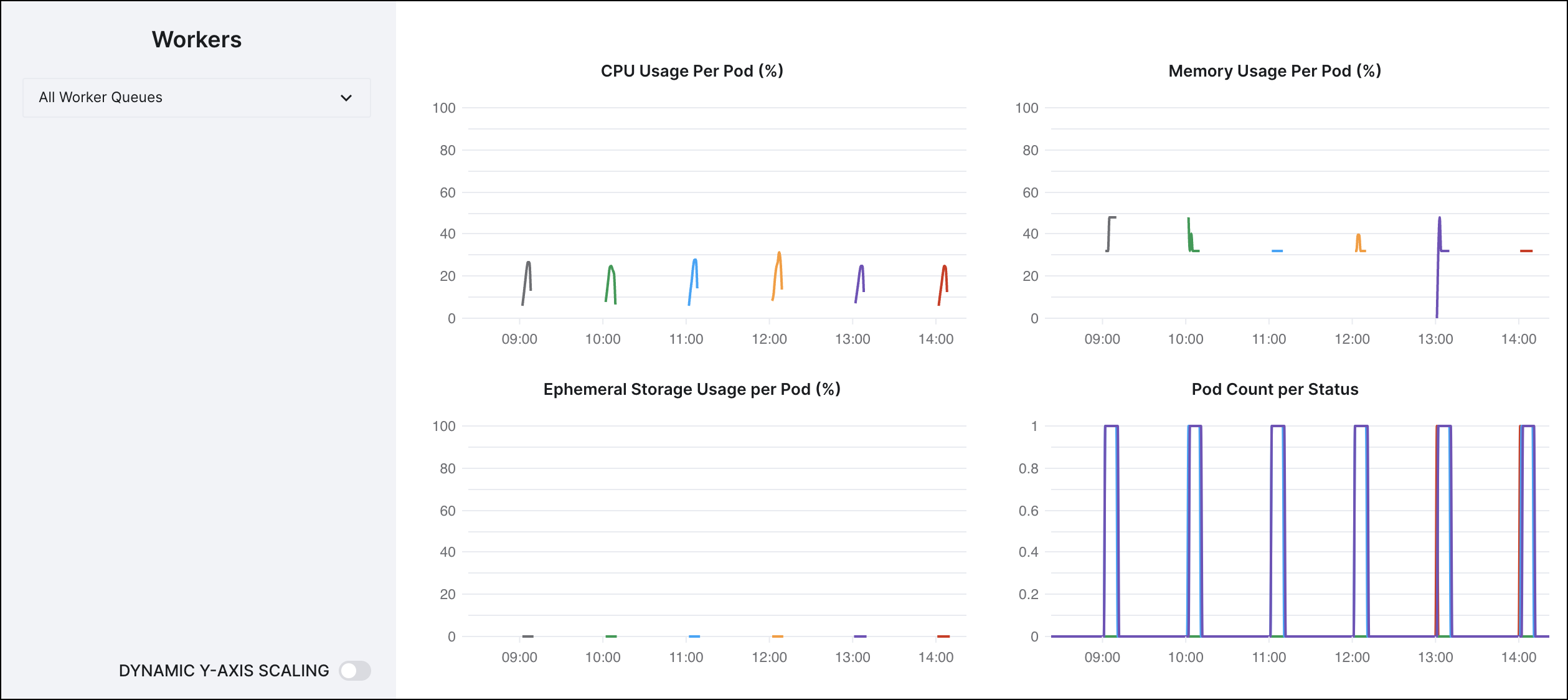

Airflow workers

These metrics contain infrastructure use information about your workers. Unique worker instances appear on these charts as different colored lines.

Hover over the graph to view a graph legend. If a given worker queue spins a worker down and back up again within a given interval, the newly spun up worker appears as a new color on the graph.

Available metrics

-

CPU Usage Per Pod (%): This metric graphs the peak CPU usage for all workers as a percentage of your maximum CPU capacity. Different worker Pods appear as differently colored lines on this chart. Hover over a given interval to view the specific number of CPUs being used.

-

Memory Usage Per Pod (MB): This metric graphs the peak memory usage for all workers as a percentage of your maximum memory capacity. Different worker Pods will appear as differently colored lines on this chart. This metric should be at or below 50% of your total allowed memory at any given time.

infoThe number of workers per Deployment autoscales based on a combination of worker concurrency and the number of

runningandqueuedtasks. This means that the total available CPU and memory for a single Deployment may change at any time. -

Network Usage Per Pod (MB): This metric graphs each worker/ scheduler Pod's peak network usage over time. Sudden, irregular spikes in this metric should be investigated as a possible error in your project code.

-

Pod Count per Status: This metric graphs the number of worker and scheduler Pods in a given Kubernetes container state. Because Astro operates on a one-container-per-pod model, the state of the container state is also the Pod state. Use this metric to understand how many workers and schedulers are currently running in your Deployment. This can help you determine the values for Worker Count (Min-Max) that best fit your resource use.

Maximum Worker Count applies only to workers in the

Runningstate. This means that the number of worker Pods in Pod Count per Status might be greater than Maximum Worker Count at times if Astro scales down these workers at the same time that it creates new workers. For example, let's say that a Deployment has a Maximum Worker Count of 20. If you have five workers running tasks for an hour and you deploy code that requires 20 workers, Astro will trigger a scale-down event for the existing five workers and create 20 new workers to run tasks according to your new code. This means that your Deployment temporarily runs 25 workers.If a pod is stuck in a

Waitingstate, it can indicate that your Deployment did not successfully pull and run your Runtime image. For more information about container states, read the Kubernetes documentation. -

Ephemeral storage usage (Celery workers): View how your Celery worker uses your available ephemeral storage as a metric of the percentage used of total ephemeral storage configured. Click on Dynamic y-axis scaling to adjust the graph's y-axis to better fit your data or zoom in to view details.

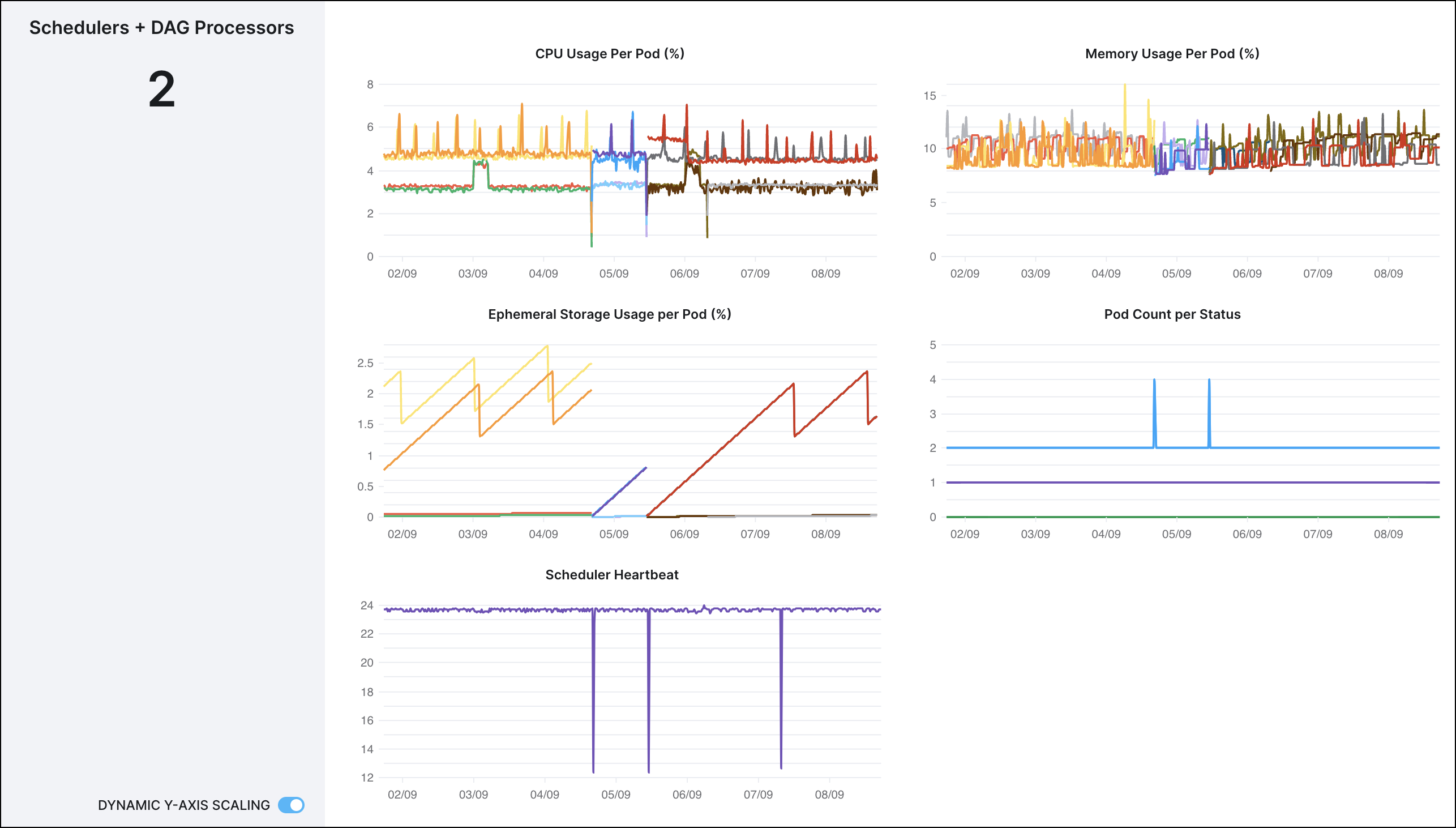

Airflow scheduler and DAG processor

These metrics contain infrastructure use information about your scheduler resources. Unique scheduler instances appear on these charts as different colored lines.

Hover over the graph to view a graph legend. If a given Deployment spins a scheduler down and back up again within a given interval, the newly spun up scheduler appears as a new color on the graph. If your Deployment includes a separate DAG processor, that resource also has its own line and appears in the legend.

-

CPU Usage Per Pod (%): This metric graphs the peak CPU usage for all schedulers as a percentage of your maximum CPU capacity. Different scheduler and DAG processor Pods appear as differently colored lines on this chart. Hover over a given interval to view the specific number of CPUs being used.

-

Memory Usage Per Pod (MB): This metric graphs the peak memory usage for all schedulers as a percentage of your maximum memory capacity. Different scheduler Pods appear as differently colored lines on this chart. This metric should be at or below 50% of your total allowed memory at any given time. For scheduler metrics, the maximum allowable memory for each scheduler Pod appears as a dotted red line.

-

Network Usage Per Pod (MB): This metric graphs each scheduler Pod's peak network usage over time. Sudden, irregular spikes in this metric should be investigated as a possible error in your project code.

-

Pod Count per Status: This metric graphs the number of scheduler Pods in a given Kubernetes container state. Because Astro operates on a one-container-per-pod model, the state of the container is also the Pod state. Use this metric to understand how many schedulers and DAG processors are currently running in your Deployment.

If a Pod is stuck in a

Waitingstate, it can indicate that your Deployment did not successfully pull and run your Runtime image. For more information about container states, read the Kubernetes documentation. -

Ephemeral storage usage per Pod: View how your schedulers and DAG processors uses the available ephemeral storage as a metric of the percentage used of total ephemeral storage configured. Click on Dynamic y-axis scaling to adjust the graph's y-axis to better fit your data or zoom in to view details.

-

Scheduler Heartbeat: A scheduler emits a heartbeat at a regular rate to signal that it's healthy to other Airflow components. This metric graphs a scheduler's average heartbeats per minute over a given time.

On average, a scheduler should emit ~11-12 heartbeats per minute. A scheduler is considered "unhealthy" if it has not emitted a heartbeat for over 1 minute. The lack of a scheduler heartbeat is expected during a code push, but erratic restarts or an "Unhealthy" state that persists for a significant amount of time is worth investigating further.

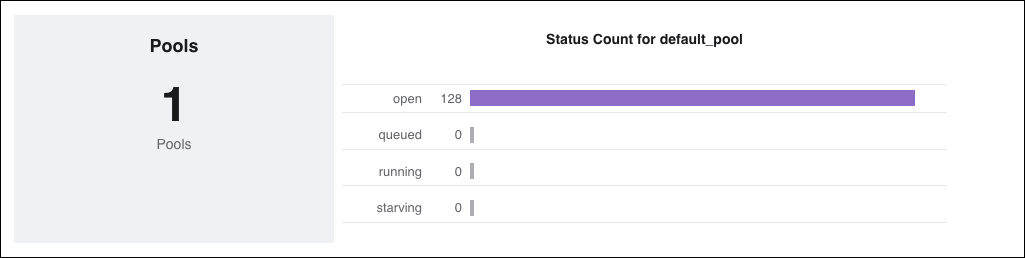

Pools

These metrics contain information about your Deployment's configured Airflow pools. They can give you insight into how your DAGs are handling concurrency.

Available metrics

-

Status Count for

<pool-name>: This metric graphs both the number of open slots in your pool and the number of tasks in each pool state:- Open: The number of available slots in the pool

- Queued: The number of task instances which are occupying a pool slot and waiting to be picked up by a worker

- Running: The number of tasks instances which are occupying a pool slot and running

- Starving: The number of tasks that can't be scheduled when there are 0 available pool slots

A large number of starving tasks could indicate that you should reconfigure your pools to run more tasks in parallel.

Deployment overview

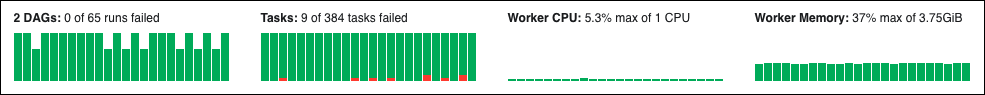

Each Deployment includes four high-level performance charts about the default worker queue which you can view from both the Deployments menu and a Deployment's Overview page. They include:

- DAG runs

- Task Instances

- Worker CPU

- Worker Memory

The data in these four charts is recorded hourly and is displayed in both UTC and your local browser timezone. Each bar across all graphs covers a complete hour while the entire time window for a single graph is 24 hours. For example, a single bar might represent 16:00 to 17:00 while the entire time window of the graph might represent Nov 1 16:00 to Nov 2 16:00.

The data for the most recent hour is for the hour to date. For example, if you are looking at this page at 16:30, then the bar for the 16:00-17:00 hour interval would show data for 16:00-16:30.

These charts serve as high-level reports for your default worker queue that you can investigate further.

The following sections describe each of the 4 available charts.

Total DAG runs

The DAG Runs metric records successful and failed DAG runs over hour-long intervals. A DAG run is defined as an instantiation of a DAG at a specific point in time.

You can hover over each bar to see the corresponding hour interval displayed in both UTC and your local timezone. Below that, you can see the number of successful DAG runs and the number of failed DAG runs. If a bar is partially or fully red, it means that one or more DAG runs failed within that hour interval.

The bolded value above the graph denotes the total number of DAG runs that have been executed in the last 24 hours.

Task instances

The Tasks chart records successful and failed task instances over hour-long intervals. A task instance is defined as an instantiation of a task at a specific point in time.

You can hover over each bar to see the corresponding hour interval displayed in both UTC and your local timezone. Below that, you can see the number of successful and failed task instances. If a bar is partially or fully red, it means that one or more task instances failed within that hour interval.

The bolded value above the graph denotes the total number of tasks that have run in the last 24 hours.

Resource usage

The Worker CPU and Worker Memory charts in the Astro UI provide visibility into the resources being consumed by the workers in your Deployment as measured by CPU and memory consumption.

Worker CPU records the peak CPU usage, while Worker Memory records the peak memory usage by worker nodes over hour-long intervals. The bolded values above each graph show the maximum CPU/ memory usage by a single worker at any point in time over the last 24 hours.

A known issue with Celery might cause worker memory allocation to increase without a corresponding increase in task count or DAG memory use. To correct this issue, redeploy your code without making any changes to restart the Celery worker and reset memory requests. See Deploy code to Astro. This issue should not affect your tasks or cause OutOfMemory (OOM) errors. If you experience complications, contact Astronomer Support.