Set up Astro alerts

Astro alerts provide an additional level of observability to Airflow's notification systems. You can configure an alert to notify you in Slack, PagerDuty, or through email when a DAG completes, if you have a DAG run failure, or if a task duration exceeds a specified time. You can also define whether alerts apply to a specific Deployment or across an entire Workspace or Organization.

Unlike Airflow callbacks and SLAs, Astro alerts require no changes to DAG code. Follow this guide to set up your Slack, PagerDuty, or email to receive alerts from Astro and then configure your Deployment to send alerts.

To configure Airflow notifications, see Airflow email notifications and Manage Airflow DAG notifications.

Alert types

Each Astro alert has a notification channel and a trigger type. The notification channel determines the format and destination of an alert, and the trigger type defines what causes the alert to trigger.

DAG and task alerts

You can trigger an alert to a notification channel using one of the following trigger types:

- DAG failure: The alert triggers whenever the specified DAG fails.

- DAG success: The alert triggers whenever the specified DAG completes.

- Task duration: The alert triggers when a specified task takes longer than expected to complete.

- Timeliness: The alert triggers when a given DAG does not have a successful DAG run within a defined time window.

You can only set a task duration trigger for an individual task. Alerting on task group duration is not supported.

Timeliness alerts only support Standard Time, as opposed to Daylight Saving Time. If you want Local Time support (for example, for time zone aware DAGs), you must adjust the alert's UTC time whenever the time changes from Standard Time to Daylight Saving Time or from Daylight Saving Time to Standard Time.
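To see why the UTC time needs adjusting, the following minimal sketch converts a fixed local verification time to UTC on two different dates. The helper name `verification_time_utc` and the choice of the America/New_York time zone are assumptions for illustration only; they are not part of Astro.

```python
from datetime import date, datetime, time, timezone
from zoneinfo import ZoneInfo


def verification_time_utc(day: date, local_time: time, tz_name: str) -> time:
    """Return the UTC wall-clock time that matches local_time in tz_name on the given day."""
    local_dt = datetime.combine(day, local_time, tzinfo=ZoneInfo(tz_name))
    return local_dt.astimezone(timezone.utc).time()


# The same 3:00 PM local time maps to a different UTC time in winter and summer.
winter = verification_time_utc(date(2024, 1, 15), time(15, 0), "America/New_York")
summer = verification_time_utc(date(2024, 7, 15), time(15, 0), "America/New_York")
print(winter, summer)  # 20:00:00 19:00:00
```

In this example, an alert meant to verify at 3:00 PM New York time would need a UTC time of 20:00 during Standard Time and 19:00 during Daylight Saving Time.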

Deployment health alerts

Deployment health alerts are customizable Astro Alerts that notify you about Deployment health incidents and suggest specific remediation actions. You can use these alerts to proactively monitor when Deployment health issues arise. For example, you can create an alert for when the Airflow metadata database storage is unusually high. All available Deployment health alerts are enabled by default for new Deployments and can be individually created, deleted, and edited in the Alerts tab of existing Deployments. If you do not want Deployment health alerts for a new Deployment, disable Deployment Health Alerts in the Advanced section.

Astro enables the following Deployment health alerts by default for new Deployments. You can individually create and customize these alerts for any Deployment:

- Airflow Database Storage Unusually High: The alert triggers when the metadata database has tables that are larger than 50GiB (Info) or 75GiB (Warning).

- Deprecated Runtime Version: The alert triggers when your Deployment is using a deprecated Astro Runtime version.

- Job Scheduling Disabled: The alert triggers when the Airflow scheduler is configured to prevent automatic scheduling of new tasks using DAG schedules.

- Worker Queue at Capacity: The alert triggers when at least one worker queue in this Deployment is running the maximum number of tasks and workers.

Notification channels

You can send Astro alerts, including Deployment health alerts, to the following notification channels:

- Slack

- PagerDuty

- Email

- (Private Preview) DAG trigger

This feature is in Private Preview. Please reach out to your customer success manager to enable this feature.

The DAG Trigger notification channel works differently from other notification channel types. Instead of sending a pre-formatted alert message, Astro makes a generic request through the Airflow REST API to trigger a DAG on Astro. You can configure the triggered DAG to complete any action, such as sending a message to your own incident management system or writing data about an incident to a table.

Deployment health alert notifications

For new Deployments, Astro creates the default Deployment health alerts with Email as the notification channel Type. By default, these alerts notify the Contact Emails of the Deployment, specified in the Advanced section of the Deployment creation page.

If Contact Emails is empty, Astro displays the fallback email(s) that receive alert notifications for the Deployment in the Advanced section of Deployment configuration. To change this notification channel, ensure the Contact Emails field of your Deployment is not blank by editing Deployment Details.

Scope

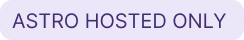

When you create an alert or notification channel, you can define whether it is available to a specific Deployment or available to an entire Workspace or Organization.

The type of scope you can use for your alerts or notification channels depends on your user permissions.

Alerts scope

The most limited scope available to any alert or notification channel is for a single Deployment. This means that other Deployments in the same Workspace or Organization can't automatically access these options for configuring new or existing alerts and notification channels. Use this for Deployment-specific alerts, like alerts on Deployment health, or Deployment-specific channels, like the email address of a Deployment owner. The following alert types must be defined at the Deployment level:

- DAG alerts (DAG Failure, DAG Success, DAG Timeliness)

- Deployment health alerts

- Task alerts (Task Duration, Task Failure)

If you have Workspace Author permissions or higher, you can create alerts for specific Deployments, but you must create them through the Alerts page in your Deployment settings.

Notification channels scope

When you create a notification channel, you can define whether it is available to a specific Deployment or available to an entire Workspace or Organization. The type of scope you can use for your notification channels depends on your user permissions.

You can view, create, and manage notification channels for your Workspace or Organization in the Notification channels page or when creating a Deployment alert.

Prerequisites

- An Astro project.

- An Astro Deployment. Your Deployment must run Astro Runtime 7.1.0 or later to configure Astro alerts, and it must also have OpenLineage enabled.

- A Slack workspace, PagerDuty service, or email address.

Astro alerts require OpenLineage. By default, every Astro Deployment has OpenLineage enabled. If you disabled OpenLineage in your Deployment, you need to re-enable it to use Astro alerts. See Disable OpenLineage to learn how to disable and re-enable OpenLineage.

Step 1: Configure your notification channel

- Slack

- PagerDuty

- Email

- DAG Trigger

Slack

To set up alerts in Slack, you need to create a Slack app in your Slack workspace. After you've created your app, you can generate a webhook URL in Slack where Astro sends alerts.

- Go to Slack API: Applications to create a new app in your organization's Slack workspace.

- Click From scratch when prompted to choose how you want to create your app.

- Enter a name for your app, like astro-alerts, choose the Slack workspace where you want Astro to send your alerts, and then click Create App.

  If you do not have permission to install apps into your Slack workspace, you can still create the app, but you will need to request that an administrator from your team completes the installation.

- Select Incoming webhooks.

- On the Incoming webhooks page, click the toggle to turn on Activate Incoming Webhooks. See Sending messages using Incoming Webhooks.

- In the Webhook URLs for your Workspace section, click Add new Webhook to Workspace.

  If you do not have permission to install apps in your Slack workspace, click Request to Add New Webhook to send a request to your organization administrator.

- Choose the channel where you want to send your Astro alerts and click Allow.

- After your webhook is created, copy the webhook URL from the new entry in the Webhook URLs for your Workspace table.

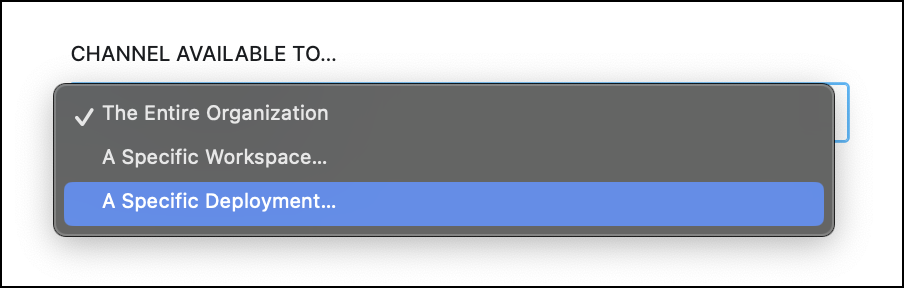
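Before you paste the Slack webhook URL into Astro, you might want to confirm that it posts to the expected channel. The following is a minimal sketch using only the Python standard library. It relies on Slack incoming webhooks accepting a JSON body with a `text` field; the helper name `build_slack_request` and the URL shown are placeholders, not values from Astro or your workspace.

```python
import json
import urllib.request


def build_slack_request(webhook_url: str, text: str) -> urllib.request.Request:
    """Build a POST request that sends a plain-text message to a Slack incoming webhook."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL: replace it with the webhook URL you copied from Slack.
request = build_slack_request(
    "https://hooks.slack.com/services/T000/B000/XXXX",
    "Test message before connecting Astro alerts",
)

# Uncomment to actually send the message:
# with urllib.request.urlopen(request, timeout=10) as response:
#     print(response.status)
```

Treat the webhook URL as a secret, because anyone who has it can post to the channel.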

PagerDuty

To set up an alert integration with PagerDuty, you need access to your organization's PagerDuty Service. PagerDuty uses the Events API v2 to create a new integration that connects your Service with Astro.

- Open your PagerDuty service and click the Integrations tab.

- Click Add an integration.

- Select Events API v2 as the Integration Type.

- On your Integrations page, open your new integration and enter an Integration Name.

- Copy the Integration Key for your new Astro alert integration.
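Astro sends the PagerDuty events for you once the channel is configured. If you want to check the Integration Key independently first, the following sketch shows what a trigger event for the PagerDuty Events API v2 might look like. The endpoint and field names follow the Events API v2; the helper name `build_trigger_event` and the sample values are assumptions for illustration.

```python
import json

# Events API v2 endpoint that receives trigger events.
PAGERDUTY_EVENTS_URL = "https://events.pagerduty.com/v2/enqueue"

ALLOWED_SEVERITIES = {"critical", "error", "warning", "info"}


def build_trigger_event(integration_key: str, summary: str, source: str,
                        severity: str = "error") -> dict:
    """Build the JSON body for an Events API v2 trigger event."""
    if severity not in ALLOWED_SEVERITIES:
        raise ValueError(f"Unsupported severity: {severity}")
    return {
        "routing_key": integration_key,  # The Integration Key copied from PagerDuty.
        "event_action": "trigger",
        "payload": {"summary": summary, "source": source, "severity": severity},
    }


# Placeholder key: replace it with your own Integration Key.
event = build_trigger_event("YOUR_INTEGRATION_KEY", "Test event for Astro alerts", "manual-test")
print(json.dumps(event, indent=2))
```

Sending this body as a POST request with a JSON content type to `PAGERDUTY_EVENTS_URL` should open an incident on the Service that owns the key.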

Email

No external configuration is required for the email integration. Astronomer recommends allowlisting astronomer.io with your email provider to ensure that no alerts go to your spam folder. Alerts are sent from no-reply@astronomer.io.

DAG Trigger

The DAG Trigger notification channel works differently from other notification channel types. Instead of sending a pre-formatted alert message, Astro makes a generic request through the DagRuns endpoint of the Airflow REST API to trigger any DAG in your Workspace. You can configure the triggered DAG to complete any action, such as sending a message to your own incident management system or writing data about an incident to a table.

The following parameters are used to pass metadata about the alert in the API call:

- conf: This parameter holds the alert payload:

  - alertId: A unique alert ID.

  - alertType: The type of alert triggered.

  - dagName: The name of the DAG that triggered the alert.

  - message: The detailed message with the cause of the alert.

- note: By default, this is "Triggering DAG on Airflow <url>".

The following is an example alert payload that would be passed through the API:

    {
      "dagName": "fail_dag",
      "alertType": "PIPELINE_FAILURE",
      "alertId": "d75e7517-88cc-4bab-b40f-660dd79df216",
      "message": "[Astro Alerts] Pipeline failure detected on DAG fail_dag. \\nStart time: 2023-11-17 17:32:54 UTC. \\nFailed at: 2023-11-17 17:40:10 UTC. \\nAlert notification time: 2023-11-17 17:40:10 UTC. \\nClick link to investigate in Astro UI: https://cloud.astronomer.io/clkya6zgv000401k8zafabcde/dags/clncyz42l6957401bvfuxn8zyxw/fail_dag/c6fbe201-a3f1-39ad-9c5c-817cbf99d123?utm_source=alert\"\\n"
    }

These parameters are accessible in the triggered DAG using DAG params.
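To make the shape of this API call more concrete, the following sketch builds a comparable request against the DAG run endpoint of the Airflow stable REST API (`POST /api/v1/dags/{dag_id}/dagRuns`), which accepts `conf` and `note` in its body. The exact request that Astro sends is not documented here, so the helper name `build_dag_trigger_request`, the base URL, and the token are all hypothetical placeholders.

```python
import json
import urllib.request


def build_dag_trigger_request(airflow_base_url: str, dag_id: str, api_token: str,
                              alert_payload: dict, note: str) -> urllib.request.Request:
    """Build a request that triggers a DAG run and passes the alert payload as conf."""
    url = f"{airflow_base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    body = json.dumps({"conf": alert_payload, "note": note}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical values for illustration only.
request = build_dag_trigger_request(
    "https://example-deployment.invalid/",
    "register_incident",
    "YOUR_DEPLOYMENT_API_TOKEN",
    {"dagName": "fail_dag", "alertType": "PIPELINE_FAILURE"},
    "Triggering DAG on Airflow <url>",
)
print(request.full_url)
```

Sending a request like this yourself can be a useful way to exercise the triggered DAG before you attach it to an alert.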

- Create a DAG that you want to run when the alert is triggered. For example, you can use the following DAG to run arbitrary Python code when the alert is triggered:

    import datetime
    from typing import Any

    from airflow.models.dag import DAG
    from airflow.operators.python import PythonOperator

    with DAG(
        dag_id="register_incident",
        start_date=datetime.datetime(2023, 1, 1),
        schedule=None,  # No schedule: this DAG runs only when the alert triggers it.
    ):

        def _register_incident(params: dict[str, Any]):
            # The alert payload is available through DAG params.
            failed_dag = params["dagName"]
            print(f"Register an incident in my system for DAG {failed_dag}.")

        PythonOperator(task_id="register_incident", python_callable=_register_incident)

- Deploy the DAG to any Deployment in the Workspace where you want to create the alert. The DAG that triggers the alert and the DAG that the alert runs can be in different Deployments, but they must be deployed in the same Workspace.

- Create a Deployment API token for the Deployment where you deployed the DAG that the alert will run. Copy the token to use in the next step.
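If you also run the triggered DAG manually, for example while testing it, the alert fields are not present in its params, and a direct lookup such as `params["dagName"]` raises a `KeyError`. The following sketch shows one way a task callable could read the payload defensively. The helper name `describe_alert` and the fallback strings are assumptions for illustration.

```python
from typing import Any, Mapping


def describe_alert(params: Mapping[str, Any]) -> str:
    """Summarize the alert payload, falling back to placeholders for missing fields."""
    dag_name = params.get("dagName", "unknown DAG")
    alert_type = params.get("alertType", "UNKNOWN_ALERT")
    alert_id = params.get("alertId", "no ID")
    return f"{alert_type} on DAG {dag_name} (alert {alert_id})"


# With a payload like the example shown earlier:
print(describe_alert({
    "dagName": "fail_dag",
    "alertType": "PIPELINE_FAILURE",
    "alertId": "d75e7517-88cc-4bab-b40f-660dd79df216",
}))

# With no alert payload, as in a manual test run:
print(describe_alert({}))
```

A function like this can be called from the task's `python_callable` with the same `params` argument used in the example DAG.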

Step 2: Add a notification channel

You can enable alerts and add notification channels in the Astro UI.

- In the Astro UI, click Alerting > Notification channels.

- Click Add Notification Channel.

- Enter a name for your notification channel.

- Choose the Channel Type.

- Add the notification channel information.

  - Slack: Paste the Webhook URL from your Slack workspace app. If you need to find a URL for an app you've already created, go to your Slack Apps page, select your app, and then choose the Incoming Webhooks page.

  - PagerDuty: Paste the Integration Key from your PagerDuty Integration and select the Severity of the alert.

  - Email: Enter the email addresses that should receive the alert.

  - DAG Trigger: Select the Deployment where your DAG is deployed, then select the DAG. Enter the Deployment API token that you created in Step 1.

- Choose the scope by defining whether the notification channel is available to a specific Deployment, a Workspace, or an entire Organization.

- Click Create notification channel.

Step 3: Create your alert in the Astro UI

- In the Astro UI, click Alerting > Alerts.

Deployment-specific alerts

If you do not have permissions to add a notification channel or alert at the Workspace or Organization level, you can add one in the Alerts form through the Deployment settings page.

- In the Astro UI, click Deployments then select your Deployment.

- Click the Alerts tab.

- Click Add Alert.

- Choose the Alert Type:

  - DAG failure: Send an alert if a DAG fails.

  - DAG success: Send an alert when a DAG completes.

  - Task duration: Enter the Duration for how long a task should take to run before you send an alert to your notification channels.

  - Timeliness: Select the Days of Week that the alert should observe, the Verification Time when it should look for a DAG success, and the Lookback Period for how long it should look back for a verification time. For example, if an alert has a Verification Time of 3:00 PM UTC and a Lookback Period of 60 minutes, it will trigger whenever the given DAG does not produce a successful DAG run from 2:00 to 3:00 PM UTC.

Warning: Timeliness alerts only support Standard Time, as opposed to Daylight Saving Time. If you want Local Time support (for example, for time zone aware DAGs), you must adjust the alert's UTC time whenever the time changes from Standard Time to Daylight Saving Time or from Daylight Saving Time to Standard Time.

- Choose the alert Severity, either Info, Warning, Critical, or Error.

- Define the conditions on which you want your alert to send a notification.

  - Define the Workspace and Deployment.

  - Select the Attribute, Operator, and DAGs to define when you want Astro to send an alert and which DAGs you want the alert to apply to.
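The Timeliness example in the alert types above (a Verification Time of 3:00 PM UTC with a 60 minute Lookback Period) can be sketched as a simple window check. This is only an illustration of the logic as described, not Astro's implementation. The function name `timeliness_alert_triggers`, the use of run completion times, and the inclusive window boundaries are all assumptions.

```python
from datetime import datetime, timedelta, timezone


def timeliness_alert_triggers(successful_run_times: list[datetime],
                              verification_time: datetime,
                              lookback: timedelta) -> bool:
    """Return True when no successful DAG run falls inside the lookback window."""
    window_start = verification_time - lookback
    return not any(
        window_start <= run_time <= verification_time
        for run_time in successful_run_times
    )


verification = datetime(2024, 1, 15, 15, 0, tzinfo=timezone.utc)  # 3:00 PM UTC
lookback = timedelta(minutes=60)

# A success at 2:30 PM UTC is inside the 2:00 to 3:00 PM window, so no alert.
on_time = timeliness_alert_triggers(
    [datetime(2024, 1, 15, 14, 30, tzinfo=timezone.utc)], verification, lookback
)

# A success at 1:45 PM UTC is outside the window, so the alert triggers.
late = timeliness_alert_triggers(
    [datetime(2024, 1, 15, 13, 45, tzinfo=timezone.utc)], verification, lookback
)
print(on_time, late)  # False True
```

Working through a few run times this way can help you pick a Lookback Period that matches how long the DAG normally takes to finish.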

Step 4: (Optional) Change an alert name

After you select a DAG that you want to apply an alert to, Astro automatically generates a name for your alert. However, you can choose to change the name of your alert.

- Expand the Change alert names... section.

- Edit the Alert Name.

- Click Create Alert to save your changes.

(Optional) Test your DAG failure alert

Astro alerts work whether your DAG run is manual or scheduled, so you can test your configured Astro alerts by failing your DAG manually.

-

In the Astro UI, click DAGs.

-

Choose the DAG that has your alert configured.

-

Trigger a DAG run.

-

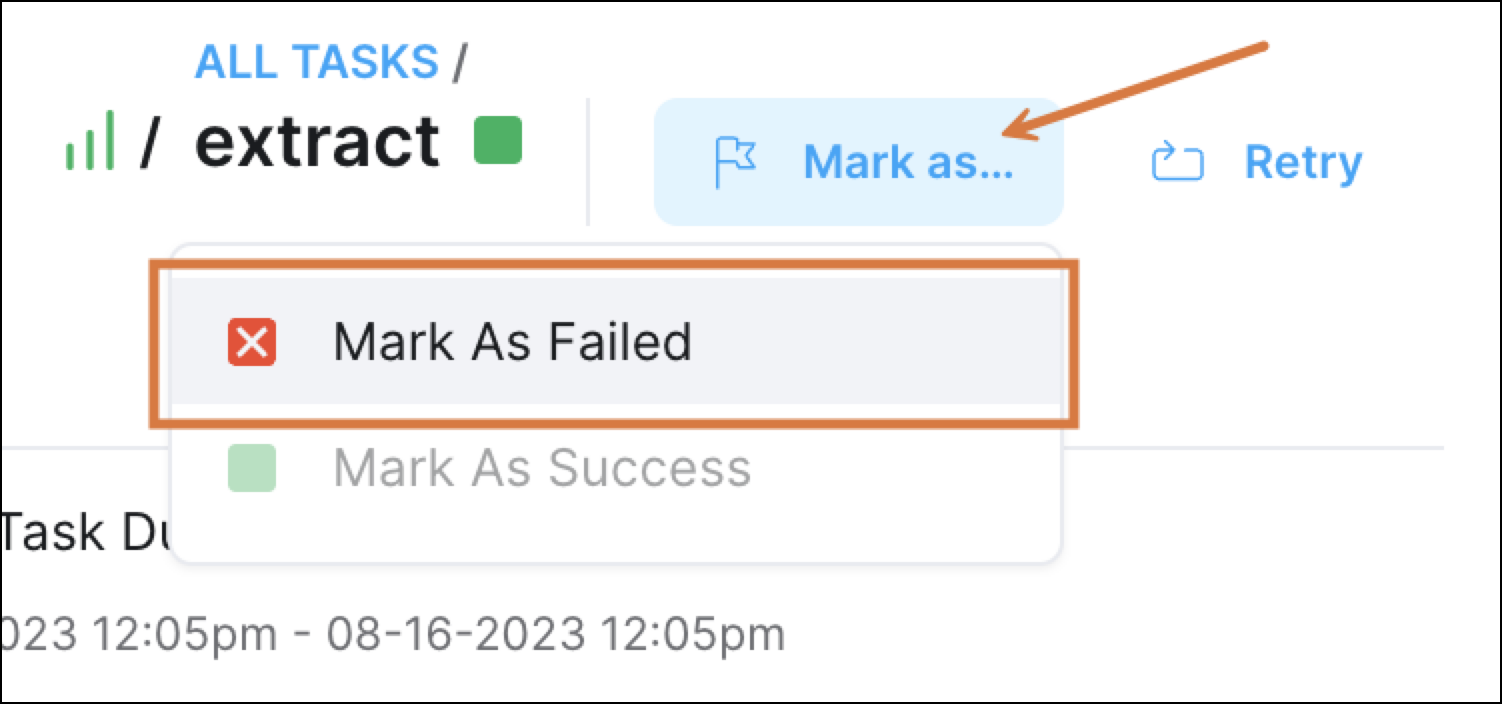

Select Mark as and choose Failed to trigger an alert for a DAG failure.

-

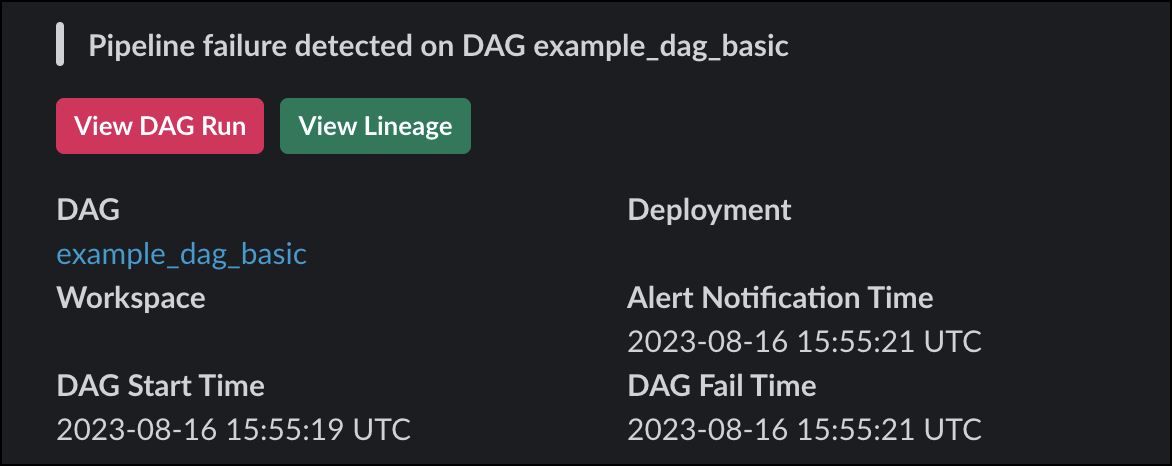

Check your Slack, PagerDuty, or Email alerts for your DAG failure alert. The alert includes information about the DAG, Workspace, Deployment, and data lineage associated with the failure as well as direct links to the Astro UI.