Migrate to Astro from Google Cloud Composer

This guide provides instructions for migrating an Airflow environment from Google Cloud Composer (GCC) to Astro.

To complete the migration process, you will:

- Prepare your source Airflow environment and set up your Astro environment.

- Migrate metadata from your source Airflow environment.

- Migrate DAGs and additional Airflow components from your source Airflow environment.

- Complete the cutover process to Astro.

Prerequisites

Before starting the migration, ensure that the following are true:

- You have an Astro user account and can log in to Astro.

- (Optional) You created a network connection from Astro to your external cloud resources.

- (Optional) You configured your identity provider (IdP).

On your local machine, make sure you have:

- An Astro account.

- The Astro CLI.

On the cloud service from which you're migrating, ensure that you have:

- A source Airflow environment on Airflow 2 or later.

- Read access to the source Airflow environment.

- Read access to any cloud storage buckets that store your DAGs.

- Read access to any source control tool that hosts your current Airflow code, such as GitHub.

- Permission to create new repositories on your source control tool.

- (Optional) Access to your secrets backend.

- (Optional) Permission to create new CI/CD pipelines.

All source Airflow environments on 1.x need to be upgraded to at least Airflow 2.0 before you can migrate them. Astronomer professional services can help you with the upgrade process.

If you're migrating to Astro from OSS Airflow or another Astronomer product, and you currently use an older version of Airflow, you can still create Deployments with the corresponding version of Astro Runtime even if it is deprecated according to the Astro Runtime maintenance policy. This allows you to migrate your DAGs to Astro without needing to make any code changes and then immediately upgrade to a new version of Airflow. Note that after you migrate your DAGs, Astronomer recommends upgrading to a supported version of Astro Runtime as soon as you can.

You can additionally use the gcloud CLI to expedite some steps in this guide.

Step 1: Install Astronomer Starship

The Astronomer Starship migration utility connects your source Airflow environment to your Astro Deployment and migrates your Airflow connections, Airflow variables, environment variables, and DAGs.

The Starship migration utility works as a plugin with a user interface, or as an Airflow operator if you are migrating from a more restricted Airflow environment.

See the following table for information on which versions of Starship are available depending on your source Airflow environment:

| Source Airflow environment | Starship plugin | Starship operator |
|---|---|---|
| Airflow 1.x | ❌ | ❌ |
| Cloud Composer 1 - Airflow 2.x | ✔️ | |
| Cloud Composer 2 - Airflow 2.x | ✔️ | |

To install the Starship plugin on your Cloud Composer 1 or Cloud Composer 2 instance, install the astronomer-starship package in your source Airflow environment. See Install packages from PyPI.

You can alternatively complete this installation with the gcloud CLI by running the following command:

    gcloud composer environments update [GCC_ENVIRONMENT_NAME] \
        --location [LOCATION] \
        --update-pypi-package=astronomer-starship
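If you script this installation across several environments, the command above can be assembled programmatically. The following is a minimal sketch, not an official tool: `build_starship_install_command` is a hypothetical helper name, the environment name and location are example values, and the sketch only builds and prints the command rather than running it.

```python
import shlex


def build_starship_install_command(environment_name: str, location: str) -> list[str]:
    """Return the argv list for the gcloud command shown above (hypothetical helper)."""
    return [
        "gcloud", "composer", "environments", "update", environment_name,
        "--location", location,
        "--update-pypi-package=astronomer-starship",
    ]


# Example values only; substitute your own environment name and location.
command = build_starship_install_command("my-composer-env", "us-central1")

# Print a copy-pasteable shell command. Pass `command` to subprocess.run to execute it.
print(shlex.join(command))
```

Building the command as a list avoids shell quoting problems if an environment name contains unusual characters.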

Step 2: Create an Astro Workspace

In your Astro Organization, you can create Workspaces, which are collections of users that have access to the same Deployments. Workspaces are typically owned by a single team.

You can choose to use an existing Workspace, or create a new one. However, you must have at least one Workspace to complete your migration.

- Follow the steps in Manage Workspaces to create a Workspace in the Astro UI for your migrated Airflow environments. Astronomer recommends naming your first Workspace after your data team or initial business use case with Airflow. You can update these names in the Astro UI after you finish the migration.

- Follow the steps in Manage Astro users to add users from your team to the Workspace. See Astro user permissions for details about each available Workspace user role.

You can also add users to a Workspace or an Organization using the Astro CLI, and you can automate adding batches of users to Astro with shell scripts. See Add a group of users to Astro using the Astro CLI.

Step 3: Create an Astro Deployment

A Deployment is an Astro Runtime environment that is powered by the core components of Apache Airflow. In a Deployment, you can deploy and run DAGs, configure worker resources, and view metrics.

You can choose to use an existing Deployment, or create a new one. However, you must have at least one Deployment to complete your migration.

Before you create your Deployment, copy the following information from your source Airflow environment:

- Environment name

- Airflow version

- Environment class or size

- Number of schedulers

- Minimum number of workers

- Maximum number of workers

- Execution role permissions

- Airflow configurations

- Environment variables
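One way to keep these values together while you work through the rest of this step is a small record type. This is only an illustrative sketch: `SourceEnvironment` and its field names are hypothetical and are not part of Astro, Starship, or Cloud Composer, and the sample values are invented.

```python
from dataclasses import dataclass, field


@dataclass
class SourceEnvironment:
    """Settings copied from the source environment (hypothetical record, not an Astro API)."""

    name: str
    airflow_version: str
    environment_size: str
    scheduler_count: int
    min_workers: int
    max_workers: int
    execution_role_permissions: list[str] = field(default_factory=list)
    airflow_configurations: dict[str, str] = field(default_factory=dict)
    environment_variables: dict[str, str] = field(default_factory=dict)

    def validate(self) -> None:
        """Catch obvious transcription mistakes before creating the Deployment."""
        if self.scheduler_count < 1:
            raise ValueError("scheduler_count must be at least 1")
        if self.min_workers > self.max_workers:
            raise ValueError("min_workers cannot exceed max_workers")


# Invented example values for illustration.
source = SourceEnvironment(
    name="analytics-prod",
    airflow_version="2.4.3",
    environment_size="Medium",
    scheduler_count=2,
    min_workers=1,
    max_workers=10,
)
source.validate()
```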

- Option 1: Astro UI

- Option 2: Astro CLI & Deployments-as-Code

Option 1: Astro UI

This setup varies slightly for Astro Hybrid users. See Deployment settings for all configurations related to Astro Hybrid Deployments.

- In the Astro UI, select a Workspace.

- On the Deployments page, click Deployment.

- Complete the following fields:

- Name: Enter the name of your source Airflow environment.

- Astro Runtime: Select the Runtime version that's based on the Airflow version in your source Airflow environment. See the following table to determine which version of Runtime to use. Where exact version matches are not available, the nearest Runtime version is provided with its supported Airflow version in parentheses.

| Airflow version | Runtime version |
|---|---|
| 2.0 | 3.0.4 (Airflow 2.1.1)¹ |
| 2.2 | 4.2.9 (Airflow 2.2.5) |
| 2.4 | 6.3.0 (Airflow 2.4.3) |

¹The earliest available Airflow version on Astro Runtime is 2.1.1. There are no known risks for upgrading directly from Airflow 2.0 to Airflow 2.1.1 during migration. For a complete list of supported Airflow versions, see Astro Runtime release and lifecycle schedule.

- Description: (Optional) Enter a description for your Deployment.

- Cluster: Choose whether you want to run your Deployment in a Standard cluster or Dedicated cluster. If you don't have specific networking or cloud requirements, Astronomer recommends using the default Standard cluster configurations.

To configure and use dedicated clusters, see Create a dedicated cluster. If you don't have the option of choosing between standard or dedicated, that means you are an Astro Hybrid user and must choose a cluster that has been configured for your Organization. See Manage Hybrid clusters.

- Executor: Choose the same executor as in your source Airflow environment.

- Scheduler: Use the following table to determine the Deployment size you need based on the size of your source Airflow environment.

| Environment size | Scheduler size | vCPU | Memory | Ephemeral storage |
|---|---|---|---|---|
| Small (Up to ~50 DAGs) | Small | 1 | 2 GiB | 5 GiB |
| Medium (Up to ~250 DAGs) | Medium | Scheduler: 1, DAG Processor: 1 | Scheduler: 2 GiB, DAG Processor: 2 GiB² | 5 GiB |
| Large (Up to ~1000 DAGs) | Large | Scheduler: 1, DAG Processor: 3 | Scheduler: 2 GiB, DAG Processor: 6 GiB² | 5 GiB |
| Extra Large (Up to ~2000 DAGs) | Extra-large | Scheduler: 1, DAG Processor (x2): 3.5 | Scheduler: 4 GiB, DAG Processor (x2): 6 GiB² | 5 GiB |

²Some of these recommendations for CPU and memory might be less than what you currently allocate to Airflow components in your source environment. If you notice significant performance differences or your Deployment on Astro parses DAGs more slowly than your source Airflow environment, adjust your resource use on Astro. See Configure Deployment resources.

- Worker Type: Select the worker type for your default worker queue. See Worker queues.

- Min / Max # Workers: Set the same minimum and maximum worker count as in your source Airflow environment.

- KPO Pods: (Optional) If you use the KubernetesPodOperator or Kubernetes Executor, set limits on how many resources your tasks can request.

- Click Create Deployment.

- Specify any system-level environment variables as Astro environment variables. See Environment variables.

- Set an email to receive alerts from Astronomer support about your Deployments. See Configure Deployment contact emails.
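The Runtime version lookup described in the Astro Runtime field can be sketched as a small mapping. The version pairs below are copied from the Runtime version table in this guide; `runtime_for_airflow` is a hypothetical helper, and the sketch deliberately refuses to guess for Airflow versions the table does not list.

```python
# Airflow minor version -> Astro Runtime version, copied from the table in this guide.
RUNTIME_BY_AIRFLOW_MINOR = {
    "2.0": "3.0.4",  # nearest match; this Runtime version is based on Airflow 2.1.1
    "2.2": "4.2.9",  # based on Airflow 2.2.5
    "2.4": "6.3.0",  # based on Airflow 2.4.3
}


def runtime_for_airflow(airflow_version: str) -> str:
    """Return the Runtime version listed for an Airflow version such as '2.4.3'."""
    major, minor, *_ = airflow_version.split(".")
    key = f"{major}.{minor}"
    if key not in RUNTIME_BY_AIRFLOW_MINOR:
        # The table in this guide has no row for this version, so do not guess.
        raise KeyError(f"No Runtime version listed in this guide for Airflow {key}")
    return RUNTIME_BY_AIRFLOW_MINOR[key]


print(runtime_for_airflow("2.4.3"))
```

For versions that are not in the table, consult the Astro Runtime release and lifecycle schedule rather than extending the mapping by hand.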

Option 2: Astro CLI & Deployments-as-Code

This option is available only on Astro Hybrid.

- On your local machine, create a directory with the name of the source Airflow environment. In this directory, create a file called config.yaml.

- Open config.yaml and add the following:

    deployment:
      environment_variables:
        - is_secret: <true-or-false>
          key: <variable-name>
          value: <variable-value>
      configuration:
        name: <deployment-name>
        description: <deployment-description>
        runtime_version: <runtime-version>
        dag_deploy_enabled: false
        scheduler_au: <scheduler-au>
        scheduler_count: <scheduler-count>
        cluster_name: <cluster-name>
        workspace_name: <workspace-name>
      worker_queues:
        - name: default
          max_worker_count: <max-worker-count>
          min_worker_count: <min-worker-count>
          worker_concurrency: 16
          worker_type: <worker-type>
      alert_emails:
        - <alert-email>

- Replace the placeholder values in the configuration:

- <true-or-false>/<variable-name>/<variable-value>: Set system-level environment variables for your Deployment and specify whether they should be secret. Repeat this configuration in the file for any additional variables you need to set.

- <deployment-name>: Enter the same name as your source Airflow environment.

- <deployment-description>: (Optional) Enter a description for your Deployment.

- <runtime-version>: Select the Runtime version that's based on the Airflow version in your source Airflow environment. See the following table to determine which version of Runtime to use. Where exact version matches are not available, the nearest Runtime version is provided with its supported Airflow version in parentheses.

| Airflow version | Runtime version |
|---|---|
| 2.0 | 3.0.4 (Airflow 2.1.1)¹ |
| 2.2 | 4.2.9 (Airflow 2.2.5) |
| 2.4 | 6.3.0 (Airflow 2.4.3) |

¹The earliest available Airflow version on Astro Runtime is 2.1.1. There are no known risks for upgrading directly from Airflow 2.0 to Airflow 2.1.1 during migration. For a complete list of supported Airflow versions, see Astro Runtime release and lifecycle schedule.

- <scheduler-au>: Set your scheduler size in Astronomer Units (AU). An AU is a unit of CPU and memory allocated to each scheduler in a Deployment. Use the following table to determine how many AUs you need based on the size of your source Airflow environment.

| Environment size | AUs | CPU / memory |
|---|---|---|
| Small (Up to ~50 DAGs) | 5 | 0.5 vCPU, 1.88 GiB |
| Medium (Up to ~250 DAGs) | 10 | 1 vCPU, 3.75 GiB² |
| Large (Up to ~1000 DAGs) | 15 | 1.5 vCPU, 5.64 GiB² |

² Some of these recommendations for CPU and memory are smaller than what you have in the equivalent source environment. Although you might not need more CPU or memory than what's recommended, your Deployment might initially parse DAGs more slowly than your source Airflow environment. Use these recommendations as a starting point, then adjust your resource usage after tracking your performance on Astro.

- <scheduler-count>: Specify the same number of schedulers as in your source Airflow environment.

- <cluster-name>: Specify the name of the Astro cluster in which you want to create this Deployment.

- <workspace-name>: The name of the Workspace you created.

- <max-worker-count>/<min-worker-count>: Specify the same minimum and maximum worker count as in your source Airflow environment.

- <worker-type>: Specify the worker type for your default worker queue. You can see which worker types are available for your cluster in the Clusters menu of the Astro UI. If you did not customize the available worker types for your cluster, the default available worker types are:

| AWS | GCP | Azure |
|---|---|---|
| M5.XLARGE | E2-STANDARD-4 | STANDARD_D4D_V5 |

- <alert-email>: Set an email to receive alerts from Astronomer support about your Deployments. See Set up Astro alerts.
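The AU sizing table above can be expressed as a simple threshold lookup. This is a sketch under stated assumptions: the DAG-count thresholds in the table are approximate ("up to ~50 DAGs"), `scheduler_au_for` is a hypothetical helper, and the table gives no recommendation above roughly 1000 DAGs, so the sketch raises an error there instead of extrapolating.

```python
# (approximate maximum DAG count, scheduler AUs), copied from the AU table in this guide.
AU_TIERS = [
    (50, 5),     # Small
    (250, 10),   # Medium
    (1000, 15),  # Large
]


def scheduler_au_for(dag_count: int) -> int:
    """Return the starting scheduler AU value for an environment with `dag_count` DAGs."""
    if dag_count < 0:
        raise ValueError("dag_count cannot be negative")
    for max_dags, au in AU_TIERS:
        if dag_count <= max_dags:
            return au
    # No tier in this guide covers larger environments.
    raise ValueError(f"No AU recommendation in this guide for {dag_count} DAGs")


print(scheduler_au_for(120))
```

Treat the result as the starting value for scheduler_au, then adjust after you observe scheduler performance on Astro.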

After you finish entering these values, your config.yaml file should look something like the following:

    deployment:
      environment_variables:
        - is_secret: true
          key: MY_VARIABLE_KEY
          value: MY_VARIABLE_VALUE
        - is_secret: false
          key: MY_VARIABLE_KEY_2
          value: MY_VARIABLE_VALUE_2
      configuration:
        name: My Deployment
        description: The Deployment I'm using for migration.
        runtime_version: 6.3.0
        dag_deploy_enabled: false
        scheduler_au: 5
        scheduler_count: 2
        cluster_name: My Cluster
        workspace_name: My Workspace
      worker_queues:
        - name: default
          max_worker_count: 10
          min_worker_count: 1
          worker_concurrency: 16
          worker_type: E2-STANDARD-4
      alert_emails:
        - myalertemail@cosmicenergy.org

- Run the following command to push your configuration to Astro and create your Deployment:

    astro deployment create --deployment-file config.yaml
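Before you run the command above, it can help to confirm that no template placeholders such as `<runtime-version>` are left in config.yaml. The following is a minimal sketch using only the standard library; `find_unreplaced_placeholders` is a hypothetical helper, and it assumes placeholders follow the lowercase, hyphenated `<name>` form used in this guide.

```python
import re

# Matches placeholders written like <deployment-name> or <alert-email>.
PLACEHOLDER = re.compile(r"<[a-z][a-z0-9-]*>")


def find_unreplaced_placeholders(config_text: str) -> list[str]:
    """Return the distinct placeholders still present in the file contents, sorted."""
    return sorted(set(PLACEHOLDER.findall(config_text)))


# Example fragment in which one placeholder was overlooked.
sample = """\
deployment:
  configuration:
    name: My Deployment
    runtime_version: <runtime-version>
    scheduler_count: 2
"""
leftovers = find_unreplaced_placeholders(sample)
print(leftovers)
```

To check a real file, read it with `pathlib.Path("config.yaml").read_text()` and pass the result to the function.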

Step 4: Use Starship to migrate Airflow connections and variables

You might have defined Airflow connections and variables in the following places on your source Airflow environment:

- The Airflow UI (stored in the Airflow metadata database).

- Environment variables.

- A secrets backend.

If you defined your Airflow variables and connections in the Airflow UI, you can migrate those to Astro with Starship. To check which resources will be migrated, go to Admin > Variables and Admin > Connections in the Airflow UI of your source Airflow environment.

Some environment variables or Airflow Settings, like global environment variable values, can't be migrated to Astro. See Global environment variables for a list of variables that you can't migrate to Astro.
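To see which connections and variables in your source environment come from environment variables rather than the metadata database, you can look for the `AIRFLOW_CONN_` and `AIRFLOW_VAR_` prefixes that Airflow uses for this purpose. The sketch below is illustrative only: `classify_airflow_env_vars` is a hypothetical helper, the sample values are invented, and the identifiers are lowercased purely for readability.

```python
CONN_PREFIX = "AIRFLOW_CONN_"
VAR_PREFIX = "AIRFLOW_VAR_"


def classify_airflow_env_vars(environ: dict[str, str]) -> dict[str, list[str]]:
    """Group environment variable names into Airflow connections and variables."""
    connections = sorted(
        key[len(CONN_PREFIX):].lower() for key in environ if key.startswith(CONN_PREFIX)
    )
    variables = sorted(
        key[len(VAR_PREFIX):].lower() for key in environ if key.startswith(VAR_PREFIX)
    )
    return {"connections": connections, "variables": variables}


# Invented sample; in a real environment, pass dict(os.environ) instead.
sample_env = {
    "AIRFLOW_CONN_MY_POSTGRES": "postgresql://user:pass@host:5432/db",
    "AIRFLOW_VAR_TARGET_BUCKET": "my-bucket",
    "PATH": "/usr/bin",
}
found = classify_airflow_env_vars(sample_env)
print(found)
```

Anything this reports is defined through environment variables, so check it against the Environment variables tab in Starship rather than the Connections or Variables tabs.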

- Starship Plugin

- Starship operator

Starship plugin

- Log in to Astro. In the Astro UI, open the Deployment you're migrating to.

- Click Open Airflow to open the Airflow UI for the Deployment. Copy the URL for the home page. It should look similar to https://<your-organization>.astronomer.run/<id>/home.

- Create a Deployment API token for the Deployment. The token should minimally have permissions to update the Deployment and deploy code. Copy this token. See Create and manage Deployment API tokens for additional setup steps.

-

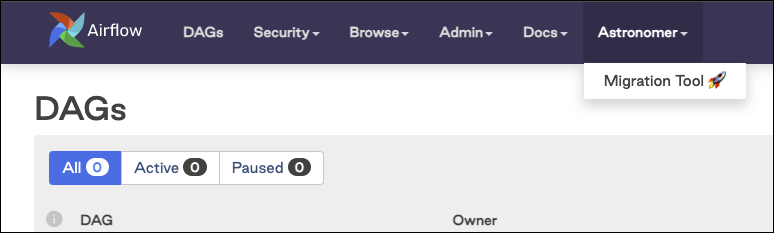

Open the Airflow UI for your source Airflow environment, then go to Astronomer > Migration Tool 🚀.

-

Ensure that the Astronomer Product toggle is set to Astro.

-

In the Airflow URL section, fill in the fields so that the complete URL on the page matches the URL of the Airflow UI for the Deployment you're migrating to.

-

Specify your API token in the Token field. Starship will confirm that it has access to your Deployment.

-

Click Connections. In the table that appears, click Migrate for each connection that you want to migrate to Astro. After the migration is complete, the status Migrated ✅ appears.

-

Click Pools. In the table that appears, click Migrate for each connection that you want to migrate to Astro. After the migration is complete, the status Migrated ✅ appears.

-

Click Variables. In the table that appears, click Migrate for each variable that you want to migrate to Astro. After the migration is complete, the status Migrated ✅ appears.

-

Click Environment variables. In the table that appears, check the box for each environment variable that you want to migrate to Astro, then click Migrate. After the migration is complete, the status Migrated ✅ appears.

-

Click DAG History. In the table that appears, check the box for each DAG whose history you want to migrate to Astro, then click Migrate. After the migration is complete, the status Migrated ✅ appears.

Starship operator

Refer to Configuration for detailed instructions on using the operator.

- Log in to Astro. In the Astro UI, open the Deployment you're migrating to.

- Click Open Airflow to open the Airflow UI for the Deployment. Copy the URL for the home page. It should look similar to https://<your-organization>.astronomer.run/<id>/home.

- Create a Deployment API token for the Deployment. The token should minimally have permissions to update the Deployment and deploy code. Copy this token. See Create and manage Deployment API tokens for additional setup steps.

- Add the following DAG to your source Airflow environment:

    from datetime import datetime

    from airflow.models.dag import DAG
    from astronomer.starship.operators import AstroMigrationOperator

    # Manually triggered DAG: no schedule, so it runs only when you trigger it.
    with DAG(
        dag_id="astronomer_migration_dag",
        start_date=datetime(1970, 1, 1),
        schedule_interval=None,
    ) as dag:
        # The URL and token are read from the trigger configuration at run time.
        AstroMigrationOperator(
            task_id="export_meta",
            deployment_url='{{ dag_run.conf["deployment_url"] }}',
            token='{{ dag_run.conf["astro_token"] }}',
        )

- Deploy this DAG to your source Airflow environment.

- Once the DAG is available in the Airflow UI, click Trigger DAG, then click Trigger DAG w/ config.

- In Configuration JSON, add the following configuration:

    {
        "deployment_url": "<your-deployment-url>",
        "astro_token": "<your-astro-token>"
    }

- Replace the following placeholder values:

- <your-deployment-url>: The Deployment URL you copied earlier in this procedure.

- <your-astro-token>: The Deployment API token you created earlier in this procedure.

- Click Trigger. After the DAG successfully runs, all connections, variables, and environment variables that are available from the Airflow UI are migrated to Astro.
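If you prefer to generate the Configuration JSON rather than type it, the trigger configuration can be built and sanity-checked in a few lines. This is a sketch, not part of Starship: `build_trigger_conf` is a hypothetical helper, the URL and token are invented example values, and the only checks are that the URL uses HTTPS and the token is not empty.

```python
import json
from urllib.parse import urlparse


def build_trigger_conf(deployment_url: str, astro_token: str) -> str:
    """Return the JSON to paste into the Configuration JSON field."""
    parsed = urlparse(deployment_url)
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError("deployment_url should be a full https:// URL")
    if not astro_token.strip():
        raise ValueError("astro_token cannot be empty")
    # Keys match the dag_run.conf lookups used by the migration DAG above.
    return json.dumps(
        {"deployment_url": deployment_url, "astro_token": astro_token}, indent=2
    )


# Invented example values; use the URL and token you copied from Astro.
conf = build_trigger_conf(
    "https://example-org.astronomer.run/abc123/home", "example-token"
)
print(conf)
```

Avoid committing the generated JSON to source control, because it contains your Deployment API token.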

Step 5: Create an Astro project

- Create a new directory for your Astro project:

    mkdir <your-astro-project-name>

- Open the directory:

    cd <your-astro-project-name>

- Run the following Astro CLI command to initialize an Astro project in the directory:

    astro dev init

This command generates a set of files that will build into a Docker image that you can both run on your local machine and deploy to Astro.

- Add the following line to your Astro project requirements.txt file:

    astronomer-starship

When you deploy your code, this line installs the Starship migration tool on your Deployment so that you can migrate Airflow resources from your source environment to Astro.

- (Optional) Run the following command to initialize a new git repository for your Astro project:

    git init
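If you set up several Astro projects, the requirements.txt edit in this step can be made repeatable. The sketch below appends astronomer-starship only when it is not already listed, so running it twice does not create a duplicate line. `ensure_requirement` is a hypothetical helper, and the demo writes to a temporary directory rather than a real project.

```python
import re
import tempfile
from pathlib import Path


def ensure_requirement(requirements_path: Path, package: str) -> bool:
    """Append `package` unless it is already listed. Returns True if the file changed."""
    lines = requirements_path.read_text().splitlines() if requirements_path.exists() else []
    # Compare on the bare package name so a pinned entry still counts as present.
    names = {
        re.split(r"[\s<>=!~\[;]", line.strip(), maxsplit=1)[0].lower() for line in lines
    }
    if package.lower() in names:
        return False
    lines.append(package)
    requirements_path.write_text("\n".join(lines) + "\n")
    return True


# Demo against a throwaway file; point the path at your project's requirements.txt instead.
with tempfile.TemporaryDirectory() as tmp:
    requirements = Path(tmp) / "requirements.txt"
    requirements.write_text("pandas==1.5.3\n")
    first_run = ensure_requirement(requirements, "astronomer-starship")
    second_run = ensure_requirement(requirements, "astronomer-starship")
    final_lines = requirements.read_text().splitlines()

print(first_run, second_run, final_lines)
```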

Step 6: Migrate project code and dependencies to your Astro project

- Manual

- gcloud CLI

Manual

- Open your Astro project Dockerfile. Update the Runtime version in the first line to the version you selected for your Deployment in Step 3. For example, if your Runtime version was 6.3.0, your Dockerfile would look like the following:

    FROM quay.io/astronomer/astro-runtime:6.3.0

The Dockerfile defines the environment where all your Airflow components run. You can modify it to include build-time arguments for your Airflow environment, such as environment variables or credentials. For this migration, you only need to modify the Dockerfile to update your Astro Runtime version.

- Open your Astro project requirements.txt file and add all Python packages that you installed in your source Airflow environment. See Google documentation for how to view a list of Python packages in your source Airflow environment.

Warning: To avoid breaking dependency upgrades, Astronomer recommends pinning your packages to the versions running in your source Airflow environment. For example, if you're running apache-airflow-providers-snowflake version 3.3.0 on Cloud Composer, you would add apache-airflow-providers-snowflake==3.3.0 to your Astro requirements.txt file.

- Open your Astro project dags folder. Copy your DAG files to dags from either your source control platform or GCS bucket.

- If you used the plugins folder in your Cloud Composer storage bucket, copy the contents of this folder from your source control platform or GCS bucket to your Astro project /plugins folder.

- If you used the data folder in Cloud Composer, copy the contents of that folder from your source control platform or GCS bucket to your Astro project include folder.
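To follow the pinning recommendation above, you can scan requirements.txt for entries that lack an exact `==` version. This is a minimal sketch: `unpinned_requirements` is a hypothetical helper, it treats only `==` as pinned, and it does not handle every requirement syntax (for example, URL-based requirements).

```python
def unpinned_requirements(lines: list[str]) -> list[str]:
    """Return requirement lines that are not pinned to an exact version."""
    unpinned = []
    for raw in lines:
        # Drop trailing comments and surrounding whitespace.
        line = raw.split("#", 1)[0].strip()
        if line and "==" not in line:
            unpinned.append(line)
    return unpinned


sample_requirements = [
    "apache-airflow-providers-snowflake==3.3.0",
    "pandas",
    "# comment-only lines are ignored",
    "requests>=2.0  # a lower bound is not an exact pin",
]
needs_pin = unpinned_requirements(sample_requirements)
print(needs_pin)
```

For each package reported, look up the version installed in your source Airflow environment and add it with `==`.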

After you confirm that your Astro project has all necessary dependencies, deploy the project to your Astro Deployment.

- Run the following command to authenticate to Astro:

    astro login

- Run the following command to deploy your project:

    astro deploy

This command returns a list of Deployments available in your Workspace and prompts you to pick one.

gcloud CLI

- Open your Astro project Dockerfile. Update the Runtime version in the first line to the version you selected for your Deployment in Step 3. For example, if your Runtime version was 6.3.0, your Dockerfile would look like the following:

    FROM quay.io/astronomer/astro-runtime:6.3.0

The Dockerfile defines the environment where all your Airflow components run. You can modify it to include build-time arguments for your Airflow environment, such as environment variables or credentials. For this migration, you only need to modify the Dockerfile to update your Astro Runtime version.

- Open your Astro project in your terminal. Run the following command to copy your PyPI packages from GCC to your requirements.txt file. In this and the following commands, replace <your-gcc-environment-name> and <location> with the name and location of your Cloud Composer environment:

    gcloud composer environments describe <your-gcc-environment-name> --location <location> --format="value(config.softwareConfig.pypiPackages)" > requirements.txt

Review the output in requirements.txt after running this command to ensure that all packages were imported on their own line of text.

Warning: To avoid breaking dependency upgrades, Astronomer recommends pinning your packages to the versions running in your source Airflow environment. For example, if you're running apache-airflow-providers-snowflake version 3.3.0 on Cloud Composer, you would add apache-airflow-providers-snowflake==3.3.0 to your Astro requirements.txt file.

- Run the following command to copy your DAGs from Cloud Composer to your dags folder:

    gcloud composer environments storage dags export --environment <your-gcc-environment-name> --location <location> --destination=dags

Review your DAG files in dags after running this command to ensure that all DAGs were successfully exported.

- If you used the plugins folder in your Cloud Composer storage bucket, run the following command to copy your plugins folder contents to your Astro project:

    gcloud composer environments storage plugins export --environment <your-gcc-environment-name> --location <location> --destination=plugins

Review the contents of your Astro project plugins folder to ensure that all files were successfully exported.

- If you used the data folder in Cloud Composer, run the following command to copy your data folder contents to your Astro project:

    gcloud composer environments storage data export --environment <your-gcc-environment-name> --location <location> --destination=include
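If the requirements.txt produced by the describe command needs manual cleanup, one alternative is to request JSON output and convert it yourself. The sketch below assumes you ran the describe command with `--format=json` instead of the `value(...)` format and saved the output, and that `config.softwareConfig.pypiPackages` is an object mapping each package name to its version specifier (an empty string when unpinned). `requirements_from_describe` is a hypothetical helper and the sample JSON is invented.

```python
import json


def requirements_from_describe(describe_json: str) -> list[str]:
    """Convert `gcloud composer environments describe --format=json` output to requirement lines."""
    environment = json.loads(describe_json)
    packages = (
        environment.get("config", {}).get("softwareConfig", {}).get("pypiPackages", {})
    )
    # Each value already carries its specifier (for example "==3.3.0"), so concatenate directly.
    return [f"{name}{specifier}" for name, specifier in sorted(packages.items())]


# Invented sample of the relevant part of the describe output.
sample_output = json.dumps(
    {
        "config": {
            "softwareConfig": {
                "pypiPackages": {
                    "pandas": "",
                    "apache-airflow-providers-snowflake": "==3.3.0",
                }
            }
        }
    }
)
requirement_lines = requirements_from_describe(sample_output)
print("\n".join(requirement_lines))
```

Each package lands on its own line, which is what the review step above asks you to confirm.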

After you confirm that your Astro project has all necessary dependencies, deploy the project to your Astro Deployment.

- Run the following command to authenticate to Astro:

    astro login

- Run the following command to deploy your project:

    astro deploy

This command returns a list of Deployments available in your Workspace and prompts you to pick one.

Step 7: Configure additional data pipeline infrastructure

The core migration of your project is now complete. Read the following topics to see whether you need to set up any additional infrastructure on Astro before cutting over your DAGs.

Set up CI/CD

If you used CI/CD to deploy code to your source Airflow environment, set up a similar CI/CD pipeline for your Astro project.

Similarly to GCC, you can deploy DAGs to Astro directly from a Google Cloud Storage (GCS) bucket. See Deploy DAGs from Google Cloud Storage to Astro.

Set up a secrets backend

If you currently store Airflow variables or connections in a secrets backend, you need to integrate your secrets backend with Astro to access those objects from your migrated DAGs. See Configure a Secrets Backend for setup steps.

Instance permissions and trust policies

You can utilize Workload Identity or Service Account Keys to grant your Astro Deployment the same level of access to Google Services as your source Airflow environment. See Connect GCP - Authorization options.

Step 8: Test locally and check for import errors

Depending on how thoroughly you want to test your Airflow environment, you have a few options for testing your project locally before deploying to Astro.

- In your Astro project directory, run astro dev parse to check for any parsing errors in your DAGs.

- Run astro run <dag-id> to test a specific DAG. This command compiles your DAG and runs it in a single Airflow worker container based on your Astro project configurations.

- Run astro dev start to start a complete Airflow environment on your local machine. After your project starts up, you can access the Airflow UI at localhost:8080. See Troubleshoot your local Airflow environment.

Note that your migrated Airflow variables and connections are not available locally. You must deploy your project to Astro to test these resources.
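As a quick first pass before the commands above, you can check copied DAG files for Python syntax errors without starting any containers. This sketch is not a substitute for astro dev parse: it only compiles each file, so it does not catch missing packages or other import errors. `find_syntax_errors` is a hypothetical helper, and the demo uses a temporary folder with one deliberately broken file.

```python
import tempfile
from pathlib import Path


def find_syntax_errors(dags_dir: Path) -> dict[str, str]:
    """Compile every .py file under `dags_dir` and report files that fail to compile."""
    errors = {}
    for path in sorted(dags_dir.rglob("*.py")):
        try:
            compile(path.read_text(), str(path), "exec")
        except SyntaxError as exc:
            errors[path.name] = f"line {exc.lineno}: {exc.msg}"
    return errors


# Demo with a throwaway folder; pass Path("dags") inside your Astro project instead.
with tempfile.TemporaryDirectory() as tmp:
    dags = Path(tmp)
    (dags / "good_dag.py").write_text("x = 1\n")
    (dags / "bad_dag.py").write_text("def broken(:\n    pass\n")
    syntax_errors = find_syntax_errors(dags)

print(sorted(syntax_errors))
```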

Step 9: Deploy to Astro

- Run the following command to authenticate to Astro:

    astro login

- Run the following command to deploy your project:

    astro deploy

This command returns a list of Deployments available in your Workspace and prompts you to pick one.

- In the Astro UI, open your Deployment and click Open Airflow. Confirm that you can see your deployed DAGs in the Airflow UI.

Step 10: Cut over from your source Airflow environment to Astro

After you successfully deploy your code to Astro, you need to migrate your workloads from your source Airflow environment to Astro on a DAG-by-DAG basis. Depending on how your workloads are set up, Astronomer recommends letting DAG owners determine the order to migrate and test DAGs.

You can complete the following steps in the days or weeks following your migration setup. Provide updates to your Astronomer Data Engineer as they continue to assist you through the process and solve any difficulties that arise.

Continue to validate and move your DAGs until you have fully cut over your source Airflow instance. After you finish migrating from your source Airflow environment, repeat the complete migration process for any other Airflow instances in your source Airflow environment.

Confirm connections and variables

In the Airflow UI for your Deployment, test all connections that you migrated from your source Airflow environment.

Additionally, check Airflow variable values in Admin > Variables.

Test and validate DAGs in Astro

To create a strategy for testing DAGs, determine which DAGs need the most care when running and testing them.

If your DAG workflow is idempotent and can run twice or more without negative effects, you can run and test these DAGs with minimal risk. If your DAG workflow is non-idempotent and can become invalid when you rerun it, you should test the DAG with more caution and downtime.
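The difference between the two cases can be illustrated with a toy load step. In this sketch, in-memory Python objects stand in for a destination table, and both function names are hypothetical. The append version duplicates rows when the same run executes twice (for example, once on each environment during testing), while the overwrite version produces the same result no matter how many times it runs.

```python
def append_load(target: list, rows: list) -> None:
    """Non-idempotent: every rerun appends the same rows again."""
    target.extend(rows)


def overwrite_partition(target: dict, run_date: str, rows: list) -> None:
    """Idempotent: a rerun for the same date replaces that date's partition."""
    target[run_date] = list(rows)


rows = [{"order_id": 1}, {"order_id": 2}]
appended: list = []
partitioned: dict = {}

# Simulate the same DAG run executing twice.
for _ in range(2):
    append_load(appended, rows)
    overwrite_partition(partitioned, "2024-01-01", rows)

print(len(appended))                   # 4: the rows were duplicated
print(len(partitioned["2024-01-01"]))  # 2: the rerun had no extra effect
```

DAGs that follow the second pattern are the ones you can run on both environments during validation with minimal risk.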

Cut over DAGs to Astro using Starship

Starship includes features for simultaneously pausing DAGs in your source Airflow environment and starting them on Astro. This allows you to cut over your production workflows without downtime.

- Starship Plugin

- Starship Operator

Starship plugin

For each DAG in your Astro Deployment:

- Confirm that the DAG ID in your Deployment is the same as the DAG ID in your source Airflow environment.

- In the Airflow UI for your source Airflow environment, go to Astronomer > Migration Tool 🚀.

- Click DAGs cutover. In the table that appears, click the Pause icon in the Local column for the DAG you're cutting over.

- Click the Start icon in the Remote column for the DAG you're cutting over.

- After completing this cutover, the Start and Pause icons switch. If there's an issue after cutting over, click the Remote pause button and then the Local start button to move your workflow back to your source Airflow environment.

Starship operator

The Starship operator does not contain cutover functionality.

To cut over a DAG, pause the DAG in the source Airflow and unpause the DAG in Astro. Keep both Airflow environments open as you test and ensure that the cutover was successful.
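Whichever method you use, cutover depends on DAG IDs matching between the two environments. If you export the DAG ID lists from both Airflow UIs, a set comparison shows what is missing. This is an illustrative sketch: `compare_dag_ids` is a hypothetical helper, the DAG IDs are invented, and how you collect the ID lists is up to you.

```python
def compare_dag_ids(source_ids: list[str], astro_ids: list[str]) -> dict[str, list[str]]:
    """Report DAG IDs present in only one of the two environments."""
    source, astro = set(source_ids), set(astro_ids)
    return {
        "missing_on_astro": sorted(source - astro),
        "only_on_astro": sorted(astro - source),
    }


# Invented example lists.
source_dags = ["daily_sales", "hourly_sync", "weekly_report"]
astro_dags = ["daily_sales", "weekly_report", "astronomer_migration_dag"]
report = compare_dag_ids(source_dags, astro_dags)
print(report)
```

Resolve anything listed under missing_on_astro before pausing the corresponding DAG in your source Airflow environment.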

Optimize Deployment resource usage

Review DAG development features

Astro includes several features that enhance the Apache Airflow development experience, from DAG writing to testing. To make the most of these features, you might want to make adjustments to your existing DAG development workflows.

As you get started on Astro, review the list of features and changes that Astro brings to the Airflow development experience and consider how you want to implement these details in your development experience. See Write and run DAGs on Astro.

Monitor analytics

As you cut over DAGs, view Deployment metrics to get a sense of how many resources your Deployment is using. Use this information to adjust your worker queues and resource usage accordingly, or to tell when a DAG isn't running as expected.

Modify instance types or use worker queues

If your current worker type doesn't have the right amount of resources for your workflows, see Deployment settings to learn about configuring worker types on your Deployments.

You can additionally configure worker queues to assign each of your tasks to different worker instance types. View your Deployment metrics to help you determine what changes are required.

Enable DAG-only deploys

Deploying to Astro with DAG-only deploys enabled can make deploys faster in cases where you've only modified your dags directory. To enable the DAG-only deploy feature, see Deploy DAGs only.