Deploy an Astro project as an image

In a full deploy, the Astro CLI builds every file in your Astro project into a Docker image. This includes your Dockerfile, DAGs, plugins, and all Python and OS-level packages. The CLI then deploys the image to all Airflow components in a Deployment.

Use this document to learn how full deploys work and how to manually push your Astro project to a Deployment. For production environments, Astronomer recommends automating all code deploys with CI/CD. See Choose a CI/CD strategy.

See DAGs-only Deploys to learn more about how to deploy your DAGs and images separately.

Prerequisites

- The Astro CLI. If you're using an Apple M1 system with Astro Runtime 6.0.4 or later for local development, you must install Astro CLI 1.4.0 or later to deploy to Astro.

- An Astro Workspace with at least one Deployment.

- An Astro project.

- Docker or Podman.
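The Apple M1 requirement above only applies when two conditions hold at once. As a minimal sketch, the following Python helper encodes that rule, assuming plain dotted version strings such as 1.4.0 (for example, taken from the output of astro version). The function names parse_version and cli_meets_requirement are hypothetical and are not part of the Astro CLI.

```python
def parse_version(version: str) -> tuple:
    """Turn a dotted version string such as "1.4.0" or "v1.4.0" into a comparable tuple."""
    return tuple(int(part) for part in version.strip().lstrip("v").split("."))


def cli_meets_requirement(cli_version: str, runtime_version: str, is_apple_m1: bool) -> bool:
    """Hypothetical check: on Apple M1 with Runtime 6.0.4 or later, the CLI must be 1.4.0 or later."""
    if is_apple_m1 and parse_version(runtime_version) >= (6, 0, 4):
        return parse_version(cli_version) >= (1, 4, 0)
    # Outside that combination, this particular rule sets no minimum CLI version.
    return True


if __name__ == "__main__":
    print(cli_meets_requirement("1.3.2", "6.0.4", is_apple_m1=True))  # False
    print(cli_meets_requirement("1.4.0", "7.1.0", is_apple_m1=True))  # True
```

Tuple comparison keeps the logic simple; version strings with suffixes would need extra parsing that this sketch does not attempt.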

Step 1: Authenticate to Astro

Run the following command to authenticate to Astro:

astro login

After running this command, you are prompted to open your web browser and log in to the Astro UI. After you complete this login, you are automatically authenticated to the CLI.

If you have API credentials set as OS-level environment variables on your local machine, you can deploy directly to Astro without needing to manually authenticate. This setup is required for automating code deploys with CI/CD.
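In a CI/CD pipeline, a deploy script typically checks for credentials before it calls the CLI. The following sketch assumes your API token is exposed in an environment variable named ASTRO_API_TOKEN; confirm the variable name your CLI version reads before relying on it. It also assumes that passing a Deployment ID to astro deploy selects that Deployment without the interactive prompt. The helper names and the dep-123 ID are hypothetical.

```python
from __future__ import annotations

import os
import subprocess


def build_deploy_command(deployment_id: str | None = None) -> list[str]:
    """Return the astro deploy argv; with no ID, the CLI prompts you to pick a Deployment."""
    command = ["astro", "deploy"]
    if deployment_id:
        # Assumption: a positional Deployment ID skips the interactive picker.
        command.append(deployment_id)
    return command


def deploy_from_ci(deployment_id: str, env: dict[str, str] | None = None, dry_run: bool = True) -> list[str]:
    """Fail fast when no API token is present, then optionally run the deploy."""
    env = dict(os.environ if env is None else env)
    # Assumption: the CLI authenticates from ASTRO_API_TOKEN, so astro login is not needed.
    if not env.get("ASTRO_API_TOKEN"):
        raise RuntimeError("ASTRO_API_TOKEN is not set; CI cannot authenticate to Astro")
    command = build_deploy_command(deployment_id)
    if not dry_run:
        subprocess.run(command, env=env, check=True)
    return command
```

With dry_run left at True, the function only returns the command, which makes it easy to check the script's behavior before it touches a real Deployment.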

Step 2: Push your Astro project to an Astro Deployment

To deploy your Astro project, run:

astro deploy

This command returns a list of Deployments available in your Workspace and prompts you to pick one.

After you select a Deployment, the CLI parses your DAGs and runs a suite of pytests to ensure that they don't contain basic errors. This testing process is equivalent to running astro dev parse and astro dev pytest in a local Airflow environment. If any of your DAGs fail this testing process, the deploy to Astro also fails. To force a deploy even if your project has errors, you can run astro deploy --force. For more information about using pytests, see Troubleshoot your local Airflow environment and Testing Airflow DAGs.
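The checks described above can be run yourself before a deploy. This sketch runs astro dev parse and astro dev pytest, stops at the first failure the same way a failed check fails astro deploy, and only continues past failures when you opt into --force. With force=True the checks still run so you can see their output, but their failures don't block the deploy. The runner parameter is a hypothetical hook that lets the logic be exercised without a real CLI; in practice you would pass subprocess_runner.

```python
from __future__ import annotations

import subprocess
from typing import Callable

# Local equivalents of the checks that astro deploy runs after you select a Deployment.
PRE_DEPLOY_CHECKS = [
    ["astro", "dev", "parse"],
    ["astro", "dev", "pytest"],
]


def subprocess_runner(command: list[str]) -> int:
    """Run a CLI command and return its exit code."""
    return subprocess.run(command).returncode


def deploy_with_checks(runner: Callable[[list[str]], int], force: bool = False) -> int:
    """Run local checks, then deploy; a failed check stops the deploy unless force is True."""
    for check in PRE_DEPLOY_CHECKS:
        code = runner(check)
        if code != 0 and not force:
            return code  # mirror astro deploy: a failed check means no deploy
    deploy = ["astro", "deploy", "--force"] if force else ["astro", "deploy"]
    return runner(deploy)
```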

If your code passes the testing phase, the Astro CLI deploys your project in two separate, simultaneous processes:

- The Astro CLI uploads your dags directory to Astronomer-hosted blob storage. Your Deployment downloads the DAGs from the blob storage and applies the code to all of its running Airflow containers.

- The Astro CLI builds all other project files into a Docker image and deploys this to an Astronomer-hosted Docker registry. The Deployment then applies the image to all of its running Airflow containers.

See What happens during a project deploy for a more detailed description of how the Astro CLI and Astro work together to deploy your code.

If you use Docker Desktop, ensure that the Use containerd for pulling and storing images setting is turned off. Otherwise, you might receive errors when you run astro deploy such as:

Push access denied, repository does not exist or may require authorization: server message: insufficient_scope: authorization failed

# or

Unable to find image 'barren-ionization-0185/airflow:latest' locally

Error response from daemon: pull access denied for barren-ionization-0185/airflow, repository does not exist or may require 'docker login'

Step 3: Validate your changes

When you start a code deploy to Astro, the status of the Deployment is DEPLOYING until Astro confirms that the underlying Airflow components are running the latest version of your code. During this time, you can hover over the status indicator to determine whether your entire Astro project or only your DAGs were deployed.

When the deploy completes, the Docker Image field in the Astro UI is updated according to the type of deploy you completed.

- The Docker Image field displays a unique identifier generated by a Continuous Integration (CI) tool or a timestamp generated by the Astro CLI after you complete an image deploy.

To confirm a deploy was successful, verify that the running versions of your Docker image and DAG bundle have been updated.

- In the Astro UI, select a Workspace and then select a Deployment.

- Review the Docker Image and DAG bundle version fields to determine which version of your code the Deployment is running.

What happens during a project deploy

Read this section for a more detailed description of the project deploy process.

Your Deployment uses the following components to process your code deploy:

- A proprietary operator for deploying Docker images to your Airflow containers

- A DAG downloader sidecar attached to each Airflow component container

- A blob storage container hosted by Astronomer

When you run astro deploy, the Astro CLI deploys all non-DAG files in your project as an image to an Astronomer-hosted Docker registry. The proprietary operator pulls the image from the registry, then updates the running image for all Airflow containers in your Deployment. DAG changes are deployed through a separate and simultaneous process:

- The Astro CLI uploads your dags folder to the Deployment's blob storage.

- The DAG downloader sidecars download the new DAGs from blob storage.

This process is different if your Deployment has DAG-only deploys disabled, which is the default setting for all Astro Hybrid Deployments. See Enable/disable DAG-only deploys on a Deployment for how the process changes when DAG-only deploys are disabled.

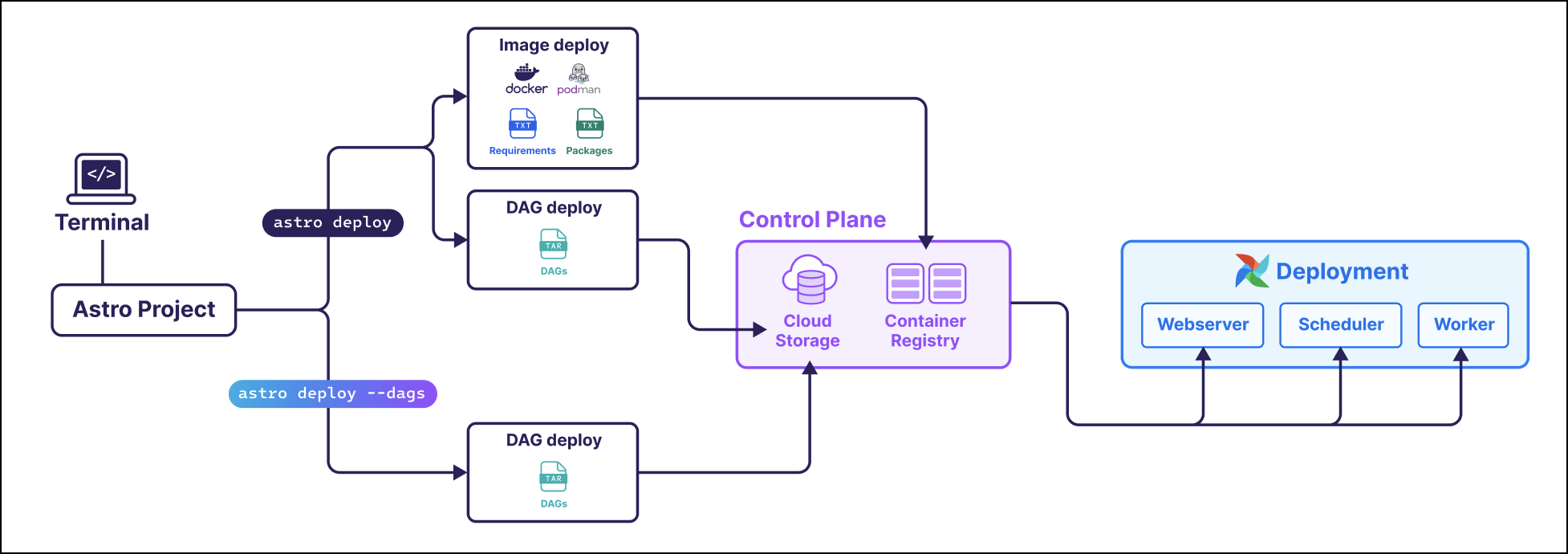

Use the following diagram to understand the relationship between Astronomer, your local machine, and your Deployment:

How Deployments handle code deploys

After a Deployment receives the deploy, Astro gracefully terminates all of its containers except for the Airflow webserver and any Celery workers or Kubernetes worker Pods that are currently running tasks. All new workers run your new code.

If you deploy code to a Deployment that is running a previous version of your code, then the following happens:

- Tasks that are running continue to run on existing workers and are not interrupted unless the task does not complete within 24 hours of the code deploy.

- One or more new workers are created alongside your existing workers and immediately start executing scheduled tasks based on your latest code.

These new workers execute downstream tasks of DAG runs that are in progress. For example, if you deploy to Astro when Task A of your DAG is running, Task A continues to run on an old Celery worker. If Task B and Task C are downstream of Task A, they are both scheduled on new Celery workers running your latest code.

This means that DAG runs could fail because downstream tasks run a different version of your code than their upstream tasks. DAG runs that fail this way need to be fully restarted from the Airflow UI so that all tasks are executed based on the same source code.

Astronomer sets a grace period of 24 hours for all workers to allow running tasks to continue executing. This grace period is not configurable. If a task does not complete within 24 hours, its worker is terminated. Airflow marks the task as a zombie, and the task is retried according to its retry policy. This grace period ensures that Astronomer can reliably upgrade and maintain Astro as a service.
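The 24-hour grace period is counted from the code deploy, not from when the task started. The following sketch, with hypothetical function names and example times, computes when an old worker is terminated and whether a task that is already running at deploy time is expected to outlive it.

```python
from datetime import datetime, timedelta, timezone

GRACE_PERIOD = timedelta(hours=24)  # fixed on Astro and not configurable


def worker_termination_time(deploy_time: datetime) -> datetime:
    """When an old worker is terminated if its tasks have not finished."""
    return deploy_time + GRACE_PERIOD


def will_be_terminated(task_start: datetime, expected_duration: timedelta, deploy_time: datetime) -> bool:
    """For a task already running at deploy time: will it still be running when its worker stops?"""
    expected_end = task_start + expected_duration
    return expected_end > worker_termination_time(deploy_time)


if __name__ == "__main__":
    start = datetime(2024, 5, 1, 8, 0, tzinfo=timezone.utc)
    deploy = datetime(2024, 5, 1, 10, 0, tzinfo=timezone.utc)
    print(worker_termination_time(deploy))  # 2024-05-02 10:00:00+00:00
    print(will_be_terminated(start, timedelta(hours=30), deploy))  # True
    print(will_be_terminated(start, timedelta(hours=20), deploy))  # False
```

In this example, the 30-hour task would end at 14:00 the next day, four hours after its worker is terminated, so it would become a zombie and retry.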

If you want to force long-running tasks to terminate sooner than 24 hours, specify an execution_timeout in your DAG's task definition.
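A minimal sketch of the execution_timeout approach, assuming you set it once for every task through a DAG's default_args dictionary. The six-hour value and the check_timeout helper are hypothetical; execution_timeout itself is a standard Airflow operator argument that takes a timedelta. Because a task that is running at deploy time started before the deploy, any timeout under 24 hours ends that attempt before the grace period does.

```python
from datetime import timedelta

ASTRO_GRACE_PERIOD = timedelta(hours=24)

# Hypothetical defaults applied to every task in a DAG.
default_args = {
    "execution_timeout": timedelta(hours=6),  # Airflow stops a task attempt that runs longer than this
    "retries": 1,
}


def check_timeout(args: dict) -> timedelta:
    """Reject a missing timeout or one that would not fire before the 24-hour grace period ends."""
    timeout = args.get("execution_timeout")
    if timeout is None or timeout >= ASTRO_GRACE_PERIOD:
        raise ValueError("set execution_timeout to less than 24 hours")
    return timeout
```

In a DAG file, pass the dictionary as DAG(..., default_args=default_args), or set execution_timeout=timedelta(hours=6) on an individual operator.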

Alternatively, if you find you have many long-running tasks that exceed 24 hours, you might want to refactor your DAGs using deferrable operators, as Astro does not guarantee reliability for tasks with a duration that exceeds 24 hours.

Deploy a prebuilt Docker image

By default, running astro deploy with the Astro CLI builds your Astro project into a Docker image and deploys it to Astro. In some cases, you might want to skip the build step and deploy a prebuilt Docker image instead.

Deploying a prebuilt Docker image allows you to:

- Test a single Docker image across Deployments instead of rebuilding it each time.

- Reduce the time it takes to deploy. If your Astro project has a number of packages that take a long time to install, it can be more efficient to build it separately.

- Specify additional mounts and arguments in your project, which is required for setups such as installing Python packages from private sources.

To deploy your Astro project as a prebuilt Docker image:

- Run docker build from an Astro project directory or specify the command in a CI/CD pipeline. This Docker image must be based on Astro Runtime and be available in a local Docker registry. If you run this command on an Apple M1 computer or on a computer with an ARM64 processor, you must specify --platform=linux/amd64, or else the deploy will fail. Astro Deployments require an AMD64-based image and do not support ARM64 architecture.

- (Optional) Test your Docker image in a local Airflow environment by adding the --image-name <image-name> flag to any of the following commands:

  - astro dev start
  - astro dev restart
  - astro dev parse
  - astro dev pytest

- Run astro deploy --image-name <image-name> or specify the command in a CI/CD pipeline.

If you have DAG-only deploys enabled, you can also use the --image flag to deploy a prebuilt image without also deploying your DAGs folder. Use astro deploy --image-name <image-name> --image.
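The prebuilt-image workflow above can be scripted. The following sketch only builds the command list for each step; my-astro-image:1.0 is a hypothetical image name and the function names are illustrative. It always passes --platform=linux/amd64, which is required on ARM64 hosts such as Apple M1 and harmless on AMD64 hosts.

```python
from __future__ import annotations

LOCAL_TEST_COMMANDS = ("start", "restart", "parse", "pytest")


def build_image_command(image_name: str) -> list[str]:
    """docker build run from the Astro project directory (build context ".")."""
    # Astro requires AMD64 images; the flag is required on ARM64 hosts such as Apple M1
    # and harmless on AMD64 hosts, so this sketch always sets it.
    return ["docker", "build", "--platform=linux/amd64", "-t", image_name, "."]


def local_test_command(subcommand: str, image_name: str) -> list[str]:
    """Optional step: run an astro dev command against the prebuilt image."""
    if subcommand not in LOCAL_TEST_COMMANDS:
        raise ValueError("--image-name applies only to astro dev start, restart, parse, or pytest")
    return ["astro", "dev", subcommand, "--image-name", image_name]


def deploy_image_command(image_name: str, image_only: bool = False) -> list[str]:
    """Deploy the prebuilt image; image_only adds --image, which requires DAG-only deploys."""
    command = ["astro", "deploy", "--image-name", image_name]
    if image_only:
        command.append("--image")
    return command
```

You could pass each list to subprocess.run(..., check=True) in order: build, optionally test, then deploy.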

For more information about the --image-name option, see the CLI command reference.

If you build an AMD64-based image and run astro deploy from an Apple M1 computer, you might see a warning in your terminal. You can ignore the warning.

WARNING: The requested image's platform (linux/amd64) does not match the detected host platform (linux/arm64/v8) and no specific platform was requested