Debug DAGs

This guide explains how to identify and resolve common Airflow DAG issues. It also includes resources to try out if you can't find a solution to an Airflow issue. While the troubleshooting steps focus on local development, much of the information also applies to running Airflow in production.

Consider implementing systematic testing of your DAGs to prevent common issues. See the Test Airflow DAGs guide.

There are multiple resources for learning about this topic. See also:

- Webinar: Debugging your Airflow DAGs.

- Astronomer Academy: Airflow: Debug DAGs.

Assumed knowledge

To get the most out of this guide, you should have an understanding of:

- Basic Airflow concepts. See the Get started with Airflow tutorial.

- Basic knowledge of Airflow DAGs. See Introduction to Airflow DAGs.

General Airflow debugging approach

To give yourself the best possible chance of fixing a bug in Airflow, contextualize the issue by asking yourself the following questions:

- Is the problem with Airflow, or is it with an external system connected to Airflow? Test if the action can be completed in the external system without using Airflow.

- What is the state of your Airflow components? Inspect the logs of each component and restart your Airflow environment if necessary.

- Does Airflow have access to all relevant files? This is especially relevant when running Airflow in Docker or when using the Astro CLI.

- Are your Airflow connections set up correctly with correct credentials? See Troubleshooting connections.

- Is the issue with all DAGs, or is it isolated to one DAG?

- Can you collect the relevant logs? For more information on log location and configuration, see the Airflow logging guide.

- Which versions of Airflow and Airflow providers are you using? Make sure that you're using the correct version of the Airflow documentation.

- Can you reproduce the problem in a new local Airflow instance using the Astro CLI?

Answering these questions will help you narrow down what kind of issue you're dealing with and inform your next steps.

You can debug your DAG code with IDE debugging tools using the dag.test() method. See Debug interactively with dag.test().

Airflow is not starting on the Astro CLI

The 3 most common ways to run Airflow locally are using the Astro CLI, running a standalone instance, or running Airflow in Docker. This guide focuses on troubleshooting the Astro CLI, which is an open source tool for quickly running Airflow on a local machine.

The most common issues related to the Astro CLI are:

- The Astro CLI was not correctly installed. Run astro version to confirm that you can successfully run Astro CLI commands. If a newer version is available, consider upgrading.

- The Docker daemon is not running. Make sure to start Docker Desktop before starting the Astro CLI.

- There are errors caused by custom commands in the Dockerfile, or dependency conflicts with the packages in packages.txt and requirements.txt.

- Airflow components are in a crash loop because of errors in custom plugins or XCom backends. View scheduler logs using astro dev logs -s to troubleshoot.

To troubleshoot infrastructure issues when running Airflow on other platforms, for example in Docker, on Kubernetes using the Helm chart, or on managed services, refer to the relevant documentation and customer support.

You can learn more about testing and troubleshooting locally with the Astro CLI in the Astro documentation.

Common DAG issues

This section covers common issues related to DAG code that you might encounter when developing.

DAGs don't appear in the Airflow UI

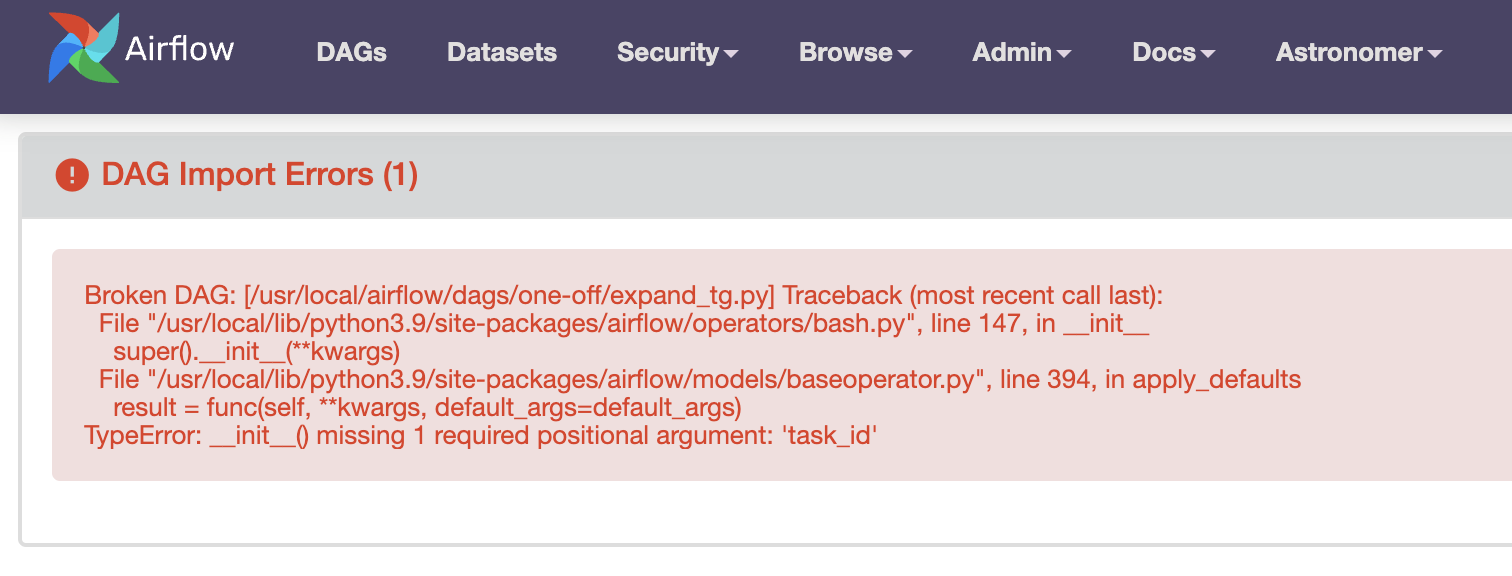

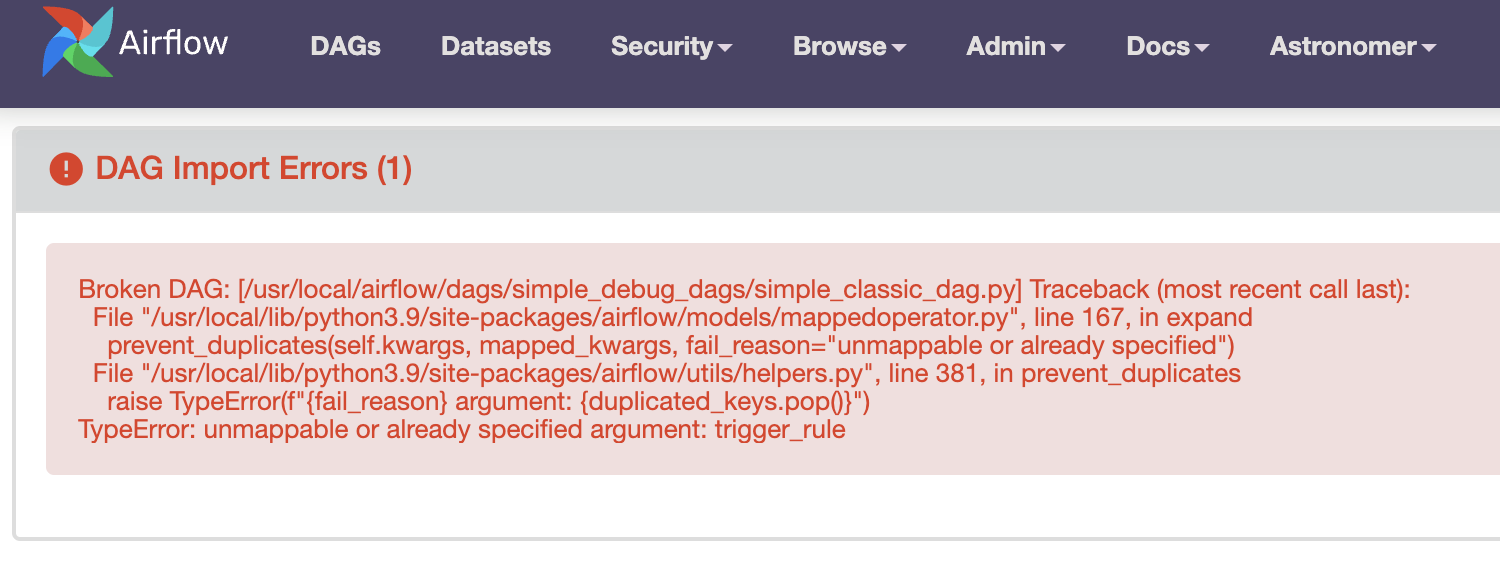

If a DAG isn't appearing in the Airflow UI, it's typically because Airflow is unable to parse the DAG. If this is the case, you'll see an Import Error in the Airflow UI.

The message in the import error can help you troubleshoot and resolve the issue.

To view import errors in your terminal, run astro dev run dags list-import-errors with the Astro CLI, or run airflow dags list-import-errors with the Airflow CLI.

If you don't see an import error message but your DAGs still don't appear in the UI, try these debugging steps:

-

Make sure all of your DAG files are located in the

dagsfolder. -

Airflow scans the

dagsfolder for new DAGs everydag_dir_list_interval, which defaults to 5 minutes but can be modified. You might have to wait until this interval has passed before a new DAG appears in the Airflow UI or restart your Airflow environment. -

Ensure that you have permission to see the DAGs, and that the permissions on the DAG file are correct.

-

Run

astro dev run dags listwith the Astro CLI orairflow dags listwith the Airflow CLI to make sure that Airflow has registered the DAG in the metadata database. If the DAG appears in the list but not in the UI, try restarting the Airflow webserver. -

Try restarting the Airflow scheduler with

astro dev restart. -

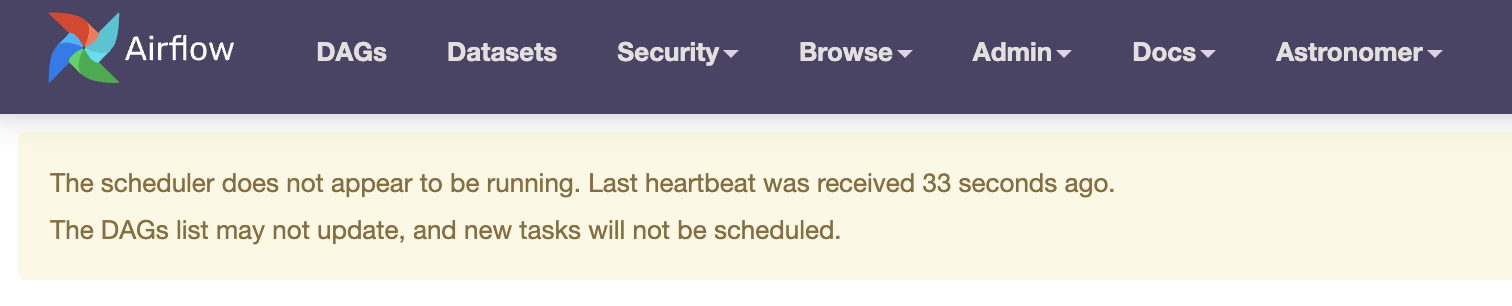

If you see an error in the Airflow UI indicating that the scheduler is not running, check the scheduler logs to see if an error in a DAG file is causing the scheduler to crash. If you are using the Astro CLI, run

astro dev logs -sand then try restarting.
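Config options such as dag_dir_list_interval can also be set through environment variables, which is convenient in an Astro project's .env file. Airflow derives the variable name as AIRFLOW__{SECTION}__{KEY}. The following minimal Python sketch builds such names; airflow_config_env_var is a hypothetical helper, it assumes dag_dir_list_interval lives in the [scheduler] section as it does in Airflow 2.x, and the 30-second value is only an example.

```python
def airflow_config_env_var(section: str, key: str) -> str:
    """Return the environment variable name that overrides [section] key in Airflow's config."""
    # Airflow joins the upper-cased section and key with double underscores.
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


# Example: list the dags folder every 30 seconds instead of the 300-second default.
print(f"{airflow_config_env_var('scheduler', 'dag_dir_list_interval')}=30")
# prints: AIRFLOW__SCHEDULER__DAG_DIR_LIST_INTERVAL=30
```

The same naming pattern applies to other options in this guide, for example max_map_length in [core] or log_fetch_timeout_sec in [webserver].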

At the code level, ensure that each DAG:

- Has a unique dag_id.

- Is defined in a file that contains both the word airflow and the word dag (case-insensitive). With the default dag_discovery_safe_mode setting, the scheduler only parses files fulfilling this condition.

- Is called when defined with the @dag decorator. See also Introduction to Airflow decorators.
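Two of these conditions can be checked before Airflow parses your files. The following stdlib-only Python sketch is a hypothetical pre-flight script, not an Airflow or Astro CLI feature. It mirrors the default discovery heuristic (both strings present, case-insensitive) and only detects dag_id values passed as string literals through the dag_id= keyword; DAG IDs passed positionally, taken from an @dag-decorated function's name, generated dynamically, or excluded through .airflowignore are not handled.

```python
import ast
from collections import defaultdict
from pathlib import Path


def passes_safe_mode_heuristic(source: str) -> bool:
    """Mirror Airflow's default discovery check: 'airflow' and 'dag' must both appear (case-insensitive)."""
    lowered = source.lower()
    return "airflow" in lowered and "dag" in lowered


def literal_dag_ids(source: str) -> list[str]:
    """Collect string literals passed as dag_id=..., e.g. DAG(dag_id="etl") or @dag(dag_id="etl")."""
    ids = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            for kw in node.keywords:
                if kw.arg == "dag_id" and isinstance(kw.value, ast.Constant) and isinstance(kw.value.value, str):
                    ids.append(kw.value.value)
    return ids


def check_dags_folder(folder: str) -> tuple[list[str], dict[str, list[str]], list[str]]:
    """Return (files the heuristic skips, dag_ids defined more than once, files with syntax errors)."""
    skipped, broken = [], []
    locations = defaultdict(list)
    for path in sorted(Path(folder).rglob("*.py")):
        source = path.read_text(encoding="utf-8")
        if not passes_safe_mode_heuristic(source):
            skipped.append(str(path))
            continue
        try:
            dag_ids = literal_dag_ids(source)
        except SyntaxError:
            broken.append(str(path))  # Airflow would report this file as an import error
            continue
        for dag_id in dag_ids:
            locations[dag_id].append(str(path))
    duplicates = {dag_id: paths for dag_id, paths in locations.items() if len(paths) > 1}
    return skipped, duplicates, broken
```

From your project root, calling check_dags_folder("dags") lists helper modules that Airflow will silently skip, which is expected for non-DAG files but worth checking when a DAG file is missing from the UI.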

Note that in Airflow 2.10+, you can configure an Airflow listener as a plugin to run any Python code, either when a new import error appears (on_new_dag_import_error) or when the dag processor finds a known import error (on_existing_dag_import_error). See Airflow listeners for more information.

Import errors due to dependency conflicts

A frequent cause of DAG import errors is not having the necessary packages installed in your Airflow environment. You might be missing provider packages that are required for using specific operators or hooks, or you might be missing Python packages used in Airflow tasks.

In an Astro project, you can install OS-level packages by adding them to your packages.txt file. You can install Python-level packages, such as provider packages, by adding them to your requirements.txt file. If you need to install packages using a specific package manager, consider doing so by adding a bash command to your Dockerfile.

To prevent compatibility issues when new packages are released, Astronomer recommends pinning a package version to your project. For example, adding astronomer-providers[all]==1.14.0 to your requirements.txt file ensures that future releases of astronomer-providers don't cause compatibility issues. If no version is pinned, the latest version available at build time is installed.

If you are using the Astro CLI, packages are installed in the scheduler Docker container. You can confirm that a package is installed correctly by running:

astro dev bash --scheduler "pip freeze | grep <package-name>"
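If you save the complete pip freeze output from the scheduler container, you can compare it with your requirements.txt pins in one pass. The following stdlib-only Python sketch is a hypothetical helper, not part of the Astro CLI. It treats only name==version lines as pinned (so >=, ~=, and option lines such as -r are reported as unpinned), and it compares version strings literally rather than with full PEP 440 rules.

```python
import re

# "name==version" or "name[extras]==version"; any other specifier is treated as unpinned.
PIN = re.compile(r"^([A-Za-z0-9_.\-]+)\s*(\[[^\]]*\])?\s*==\s*([^\s;#]+)")


def normalize(name: str) -> str:
    """Normalize a distribution name so that Foo_Bar, foo-bar and foo.bar compare equal."""
    return re.sub(r"[-_.]+", "-", name).lower()


def audit_requirements(requirements: str, freeze: str) -> dict[str, list[str]]:
    """Compare requirements.txt text with `pip freeze` output."""
    installed = {}
    for line in freeze.splitlines():
        match = PIN.match(line.strip())
        if match:
            installed[normalize(match.group(1))] = match.group(3)

    unpinned, missing, mismatched = [], [], []
    for raw in requirements.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        match = PIN.match(line)
        if not match:
            unpinned.append(line)
            continue
        name, wanted = normalize(match.group(1)), match.group(3)
        if name not in installed:
            missing.append(line)
        elif installed[name] != wanted:
            mismatched.append(f"{line} (installed: {installed[name]})")
    return {"unpinned": unpinned, "missing": missing, "mismatched": mismatched}


report = audit_requirements(
    "astronomer-providers[all]==1.14.0\npandas\nrequests==2.31.0\n",
    "astronomer-providers==1.14.0\npandas==2.1.0\nrequests==2.32.3\n",
)
print(report)
```

In this made-up sample, pandas is reported as unpinned and requests as mismatched, while the pinned astronomer-providers entry matches what is installed.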

If you have conflicting package versions or need to run multiple Python versions, you can run tasks in different environments using a few different operators:

- KubernetesPodOperator: Runs a task in a separate Kubernetes Pod.

- ExternalPythonOperator: Runs a task in a predefined virtual environment.

- PythonVirtualenvOperator: Runs a task in a temporary virtual environment.

If many Airflow tasks share a set of alternate package and version requirements, a common pattern is to run them in two or more separate Airflow deployments.

DAGs are not running correctly

If your DAGs are either not running or running differently than you intended, consider checking the following common causes:

-

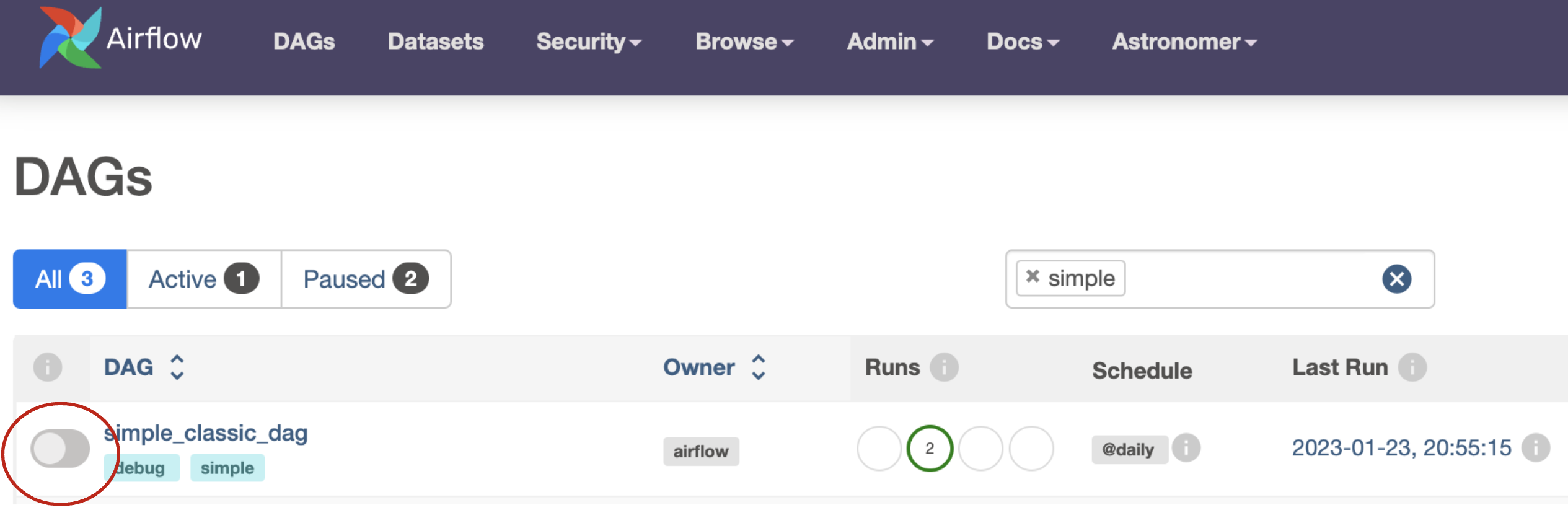

DAGs need to be unpaused in order to run on their schedule. You can unpause a DAG by clicking the toggle on the left side of the Airflow UI or by using the Airflow CLI.

If you want all DAGs unpaused by default, you can set

dags_are_paused_at_creation=Falsein your Airflow config. If you do this, remember to setcatchup=Falsein your DAGs to prevent automatic backfilling of DAG runs. Paused DAGs are unpaused automatically when you manually trigger them. -

Double check that each DAG has a unique

dag_id. If two DAGs with the same id are present in one Airflow instance the scheduler will pick one at random every 30 seconds to display. -

Make sure your DAG has a

start_datein the past. A DAG with astart_datein the future will result in a successful DAG run with no task runs. Do not usedatetime.now()as astart_date. -

Test the DAG using

astro dev dags test <dag_id>. With the Airflow CLI, runairflow dags test <dag_id>. -

If no DAGs are running, check the state of your scheduler using

astro dev logs -s. -

If too many runs of your DAG are being scheduled after you unpause it, you most likely need to set

catchup=Falsein your DAG's parameters.
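The effect of start_date and catchup on scheduled runs can be illustrated with a simplified model of interval-based scheduling. This stdlib-only Python sketch assumes a fixed timedelta schedule such as one day, covers scheduled runs only (not manual triggers), and ignores cron expressions, custom timetables, and end_date; runs_due is a hypothetical helper, not an Airflow API.

```python
from datetime import datetime, timedelta, timezone


def runs_due(start_date: datetime, interval: timedelta, now: datetime, catchup: bool) -> int:
    """Count scheduled runs due at `now` in a simplified fixed-interval model.

    A run becomes due once its data interval [start, start + interval) has ended.
    With catchup=True every completed interval since start_date gets a run;
    with catchup=False only the most recent completed interval does.
    """
    if start_date + interval > now:
        return 0  # start_date in the future, or the first interval has not ended yet
    completed = (now - start_date) // interval
    return completed if catchup else 1


now = datetime(2024, 6, 1, 12, tzinfo=timezone.utc)
daily = timedelta(days=1)
fixed_start = datetime(2024, 5, 2, tzinfo=timezone.utc)

print(runs_due(fixed_start, daily, now, catchup=True))   # 30 runs created at once
print(runs_due(fixed_start, daily, now, catchup=False))  # 1
# start_date=datetime.now() moves forward on every parse, so the first
# interval never ends before "now" and no scheduled run ever becomes due.
print(runs_due(now, daily, now, catchup=True))           # 0
```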

If your DAG is running, but not on the schedule you expected, review the Schedule DAGs in Airflow guide. If you are using a custom timetable, ensure that the data interval for your DAG run does not precede the DAG start date.

Common task issues

This section covers common issues related to individual tasks you might encounter. If your entire DAG is not working, see the DAGs are not running correctly section above.

Tasks are not running correctly

It is possible for a DAG to start but its tasks to be stuck in various states or to not run in the desired order. If your tasks are not running as intended, try the following debugging methods:

- Double check that your DAG's start_date is in the past. A future start_date will result in a successful DAG run even though no tasks ran.

- If your tasks stay in a scheduled or queued state, ensure your scheduler is running properly. If needed, restart the scheduler or increase scheduler resources in your Airflow infrastructure.

- If your tasks have the depends_on_past parameter set to True, newly added tasks won't run until you set the state of their task instances in prior DAG runs.

- When running many instances of a task or DAG, be mindful of scaling parameters and configurations. Airflow has default settings that limit the number of concurrently running DAGs and tasks. See Scaling Airflow to optimize performance to learn more.

- If you are using task decorators and your tasks are not showing up in the Graph and Grid view, make sure you are calling your tasks. See also Introduction to Airflow decorators.

- Check your task dependencies and trigger rules. See Manage DAG and task dependencies in Airflow and Airflow trigger rules. Consider recreating your DAG structure with EmptyOperators to ensure that your dependencies are structured as expected.

- The task_queued_timeout parameter controls how long tasks can stay in the queued state before they are either retried or marked as failed. The default is 600 seconds.

- If you are using the CeleryExecutor in an Airflow version earlier than 2.6 and tasks get stuck in the queued state, consider turning on stalled_task_timeout.

Tasks are failing

Most task failure issues fall into one of 3 categories:

- Issues with operator parameter inputs.

- Issues within the operator.

- Issues in an external system.

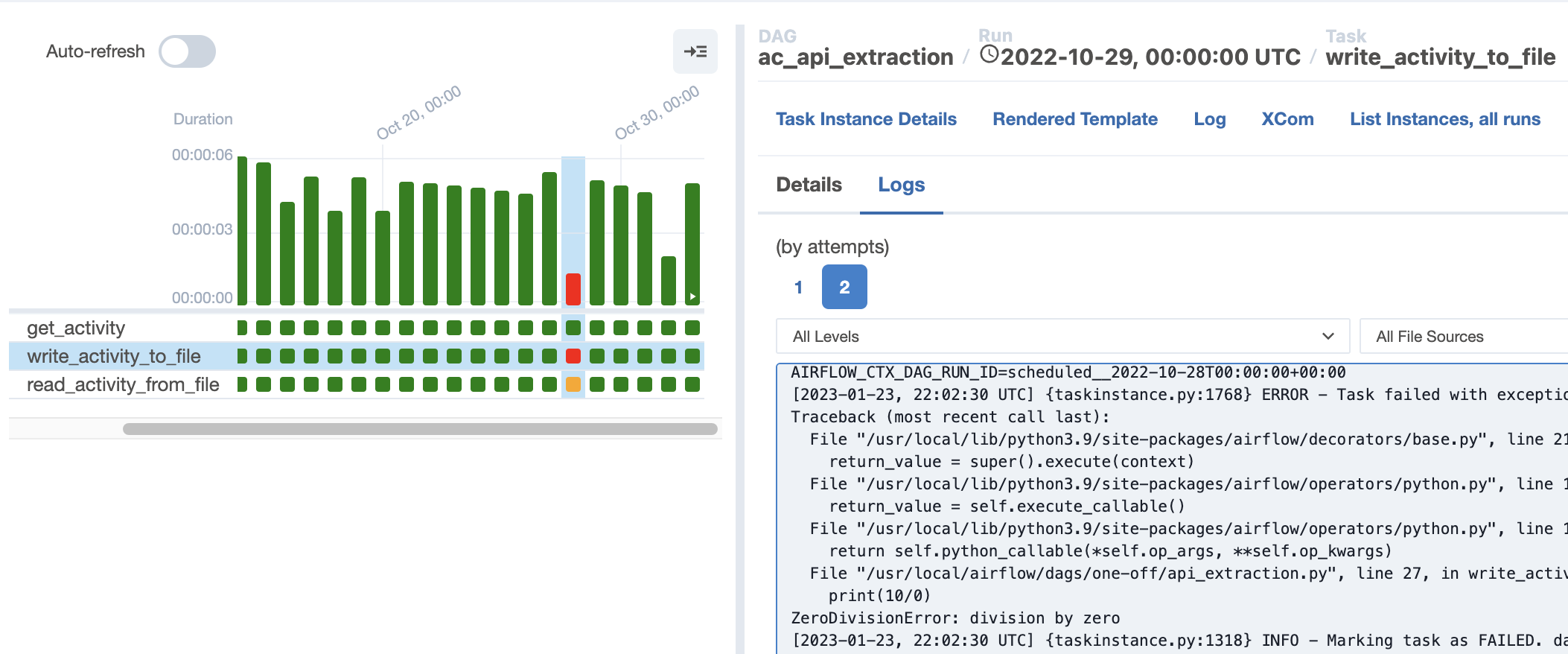

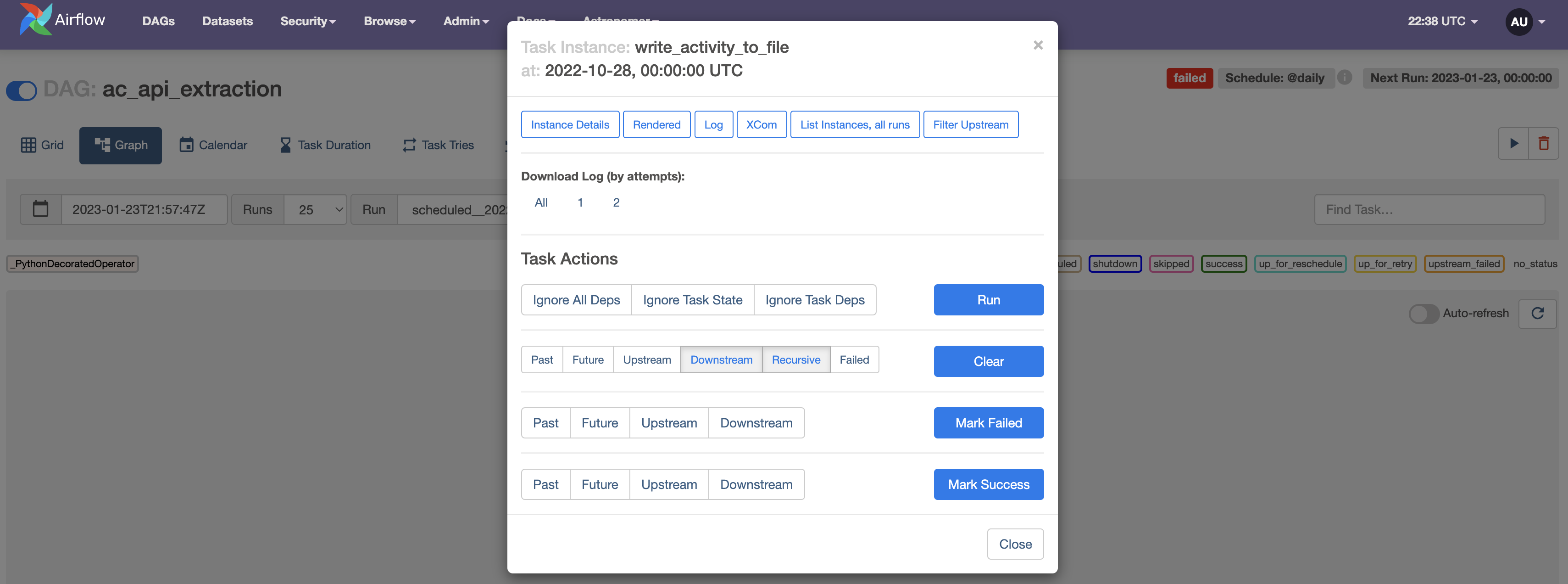

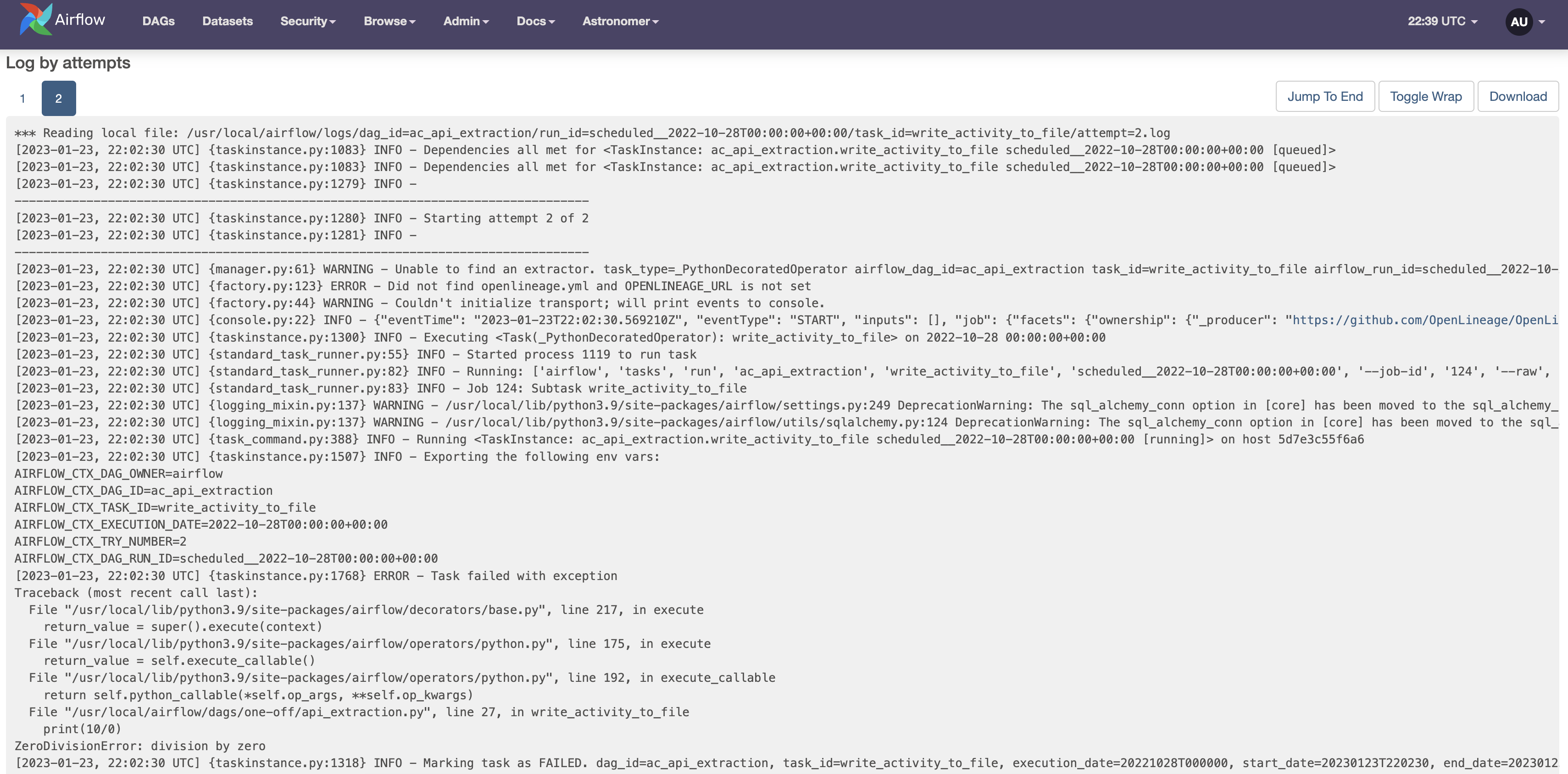

Failed tasks appear as red squares in the Grid view, where you can also directly access task logs.

Task logs can also be accessed from the Graph view and have plenty of configuration options. See Airflow Logging.

The task logs provide information about the error that caused the failure.

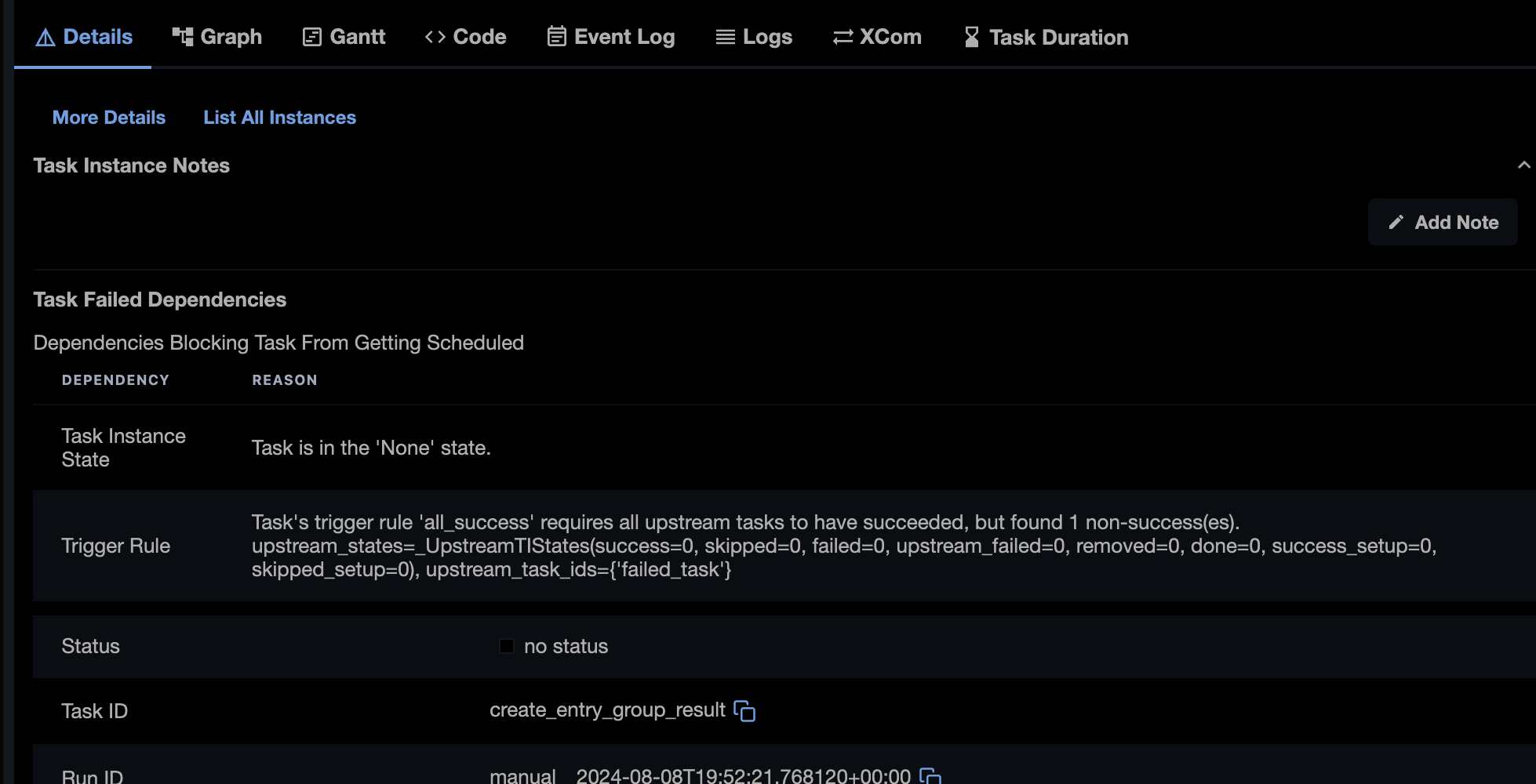

Starting with version 2.10, the Details tab displays information about dependencies causing a task to fail or not to run (Task Failed Dependencies). If available, this information is displayed for any task in a scheduled or None state.

To help identify and resolve task failures, you can set up error notifications. See Error Notifications in Airflow.

Task failures in newly developed DAGs with error messages such as Task exited with return code Negsignal.SIGKILL, or containing a -9 error code, are often caused by a lack of memory. Increase the resources for your scheduler, Celery workers, or task pods, depending on whether you're running the Local, Celery, or Kubernetes executor respectively.
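On POSIX systems, Python reports a process killed by signal N with the return code -N, which is why SIGKILL shows up as -9. The following stdlib-only Python sketch decodes such codes; describe_return_code is a hypothetical helper, and the demo assumes Linux or macOS, where a process can send SIGKILL to itself.

```python
import signal
import subprocess
import sys


def describe_return_code(code: int) -> str:
    """Explain a process return code as reported by Python on POSIX systems."""
    if code >= 0:
        return f"exited with status {code}"
    signum = -code  # a negative code -N means the process was killed by signal N
    try:
        name = signal.Signals(signum).name
    except ValueError:
        return f"killed by unknown signal {signum}"
    if signum == signal.SIGKILL:
        # SIGKILL cannot be caught; the Linux out-of-memory killer is a common sender.
        return f"killed by {name}, possibly out of memory"
    return f"killed by {name}"


if __name__ == "__main__":
    # Simulate a task process that is killed with SIGKILL.
    child = subprocess.run(
        [sys.executable, "-c", "import os, signal; os.kill(os.getpid(), signal.SIGKILL)"]
    )
    print(child.returncode, describe_return_code(child.returncode))
```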

After resolving your issue, you may want to rerun your DAGs or tasks. See Rerunning DAGs.

Issues with dynamically mapped tasks

Dynamic task mapping is a powerful feature that allows you to dynamically adjust the number of tasks at runtime based on changing input parameters. It is also possible to dynamically map over task groups.

Possible causes of issues when working with dynamically mapped tasks include:

- You did not provide a keyword argument to the .expand() function.

- When using .expand_kwargs(), you did not provide mapped parameters in the form of a list of dictionaries.

- You tried to map over an empty list, which causes the mapped task to be skipped.

- You exceeded the limit for how many mapped task instances you can create. This limit depends on the Airflow core config max_map_length and is 1024 by default.

- The number of mapped task instances of a specific task that can run in parallel across all runs of a given DAG depends on the task-level parameter max_active_tis_per_dag.

- Not all parameters are mappable. If a parameter does not support mapping, you will receive an import error like in the screenshot below.

When creating complex patterns with dynamically mapped tasks, we recommend first creating your DAG structure using EmptyOperators or decorated Python operators. Once the structure works as intended, you can start adding your tasks. Refer to the Create dynamic Airflow tasks guide for code examples.

It is very common that the output of an upstream operator is in a slightly different format than what you need to map over. Use .map() to transform elements in a list using a Python function.
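Because the callable you pass to .map() is ordinary Python applied to one element at a time, you can develop it, and check the shape of its result, without running Airflow. In the stdlib-only sketch below, to_copy_kwargs and check_expand_kwargs_input are hypothetical helpers, the S3-style keys are made-up sample data, and the 1024 limit assumes the default max_map_length.

```python
from typing import Any

MAX_MAP_LENGTH = 1024  # default [core] max_map_length; change it if you overrode the setting


def to_copy_kwargs(key: str) -> dict[str, Any]:
    """Hypothetical transform: turn one upstream key into keyword arguments for a mapped task."""
    return {"source_key": key, "dest_key": key.replace("raw/", "processed/", 1)}


def check_expand_kwargs_input(value: Any, max_map_length: int = MAX_MAP_LENGTH) -> list[str]:
    """Return problems that would break or skip .expand_kwargs() mapping over `value`."""
    if not isinstance(value, list):
        return [f"expected a list, got {type(value).__name__}"]
    problems = []
    if not value:
        problems.append("empty list: the mapped task would be skipped")
    if len(value) > max_map_length:
        problems.append(f"{len(value)} elements exceeds max_map_length={max_map_length}")
    for i, item in enumerate(value):
        if not isinstance(item, dict):
            problems.append(f"element {i} is a {type(item).__name__}, not a dict")
    return problems


upstream_keys = ["raw/2024-06-01.csv", "raw/2024-06-02.csv"]
# .map() calls the function once per element; the built-in map() reproduces that here.
mapped = list(map(to_copy_kwargs, upstream_keys))
print(mapped)
print(check_expand_kwargs_input(mapped))  # [] means the shape looks right
```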

Missing Logs

When you check your task logs to debug a failure, you may not see any logs. On the log page in the Airflow UI, you may see a spinning wheel, or you may just see a blank file.

Generally, logs fail to appear when a process dies in your scheduler or worker and communication is lost. The following are some debugging steps you can try:

- Try rerunning the task by clearing the task instance to see if the logs appear during the rerun.

- Increase your log_fetch_timeout_sec parameter to greater than the 5 second default. This parameter controls how long the webserver waits for the initial handshake when fetching logs from the worker machines, and having extra time here can sometimes resolve issues.

- Increase the resources available to your workers (if using the Celery executor) or scheduler (if using the local executor).

- If you're using the Kubernetes executor and a task fails very quickly (in less than 15 seconds), the pod running the task spins down before the webserver has a chance to collect the logs from the pod. If possible, try building in some wait time to your task depending on which operator you're using. If that isn't possible, try to diagnose what could be causing a near-immediate failure in your task. This is often related to either lack of resources or an error in the task configuration.

- Increase the CPU or memory for the task.

- Ensure that your logs are retained until you need to access them. If you are an Astronomer customer, see our documentation on how to View logs.

- Check your scheduler and webserver logs for any errors that might indicate why your task logs aren't appearing.

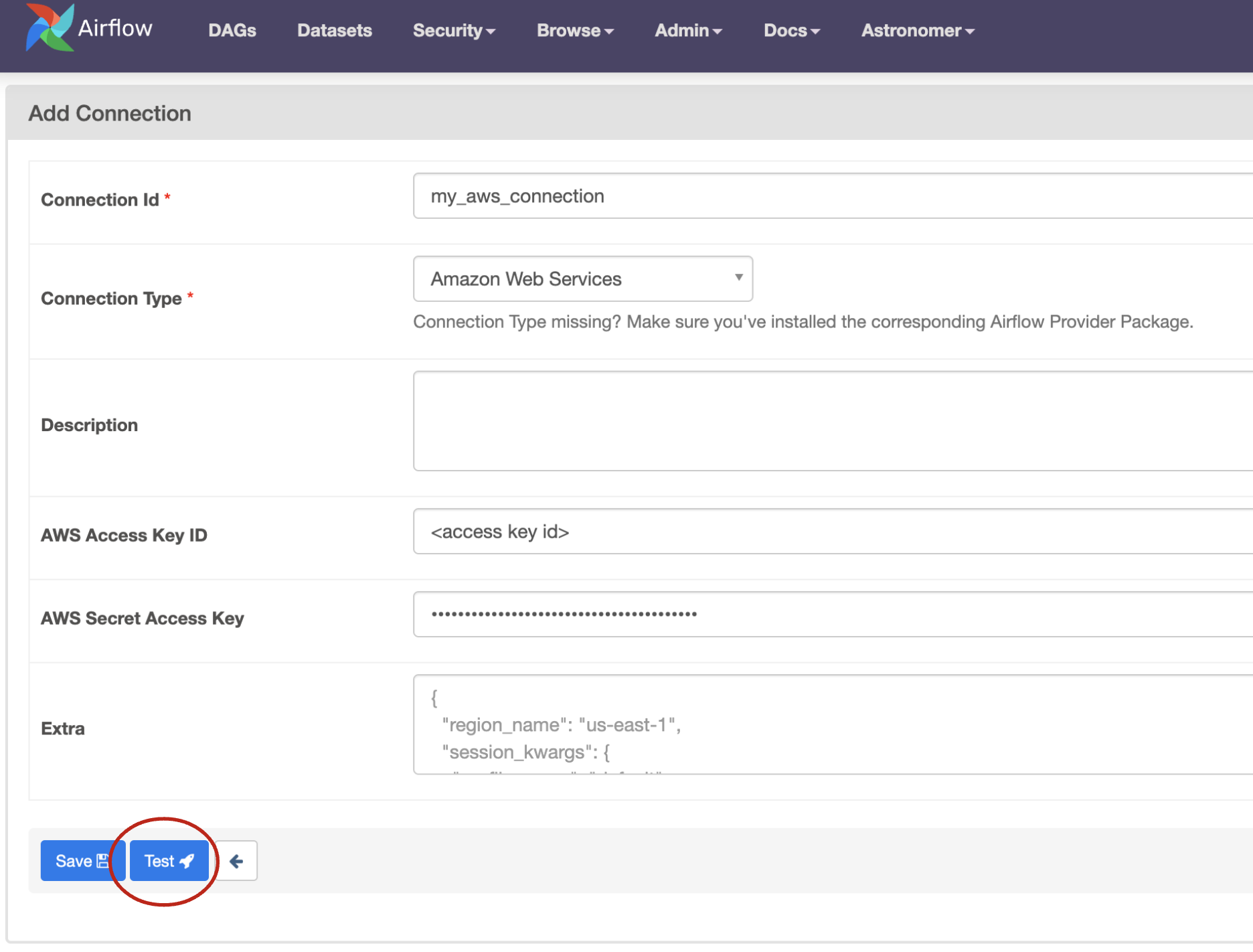

Troubleshooting connections

Typically, Airflow connections are needed to allow Airflow to communicate with external systems. Most hooks and operators expect a defined connection parameter. Because of this, improperly defined connections are one of the most common issues Airflow users have to debug when first working with their DAGs.

While the specific error associated with a poorly defined connection can vary widely, you will typically see a message with "connection" in your task logs. If you haven't defined a connection, you'll see a message such as 'connection_abc' is not defined.

The following are some debugging steps you can try:

- Review Manage connections in Apache Airflow to learn how connections work.

- Make sure you have the necessary provider packages installed to be able to use a specific connection type.

- Change the <external tool>_default connection to use your connection details or define a new connection with a different name and pass the new name to the hook or operator.

- Define connections using Airflow environment variables instead of adding them in the Airflow UI. Make sure you're not defining the same connection in multiple places. If you do, the environment variable takes precedence.

- Test if your credentials work when used in a direct API call to the external tool.

- Test your connections using the Airflow UI or the Airflow CLI. See Testing connections.

Testing connections is disabled by default in Airflow 2.7+. You can enable connection testing by defining the environment variable AIRFLOW__CORE__TEST_CONNECTION=Enabled in your Airflow environment. Astronomer recommends not enabling this feature until you are sure that only highly trusted UI/API users have "edit connection" permissions.
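A connection defined as an environment variable is named AIRFLOW_CONN_ followed by the upper-cased connection ID. Since Airflow 2.3, the value can be a JSON object with fields such as conn_type, host, login, password, port, and schema. The following stdlib-only Python sketch builds such a line, for example for an Astro project's .env file; connection_env_var is a hypothetical helper, and the connection ID, host, and credentials are placeholders.

```python
import json


def connection_env_var(conn_id: str, fields: dict) -> str:
    """Return an AIRFLOW_CONN_<CONN_ID>=<json> line defining an Airflow connection."""
    name = f"AIRFLOW_CONN_{conn_id.upper()}"
    # Compact separators keep the JSON on one line without spaces.
    value = json.dumps(fields, separators=(",", ":"))
    return f"{name}={value}"


line = connection_env_var(
    "my_postgres",  # hypothetical conn_id to pass to your hook or operator
    {
        "conn_type": "postgres",
        "host": "db.example.com",
        "port": 5432,
        "login": "airflow_user",
        "password": "placeholder",
        "schema": "analytics",
    },
)
print(line)
```

The conn_type value must match a connection type provided by an installed provider package, here the Postgres provider.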

To find information about what parameters are required for a specific connection:

- Read provider documentation in the Astronomer Registry to access the Apache Airflow documentation for the provider. Most commonly used providers will have documentation on each of their associated connection types. For example, you can find information on how to set up different connections to Azure in the Azure provider docs.

- Check the documentation of the external tool you are connecting to and see if it offers guidance on how to authenticate.

- View the source code of the hook that is being used by your operator.

You can also test connections from within your IDE by using the dag.test() method. See Debug interactively with dag.test() and How to test and debug your Airflow connections.

I need more help

The information provided here should help you resolve the most common issues. If your issue was not covered in this guide, try the following resources:

- If you are an Astronomer customer, contact our customer support.

- Post your question to Stack Overflow, tagged with airflow and other relevant tools you are using. Using Stack Overflow is ideal when you are unsure which tool is causing the error, since experts for different tools will be able to see your question.

- Join the Apache Airflow Slack and open a thread in #newbie-questions or #troubleshooting. The Airflow Slack is the best place to get answers to more complex Airflow-specific questions.

- If you found a bug in Airflow or one of its core providers, please open an issue in the Airflow GitHub repository. For bugs in Astronomer open source tools, please open an issue in the relevant Astronomer repository.

To get more specific answers to your question, include the following information in your question or issue:

- Your method for running Airflow (Astro CLI, standalone, Docker, managed services).

- Your Airflow version and the version of relevant providers.

- The full error with the error trace if applicable.

- The full code of the DAG causing the error if applicable.

- What you are trying to accomplish in as much detail as possible.

- What you changed in your environment when the problem started.